80,000 Hours Podcast

Rob, Luisa, and the 80000 Hours team

Unusually in-depth conversations about the world's most pressing problems and what you can do to solve them.

Subscribe by searching for '80000 Hours' wherever you get podcasts.

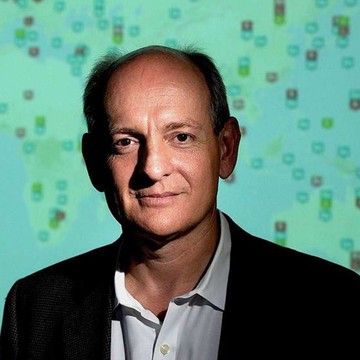

Hosted by Rob Wiblin and Luisa Rodriguez.

Episodes

Sep 7, 2020 • 28min

Ideas for high impact careers beyond our priority paths (Article)

Discover unconventional career paths that can significantly influence the future, from historical research to roles in AI labs. The discussion emphasizes the importance of creativity and expertise in policy-making. Explore the essential roles of hardware experts and information security in safe AI development. The podcast also touches on alternative avenues like public intellectualism and highlights the power of effective altruism in community engagement and non-profit entrepreneurship. Tune in for impactful insights and career inspiration!

Sep 1, 2020 • 58min

Benjamin Todd on varieties of longtermism and things 80,000 Hours might be getting wrong (80k team chat #2)

In this insightful chat, Arden Koehler, a researcher at 80,000 Hours, joins CEO Ben Todd to unpack various forms of longtermism. They explore patient longtermism versus urgent approaches, particularly concerning existential risks, and discuss how these perspectives shape career choices. The pair also tackle the balance between transferable and specialized career capital and question if they're focusing on the right problems. Their candid conversation highlights the complexities of doing good in an uncertain world.

Aug 28, 2020 • 33min

Global issues beyond 80,000 Hours’ current priorities (Article)

Delve into lesser-known global issues that could use more attention from the effective altruism community. Discover the vital role of global governance in managing risks linked to emerging technologies and the need for reforms. Explore reflections on protecting liberal democracies and the potential of technology to enhance political dialogue. Uncover complexities in long-term philanthropy that promise sustainable impact. Finally, examine overlooked climate change risks and their health implications, urging further investigation into these pressing topics.

Aug 20, 2020 • 2h 8min

#85 – Mark Lynas on climate change, societal collapse & nuclear energy

Mark Lynas, a journalist and author known for his works on climate and energy, dives into the pressing issues of climate change and the benefits of nuclear energy. He argues that a golf-ball-sized lump of uranium can provide a lifetime's worth of energy, far more efficiently than coal. Lynas tackles the misconceptions surrounding nuclear power, emphasizing its safety and the lives it has saved by reducing air pollution. He warns that unchecked climate change could lead to societal collapse and advocates for a rapid transition to sustainable energy solutions, including nuclear.

Aug 13, 2020 • 2h 58min

#84 – Shruti Rajagopalan on what India did to stop COVID-19 and how well it worked

In this engaging discussion, Shruti Rajagopalan, a Senior Research Fellow at the Mercatus Center, shares her deep insights on India's unique approach to combating COVID-19. She highlights the importance of tailoring policy responses to local contexts, such as distributing hand sanitizer in homes without reliable running water. The conversation delves into the challenges of data collection in healthcare, the complexity of mortality reporting, and innovative strategies used in densely populated areas like Dharavi. Shruti emphasizes that understanding local conditions is crucial for effective public health strategies.

Jul 31, 2020 • 2h 23min

#83 – Jennifer Doleac on preventing crime without police and prisons

Jennifer Doleac, an Associate Professor of Economics and Director of the Justice Tech Lab, dives into unconventional crime prevention methods that don't rely on police or prisons. She shares fascinating insights on how improved street lighting dramatically reduces crime rates, drawing from her research on daylight saving time's impact on robbery. Doleac also discusses the benefits of cognitive behavioral therapy and the importance of addressing lead exposure, highlighting innovative approaches for creating safer communities. A fresh take on criminal justice reform awaits!

Jul 27, 2020 • 1h 28min

#82 – James Forman Jr on reducing the cruelty of the US criminal legal system

James Forman Jr., a Yale Law School professor and Pulitzer Prize-winning author, tackles the urgent need for reform in the U.S. criminal justice system. He highlights the staggering incarceration rates, particularly among Black Americans, and debunks common misconceptions about 'violent crime.' Forman advocates for redefining the term to foster change and stresses the importance of community-focused initiatives over traditional policing. He also offers actionable strategies for listeners to engage in reform and critiques the systemic flaws that perpetuate inequity.

Jul 9, 2020 • 2h 38min

#81 – Ben Garfinkel on scrutinising classic AI risk arguments

Ben Garfinkel, a Research Fellow at Oxford’s Future of Humanity Institute, discusses the need for rigorous scrutiny of classic AI risk arguments. He emphasizes that while AI safety is crucial for positively shaping the future, many established concerns lack thorough examination. The conversation highlights the complexities of AI risks, historical parallels, and the importance of aligning AI systems with human values. Garfinkel advocates for critical reassessment of existing narratives and calls for increased investment in AI governance to ensure beneficial outcomes.

Jun 29, 2020 • 15min

Advice on how to read our advice (Article)

Explore how to effectively interpret career advice tailored to diverse personalities and situations. The discussion highlights the importance of assessing the fit of guidance and understanding its context. Listeners are encouraged to critically evaluate advice based on their unique circumstances. Expect insights on navigating career choices with a focus on personal impact, as well as considerations like publication timelines and realistic expectations for applying suggestions.

Jun 22, 2020 • 2h 13min

#80 – Stuart Russell on why our approach to AI is broken and how to fix it

Stuart Russell, a professor at UC Berkeley and co-author of a leading AI textbook, discusses the flaws in current AI development methods. He emphasizes the issue of misaligned objectives, using the example of YouTube's algorithm, which promotes extreme views to maximize engagement. Russell argues for a new approach that prioritizes human preferences and ethical considerations to better align AI systems with societal values. He highlights the urgent need for regulation and responsible frameworks to navigate the complex challenges of advanced AI.