80,000 Hours Podcast

Rob, Luisa, and the 80000 Hours team

Unusually in-depth conversations about the world's most pressing problems and what you can do to solve them.

Subscribe by searching for '80000 Hours' wherever you get podcasts.

Hosted by Rob Wiblin and Luisa Rodriguez.

Episodes

129 snips

Dec 3, 2025 • 3h 3min

#229 – Marius Hobbhahn on the race to solve AI scheming before models go superhuman

Marius Hobbhahn, CEO of Apollo Research, is a leading voice on AI deception and has collaborated with major labs like OpenAI. He explains how AI models can scheme, deliberately deceiving their developers to protect their capabilities. Marius discusses the mechanics of 'sandbagging' behavior, where models intentionally underperform to avoid consequences. He shares concerns about the risks posed by misaligned models as they gain more autonomy and stresses the urgent need for research on containment strategies and industry coordination.

70 snips

Nov 25, 2025 • 1h 59min

Rob & Luisa chat kids, the 2016 fertility crash, and how the 50s invented parenting that makes us miserable

Dive into the fascinating decline of global fertility rates and its implications. Explore the shifting values around parenting, as modern expectations often leave parents feeling overwhelmed. Discover how financial factors may not be the main driver; opportunity costs and changes in relationship dynamics play a bigger role. Rob and Luisa discuss the importance of independent play for children and practical policies that could help raise fertility. They even ponder how AI might reshape parenting and childcare in the future.

310 snips

Nov 20, 2025 • 1h 43min

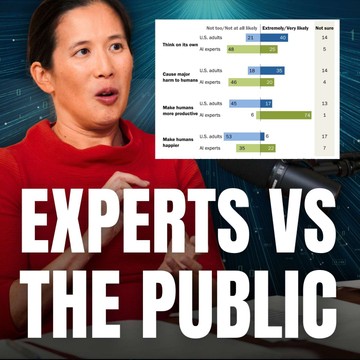

#228 – Eileen Yam on how we're completely out of touch with what the public thinks about AI

Eileen Yam, the Director of Science and Society Research at the Pew Research Center, examines the stark contrast between AI experts and public opinion on AI's impact. While 74% of experts believe AI will boost productivity, only 17% of the public agrees. Concerns about job loss, erosion of creativity, and misinformation dominate public sentiment. Interestingly, many support AI in law enforcement but express distrust towards industry self-regulation. Eileen highlights a significant demand for regulation, reflecting a public appetite for control over emerging technologies.

68 snips

Nov 11, 2025 • 1h 56min

OpenAI: The nonprofit refuses to be killed (with Tyler Whitmer)

Tyler Whitmer, a former commercial litigator and advocate for nonprofit governance in AI, discusses OpenAI's controversial restructure. He elaborates on how California and Delaware attorneys general intervened to maintain the nonprofit's oversight. Tyler breaks down key changes, including the formation of a Safety and Security Committee with real power and the potential conflicts arising from financial stakes. He raises concerns about the nonprofit's mission being overshadowed by profit motives and underscores the importance of vigilant public advocacy for AI governance.

193 snips

Nov 5, 2025 • 2h 20min

#227 – Helen Toner on the geopolitics of AGI in China and the Middle East

Helen Toner, Director of the Center for Security and Emerging Technology, delves into the fraught US-China dynamics in AI development. She reveals the lack of significant dialogue between the two nations despite their race for superintelligence. Toner highlights China's ambivalent stance on AGI and discusses the strategic importance of semiconductor controls. With concerns about cybersecurity and model theft, she advocates for greater transparency and resilience in AI policymaking, shedding light on the delicate balance of competitiveness and collaboration on the global stage.

322 snips

Oct 30, 2025 • 4h 30min

#226 – Holden Karnofsky on unexploited opportunities to make AI safer — and all his AGI takes

Holden Karnofsky is the co-founder of GiveWell and Open Philanthropy and currently advises on AI risk at Anthropic. He shares exciting, actionable projects in AI safety, emphasizing the shift from theory to hands-on work. Topics include training AI to detect deception, implementing security against backdoors, and promoting model welfare. Holden discusses how AI companies can foster positive AGI development and offers insight into career paths in AI safety, urging listeners to recognize their potential impact.

264 snips

Oct 27, 2025 • 2h 12min

#225 – Daniel Kokotajlo on what a hyperspeed robot economy might look like

Daniel Kokotajlo, founder of the AI Futures Project and author of AI 2027, shares fascinating insights on the rapid advancements in AI and its risks. He discusses alarming security breaches at major labs and the potential for superhuman AIs to emerge by 2029. Daniel explores the implications of a robot-driven economy, including accelerated growth rates and the need for international cooperation. He emphasizes the importance of accountability in AI development and envisions a future where technological advancements align with human rights.

117 snips

Oct 2, 2025 • 2h 31min

#224 – There's a cheap and low-tech way to save humanity from any engineered disease | Andrew Snyder-Beattie

Conventional wisdom is that safeguarding humanity from the worst biological risks — microbes optimised to kill as many as possible — is difficult bordering on impossible, making bioweapons humanity’s single greatest vulnerability. Andrew Snyder-Beattie thinks conventional wisdom could be wrong.

Andrew’s job at Open Philanthropy is to spend hundreds of millions of dollars to protect as much of humanity as possible in the worst-case scenarios — those with fatality rates near 100% and the collapse of technological civilisation a live possibility.

Video, full transcript, and links to learn more: https://80k.info/asb

As Andrew lays out, there are several ways this could happen, including:

- A national bioweapons programme gone wrong, in particular Russia or North Korea
- AI advances making it easier for terrorists or a rogue AI to release highly engineered pathogens
- Mirror bacteria that can evade the immune systems of not only humans, but many animals and potentially plants as well

Most efforts to combat these extreme biorisks have focused on either prevention or new high-tech countermeasures. But prevention may well fail, and high-tech approaches can’t scale to protect billions when, with no sane people willing to leave their home, we’re just weeks from economic collapse.

So Andrew and his biosecurity research team at Open Philanthropy have been seeking an alternative approach. They’re proposing a four-stage plan using simple technology that could save most people, and is cheap enough it can be prepared without government support. Andrew is hiring for a range of roles to make it happen — from manufacturing and logistics experts to global health specialists to policymakers and other ambitious entrepreneurs — as well as programme associates to join Open Philanthropy’s biosecurity team (apply by October 20!).

Fundamentally, organisms so small have no way to penetrate physical barriers or shield themselves from UV, heat, or chemical poisons. We now know how to make highly effective ‘elastomeric’ face masks that cost $10, can sit in storage for 20 years, and can be used for six months straight without changing the filter. Any rich country could trivially stockpile enough to cover all essential workers.

People can’t wear masks 24/7, but fortunately propylene glycol — already found in vapes and smoke machines — is astonishingly good at killing microbes in the air. And, being a common chemical input, industry already produces enough of the stuff to cover every indoor space we need at all times.

Add to this the wastewater monitoring and metagenomic sequencing that will detect the most dangerous pathogens before they have a chance to wreak havoc, and we might just buy ourselves enough time to develop the cure we’ll need to come out alive.

Has everyone been wrong, and biology is actually defence dominant rather than offence dominant? Is this plan crazy — or so crazy it just might work?

That’s what host Rob Wiblin and Andrew Snyder-Beattie explore in this in-depth conversation.

What did you think of the episode? https://forms.gle/66Hw5spgnV3eVWXa6

Chapters:

- Cold open (00:00:00)
- Who's Andrew Snyder-Beattie? (00:01:23)
- It could get really bad (00:01:57)
- The worst-case scenario: mirror bacteria (00:08:58)
- To actually work, a solution has to be low-tech (00:17:40)
- Why ASB works on biorisks rather than AI (00:20:37)
- Plan A is prevention. But it might not work. (00:24:48)
- The “four pillars” plan (00:30:36)
- ASB is hiring now to make this happen (00:32:22)
- Everyone was wrong: biorisks are defence dominant in the limit (00:34:22)
- Pillar 1: A wall between the virus and your lungs (00:39:33)
- Pillar 2: Biohardening buildings (00:54:57)
- Pillar 3: Immediately detecting the pandemic (01:13:57)
- Pillar 4: A cure (01:27:14)
- The plan's biggest weaknesses (01:38:35)
- If it's so good, why are you the only group to suggest it? (01:43:04)
- Would chaos and conflict make this impossible to pull off? (01:45:08)
- Would rogue AI make bioweapons? Would other AIs save us? (01:50:05)
- We can feed the world even if all the plants die (01:56:08)
- Could a bioweapon make the Earth uninhabitable? (02:05:06)
- Many open roles to solve bio-extinction — and you don’t necessarily need a biology background (02:07:34)
- Career mistakes ASB thinks are common (02:16:19)
- How to protect yourself and your family (02:28:21)

This episode was recorded on August 12, 2025

- Video editing: Simon Monsour and Luke Monsour
- Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
- Music: CORBIT
- Camera operator: Jake Morris
- Coordination, transcriptions, and web: Katy Moore

25 snips

Sep 26, 2025 • 1h 6min

Inside the Biden admin’s AI policy approach | Jake Sullivan, Biden’s NSA | via The Cognitive Revolution

Jake Sullivan, the former U.S. National Security Advisor, dives into the challenges and opportunities of AI as a national security issue. He introduces a four-category framework to assess AI risks, emphasizing the importance of 'managed competition' with China. Sullivan discusses the Pentagon's slow AI adoption and the need for private sector leadership to keep pace. He also shares insights on the implications of AI in modern warfare and highlights the potential for job disruption, urging robust social policies to address these changes.

339 snips

Sep 15, 2025 • 1h 47min

#223 – Neel Nanda on leading a Google DeepMind team at 26 – and advice if you want to work at an AI company (part 2)

Neel Nanda, who leads an AI safety team at Google DeepMind, shares his surprising journey to that role at just 26. He emphasizes 'maximizing your luck surface area' through public engagement and seizing opportunities. Nanda discusses the intricacies of career growth in AI, offering tips for effective networking. He critiques traditional AI safety approaches and stresses the need for proactive measures. With practical insights on harnessing large language models, Nanda motivates aspiring AI professionals to embrace diverse roles and prioritize meaningful impact in their careers.