LessWrong (Curated & Popular)

“METR: Measuring AI Ability to Complete Long Tasks” by Zach Stein-Perlman


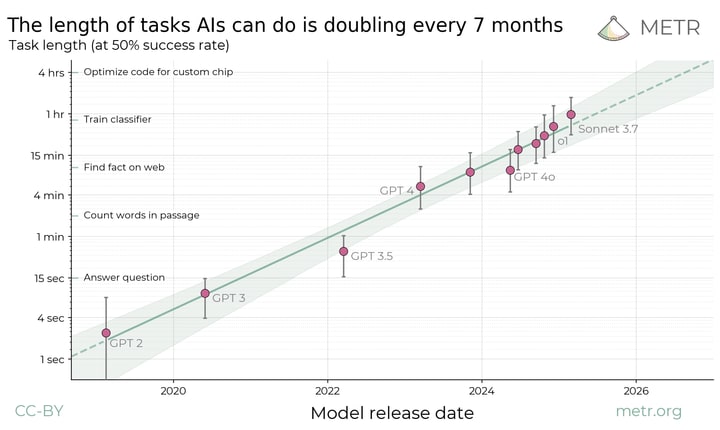

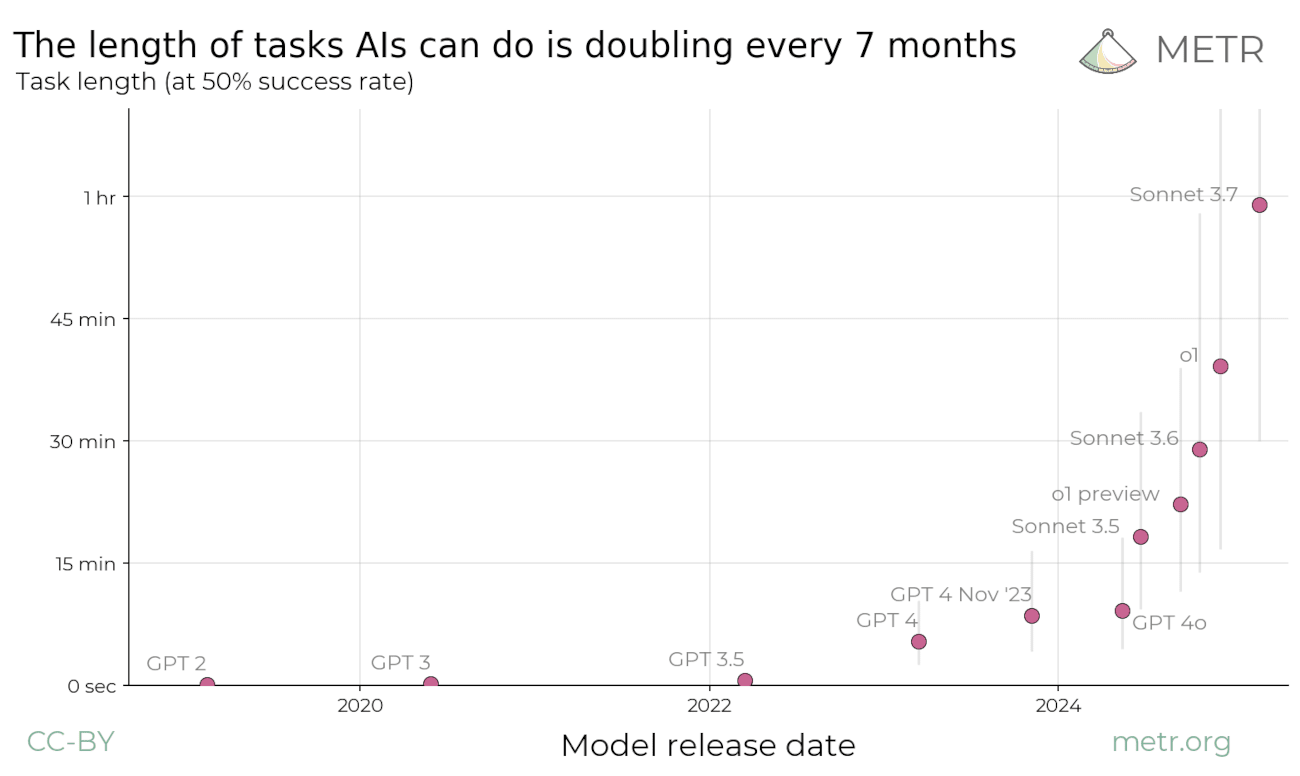

Apr 7, 2025

Zach Stein-Perlman, author of a post on measuring AI task performance, discusses a metric that evaluates AI capabilities by the length of the tasks they can complete. He reports that the length of tasks AI can complete has been doubling approximately every seven months for the last six years. The conversation covers the implications of this rapid progress, the difficulty AI still has with longer tasks, and the urgency of preparing for a future in which AI could autonomously handle a significant share of the work now done by humans.

AI Snips


METR: Measuring AI Task Length

- AI performance is measured by the length of the tasks a model can complete, and this measure has grown exponentially over the past six years.

- Extrapolating this trend predicts AI agents completing complex software tasks within five years.

AI Task Length Predicts Success

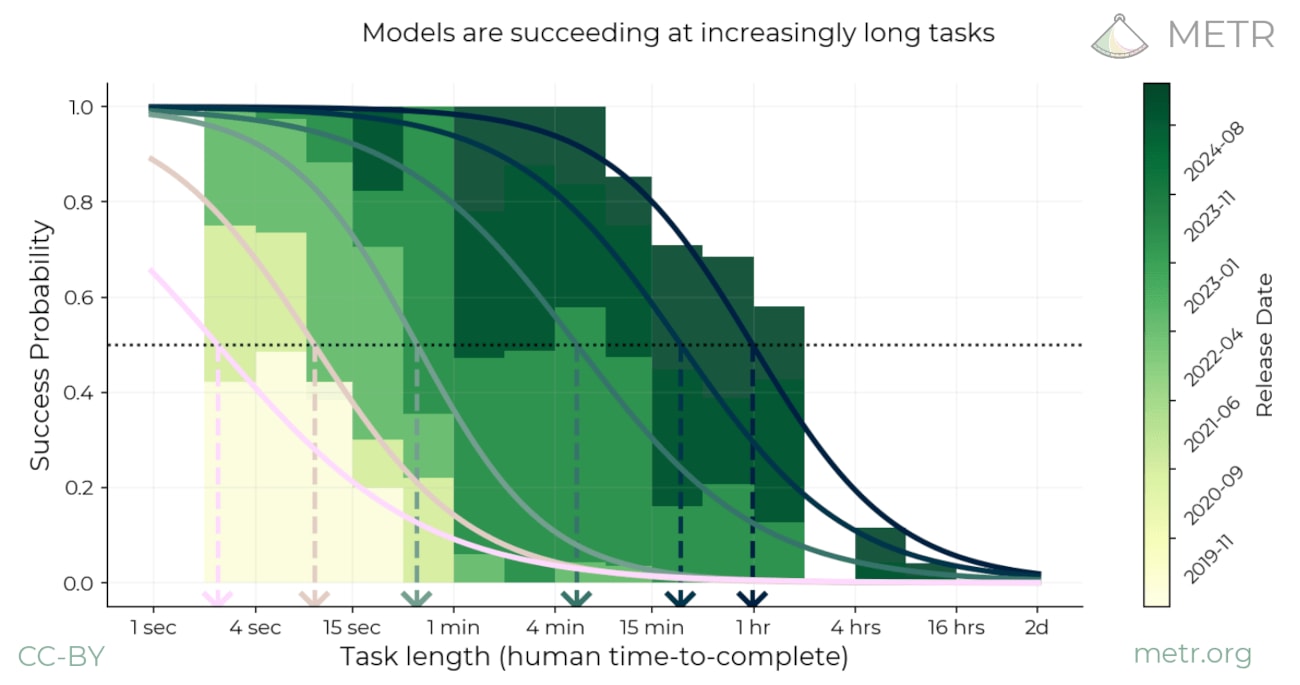

- Task length is a useful measure of AI capabilities because models struggle with longer sequences of actions.

- Current models succeed on short tasks (under 4 minutes) but struggle with longer ones (over 4 hours).

Exponential Growth in AI Task Completion

- Task length completed by AI has doubled every seven months for six years.

- Extrapolating this trend suggests AI will handle week-long tasks in 2-4 years, a forecast that holds even after allowing for measurement errors.
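The arithmetic behind this extrapolation can be sketched in a few lines of Python. This is only an illustration of a constant doubling time, not the method used in the post: the seven-month doubling time comes from the notes above, while the starting horizon of one hour and the 40-hour definition of a "week-long" task are assumptions chosen for the example.

```python
import math

# Doubling time quoted in the episode notes.
DOUBLING_MONTHS = 7.0

# Assumptions for illustration only (not stated in the episode notes):
START_HOURS = 1.0       # hypothetical task length current models can complete
WORK_WEEK_HOURS = 40.0  # hypothetical definition of a "week-long" task


def months_until(target_hours: float, start_hours: float,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months for the task-length horizon to grow from start to target,
    assuming it doubles once every `doubling_months`."""
    if start_hours <= 0 or target_hours <= 0:
        raise ValueError("task lengths must be positive")
    return doubling_months * math.log2(target_hours / start_hours)


def horizon_after(months: float, start_hours: float,
                  doubling_months: float = DOUBLING_MONTHS) -> float:
    """Task-length horizon (in hours) after `months` of steady doubling."""
    return start_hours * 2 ** (months / doubling_months)


if __name__ == "__main__":
    months = months_until(WORK_WEEK_HOURS, START_HOURS)
    print(f"{months:.1f} months (~{months / 12:.1f} years)")
```

Under these assumed inputs the answer comes out at a little over three years, inside the 2-4 year range quoted above. Because the result depends only on the logarithm of the ratio between target and starting length, even a large error in the starting horizon moves the date by only a few doubling periods, which is one way to read the claim that the forecast tolerates measurement errors.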