LessWrong (Curated & Popular)

“Do confident short timelines make sense?” by TsviBT, abramdemski

Jul 26, 2025

In this discussion, AI researcher Abram Demski shares his views on timelines for achieving artificial general intelligence (AGI) and their implications for existential risk. He largely agrees with the perspectives outlined in the AI 2027 report. The conversation covers the limitations of current AI models, the nature of creativity in machines versus humans, and the difficulty of navigating public discourse on technological advancements. It also examines the challenges of predicting AGI timelines and argues for comprehensive approaches to mitigating risk.

AI Snips


AI Progress vs Creativity Gap

- Current AI progress suggests steady improvement from basic to graduate-level competency in various tasks.

- The leap to creative, novel capabilities essential for true AGI is currently unproven and likely requires more breakthroughs.

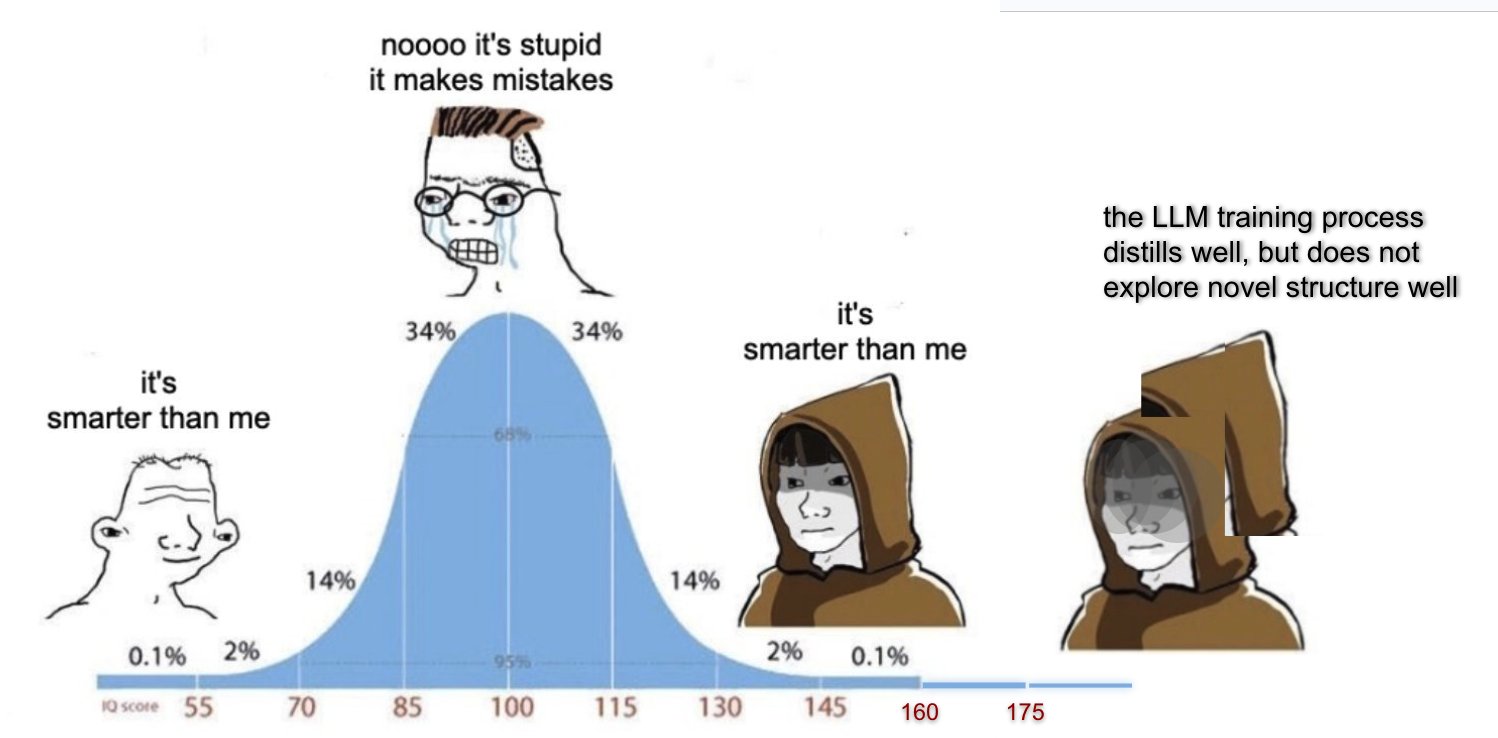

Limits of Reasoning in LLMs

- Large language models (LLMs) excel at memorizing and reasoning over existing human knowledge, approaching the deductive closure of that knowledge.

- However, they remain limited in generating valid novel proofs and novel applications of knowledge, which points to alignment and generalization issues.

Whack-a-Mole in AI Research

- The whack-a-mole analogy is used to argue that AI safety needs systematic, preemptive solutions rather than patchwork fixes.

- But AI capabilities research benefits from many retries, allowing incremental progress despite unknown problems.