NB: I am on the Google DeepMind language model interpretability team, but the arguments and views in this post are my own and shouldn't be read as a team position.

“It would be very convenient if the individual neurons of artificial neural networks corresponded to cleanly interpretable features of the input. For example, in an ‘ideal’ ImageNet classifier, each neuron would fire only in the presence of a specific visual feature, such as the color red, a left-facing curve, or a dog snout.” (Elhage et al., Toy Models of Superposition)

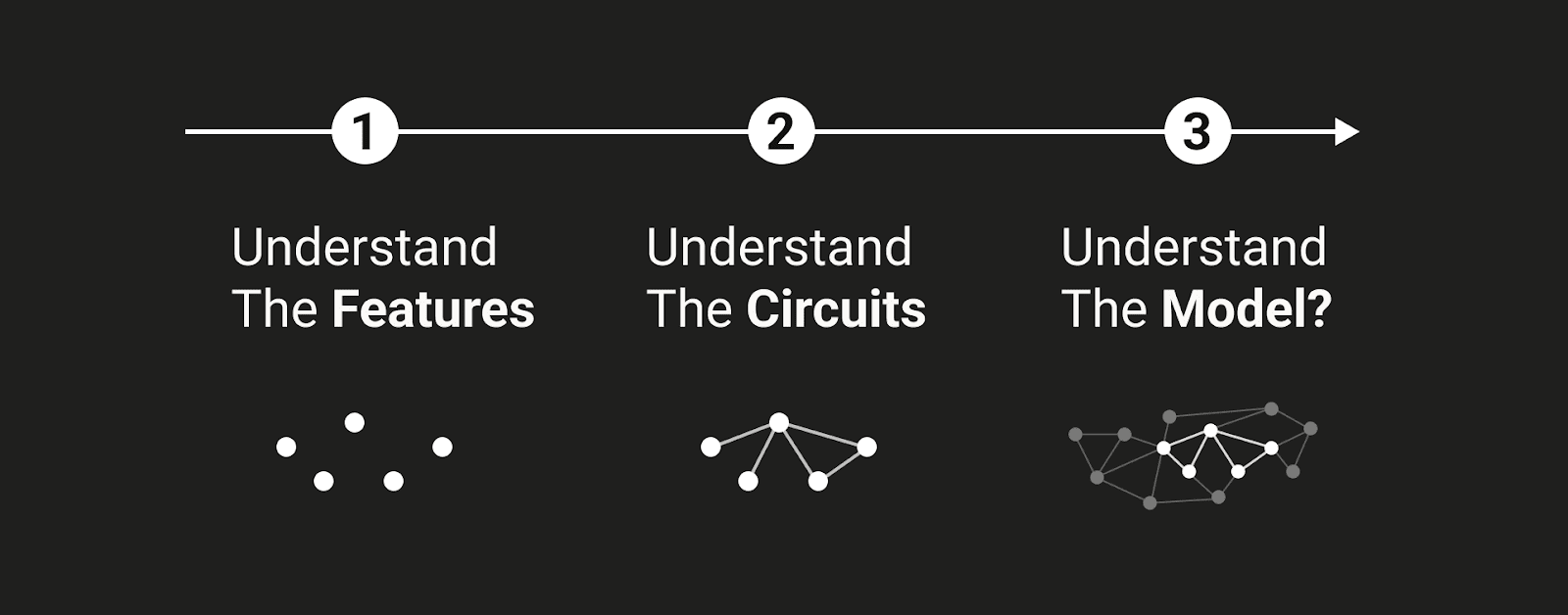

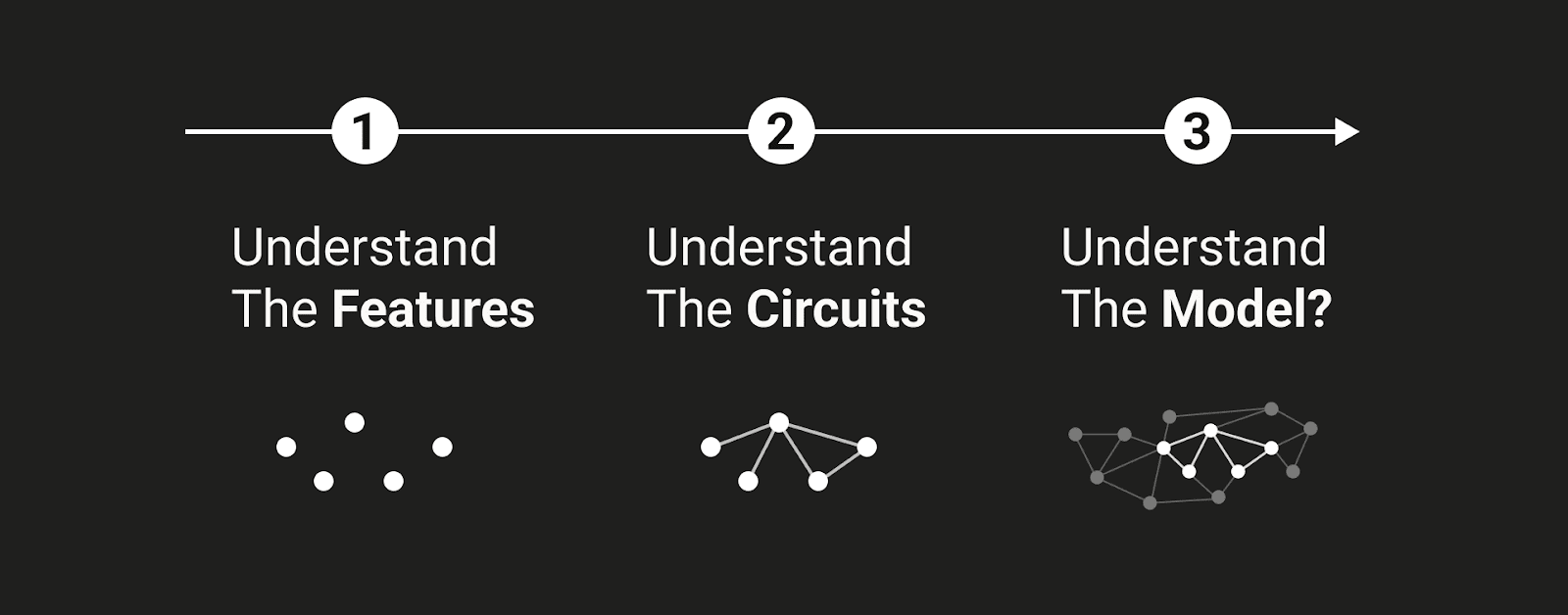

Recently, much attention in mechanistic interpretability, the field that tries to explain the behavior of neural networks in terms of interactions between lower-level components, has focused on extracting features from a model's representation space. The predominant methodology for this has used variations on the sparse autoencoder, in a series of papers [...]

---

Outline:

(09:56) Monosemanticity

(19:22) Explicit vs Tacit Representations

(26:27) Conclusions

The original text contained 12 footnotes which were omitted from this narration. The original text contained 1 image which was described by AI.

---

First published: August 2nd, 2024

Source: https://www.lesswrong.com/posts/tojtPCCRpKLSHBdpn/the-strong-feature-hypothesis-could-be-wrong

---

Narrated by TYPE III AUDIO.

---

Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
