Oxide and Friends

Oxide Computer Company

Oxide hosts a weekly Discord show where we discuss a wide range of topics: computer history, startups, Oxide hardware bringup, and other topics du jour. These are the recordings in podcast form.

Join us live (usually Mondays at 5pm PT) https://discord.gg/gcQxNHAKCB

Subscribe to our calendar: https://calendar.google.com/calendar/ical/c_318925f4185aa71c4524d0d6127f31058c9e21f29f017d48a0fca6f564969cd0%40group.calendar.google.com/public/basic.ics

Episodes

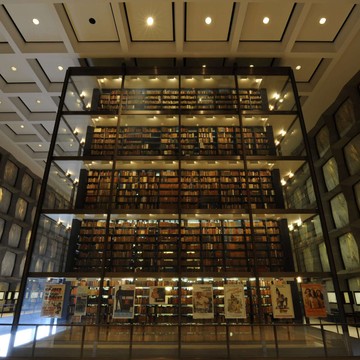

Mentioned books

37 snips

Nov 1, 2024 • 1h 35min

Books in the Box IV

Nick Gideo, Tom Lyon, and Oliver Herman bring their literary insights to the table, discussing gems from their recent reading such as 'The Big Score' and 'Creativity, Inc.' They weigh physical books against e-readers, share nostalgic tech tales from phone-phreaking culture, and debate the role of semiconductors in today's geopolitics. The conversation ranges from the ethical lessons of history, as in 'IBM and the Holocaust', to the more whimsical journeys of tech entrepreneurship, weaving together literature and technology.

18 snips

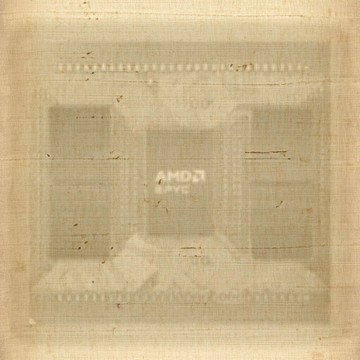

Oct 16, 2024 • 1h 54min

Unshrouding Turin (or Benvenuto a Torino)

Join experts George Cozma, Robert Mustacchi, Eric Aasen, and Nathanael Huffman as they dive into Turin, AMD's groundbreaking 5th-generation EPYC processor. George, an insightful tech blogger, shares his analysis of the new architectures and how they compete with Intel's offerings. The conversation covers advances in power management, the new eSPI boot feature, and the ongoing challenges of high-density PCB design. They also explore the significance of P4 programmability in networking and how server technology continues to evolve.

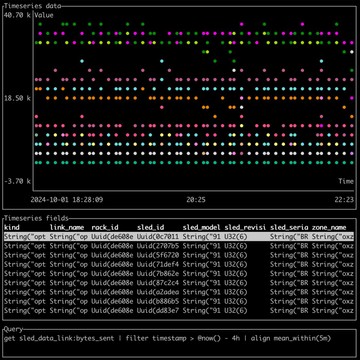

Oct 2, 2024 • 1h 35min

Querying Metrics with OxQL

Ben Naecker, a developer at Oxide, joins Bryan Cantrill and Adam Leventhal to discuss the creation of OxQL, a new query language for metrics. With some humor, they debate when it makes sense to invent a new query language rather than rely on an established one like SQL. They explore the benefits of OxQL's piped syntax, its emphasis on clarity, and how its more advanced features fit together. The conversation also covers efficient data modeling for time series, parsing techniques, and what ClickHouse offers for database optimization, all while addressing skepticism about the need for new domain-specific languages.

Sep 26, 2024 • 1h 40min

RTO or GTFO

Matt Amdur, a friend of the pod, joins Chris to dive into the hot topic of return-to-office mandates. They explore whether in-office experiences spark genuine inspiration or are simply rooted in nostalgia. The duo humorously critiques tech mishaps during remote meetings and discusses the quirky joys of workplace games like Z-ball. As they navigate hybrid work dynamics, they emphasize the value of in-person collaboration while acknowledging employee anxieties amid company layoffs. Their banter keeps the discussion of how workplace culture should adapt both lively and grounded.

Sep 20, 2024 • 1h 22min

Reflecting on Founder Mode

Dive into a discussion of founder mode and its implications for the startup world. Explore the dynamic between Steve Jobs and John Sculley, and the crucial balance between innovative thinking and experienced leadership. Consider the challenges of stepping into management roles and of hiring. Hear anecdotes, including a humorous quest for a signed book, along with reflections on the importance of documentation. The hosts blend insightful analysis with light-hearted storytelling, offering a mix of tech culture and personal experience.

Aug 30, 2024 • 1h 42min

RFDs: The Backbone of Oxide

Robert Mustacchi, the most prolific author of Requests for Discussion (RFDs) at Oxide, joins Ben Leonard, who enhanced Oxide's RFD site with features like full-text search. They describe how RFDs streamline communication and decision-making, with over 500 written in just five years. The duo discusses the importance of documentation formats and the complexities of integrating with GitHub. They emphasize RFDs as vital to transparency, collaboration, and the evolution of Oxide's culture.

Aug 21, 2024 • 1h 34min

Whither CockroachDB?

Dave Pacheco, an expert in engineering decisions, joins the conversation to share insights on CockroachDB. He discusses its shift from open-source to proprietary licensing and what that means for its community. The hosts emphasize rigorous evaluation when choosing technologies, with anecdotes about challenges faced with databases like Postgres. Pacheco explores CockroachDB's strengths in cloud environments and the critical importance of monitoring latency and maintaining data integrity. The conversation also considers how CockroachDB may evolve from here.

Aug 14, 2024 • 1h 58min

The Saga of Sagas

Join Dave Pacheco, a developer of Steno; Eliza Weisman, who helps run the control plane; and Andrew as they dive into the world of distributed sagas. They discuss the challenges of coordinating complex operations across microservices and share their solutions for maintaining data integrity. The trio highlights the differences between sagas and traditional workflows and tackles issues like automated testing and state management. Tune in for insights on collaborative development and the evolution of their ambitious project!

Aug 9, 2024 • 1h 33min

Pragmatic LLM usage with Nicholas Carlini

Nicholas Carlini, an expert in pragmatic uses of LLMs, shares his insights on harnessing these tools for real-world problem-solving. He discusses how to balance trust with critical engagement when using LLMs for programming, emphasizing their role in improving efficiency. Humorous anecdotes about interactions with AI highlight a generational shift in how people adopt new technology. The conversation also critiques AI advertisements, cautioning against hype and advocating for realistic expectations about what LLMs can do.

Jul 25, 2024 • 1h 42min

CrowdStrike BSOD Fiasco with Katie Moussouris

Security expert Katie Moussouris joins to discuss the CrowdStrike BSOD fiasco, examining its technical failures, related cybersecurity incidents like SolarWinds, and the role of the Cyber Safety Review Board. The conversation covers how to persuade organizations to invest in security, how to learn from failures, and why research bodies matter in cybersecurity.