LessWrong (Curated & Popular)

"AlgZoo: uninterpreted models with fewer than 1,500 parameters" by Jacob_Hilton

Jan 27, 2026

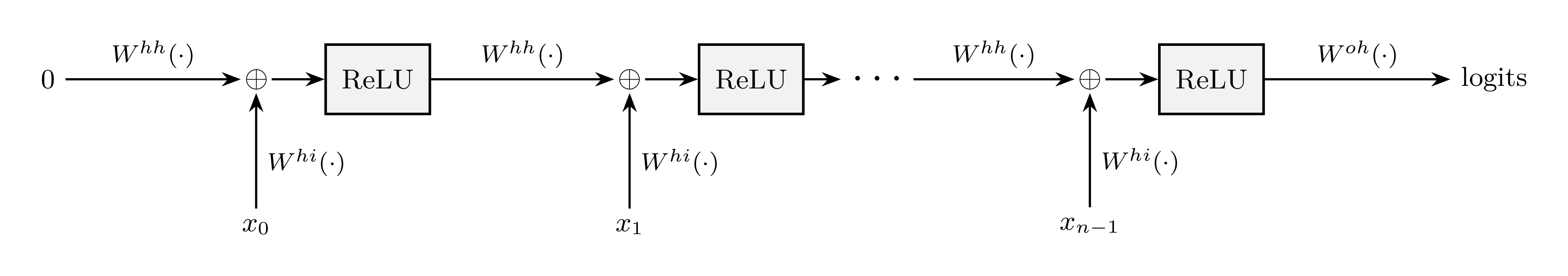

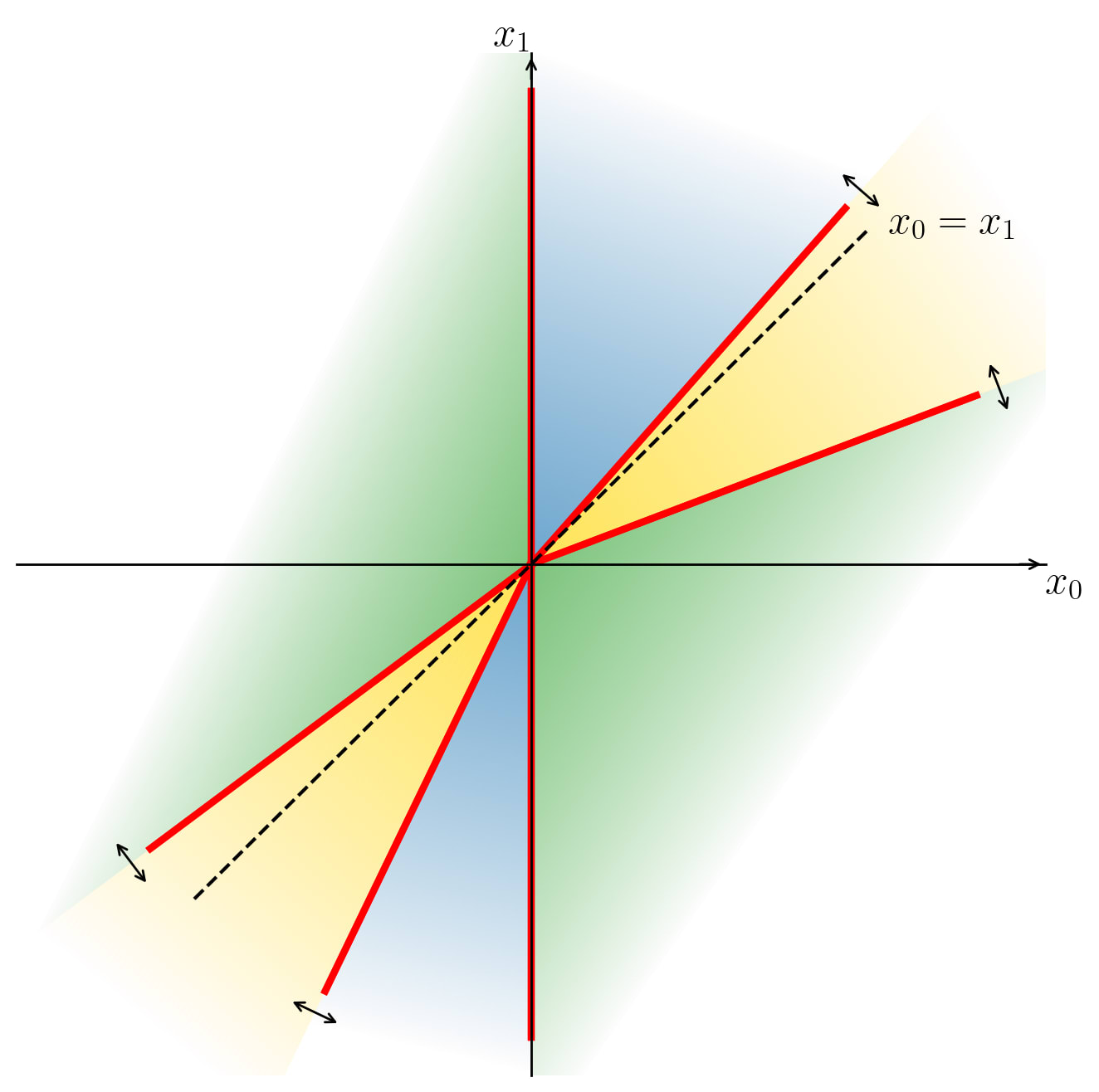

Tiny RNNs and transformers trained on algorithmic tasks turn out to be surprisingly difficult testbeds for mechanistic interpretability. The episode walks through models ranging from 8 to 1,408 parameters and explains why fully understanding systems with only a few hundred parameters matters. Concrete case studies include second-argmax RNNs at several hidden sizes and analyses of their decision geometry and neuron subcircuits. The episode closes with a clear challenge to scale up interpretability methods.
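To make the second-argmax task concrete, here is a minimal sketch of how its labels might be generated. It assumes the inputs are sequences of i.i.d. standard normal numbers and that the target is the position of the second-largest entry. The input distribution and the function names `second_argmax` and `make_batch` are illustrative assumptions, not taken from AlgZoo itself.

```python
import numpy as np

def second_argmax(x: np.ndarray) -> np.ndarray:
    """Return, for each row of x, the index of its second-largest entry."""
    # argsort sorts ascending, so the second-to-last column holds the
    # index of the second-largest value in each row.
    return np.argsort(x, axis=-1)[..., -2]

def make_batch(batch_size: int, seq_len: int, seed: int = 0):
    """Hypothetical data generator: Gaussian sequences and their labels."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((batch_size, seq_len))
    return x, second_argmax(x)

x = np.array([[0.3, 2.0, -1.0, 1.5]])
print(second_argmax(x))  # [3]: 1.5 at position 3 is second only to 2.0
```

A model solving this task has to track both the running maximum and the runner-up as it reads the sequence. This is part of why even small RNNs trained on it are not trivial to interpret.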

AI Snips

Explanation As Mechanistic Loss Estimate

- ARC (the Alignment Research Center) defines an explanation as a mechanistic estimate of a model's loss, derived deductively from the model's structure.

- They contrast mechanistic estimates with sampling-based inductive estimates and prioritize deducing accuracy from model internals.
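As a toy illustration of the distinction, consider a hypothetical linear classifier sign(w·x) scored against a target sign(v·x) on Gaussian inputs x ~ N(0, I). This is not one of the AlgZoo models. Its error rate can be deduced from the weights alone, because the two signs disagree with probability θ/π, where θ is the angle between w and v. The same quantity can also be estimated inductively by running the model on random inputs. The function names below are assumptions made for this sketch.

```python
import numpy as np

def mechanistic_error(w: np.ndarray, v: np.ndarray) -> float:
    """Deduce the error rate from the weights: P[sign(w.x) != sign(v.x)] = angle / pi."""
    cos = np.dot(w, v) / (np.linalg.norm(w) * np.linalg.norm(v))
    theta = np.arccos(np.clip(cos, -1.0, 1.0))
    return float(theta / np.pi)

def sampled_error(w: np.ndarray, v: np.ndarray, n_samples: int, seed: int = 0) -> float:
    """Estimate the same error rate inductively, by evaluating on random Gaussian inputs."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, len(w)))
    return float(np.mean(np.sign(x @ w) != np.sign(x @ v)))

w = np.array([1.0, 0.0])
v = np.array([1.0, 1.0])
print(mechanistic_error(w, v))       # about 0.25, since the angle is pi/4
print(sampled_error(w, v, 100_000))  # close to 0.25, up to sampling noise
```

The deductive estimate uses no samples at all. The sampling estimate needs no understanding of the model, but its error shrinks only as more inputs are evaluated.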

Evaluate Explanations With MSE And Surprise

- Evaluate mechanistic estimates by comparing their mean squared error (MSE) as a function of compute against that of sampling baselines.

- Use surprise accounting as a stricter metric, which measures how much unexpected information remains once the explanation is taken into account.
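Here is a minimal sketch of the sampling side of the MSE-versus-compute comparison. It assumes that "compute" is measured simply as the number of model evaluations n. Under that assumption, the sample-mean estimate of an accuracy p is unbiased with MSE p(1 - p)/n, so its error falls as 1/n. A mechanistic estimate would be plotted on the same axes. The model itself is replaced here by hypothetical Bernoulli(p) correctness outcomes.

```python
import numpy as np

def sampling_mse(p: float, n: int, trials: int = 2000, seed: int = 0) -> float:
    """Empirical MSE of estimating accuracy p from n random evaluations."""
    rng = np.random.default_rng(seed)
    # Each row simulates n evaluations of a model that is correct with probability p.
    correct = rng.random((trials, n)) < p
    estimates = correct.mean(axis=1)
    return float(np.mean((estimates - p) ** 2))

p = 0.9  # hypothetical true accuracy
for n in [10, 100, 1000]:
    theory = p * (1 - p) / n  # variance of the mean of n Bernoulli(p) draws
    print(n, round(theory, 6), round(sampling_mse(p, n), 6))
```

The empirical column should track the p(1 - p)/n column closely. A mechanistic estimate beats this baseline at a given compute budget only if its squared error falls below that curve.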

Matching Sampling As A Baseline

- ARC conjectures a matching sampling principle: given enough advice and compute, mechanistic procedures can match the MSE of random sampling.

- This makes random sampling a strong baseline that algorithmic explanations should aim to match.
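One simplified way to read the principle numerically is shown below. It keeps the same assumptions as a plain sampling estimate of an accuracy p, with compute counted in model evaluations. Sampling needs about n = p(1 - p)/MSE evaluations to reach a target MSE. A mechanistic estimate "matches" sampling at a given budget if its MSE is no worse than what that many samples would achieve. The helper names and the cost model are hypothetical and are not ARC's formal statement of the conjecture.

```python
import math

def samples_to_match(p: float, target_mse: float) -> int:
    """Smallest n with p(1 - p)/n <= target_mse: the sampling compute
    needed to reach a given MSE when estimating an accuracy p."""
    return math.ceil(p * (1 - p) / target_mse)

def matches_sampling(p: float, mech_mse: float, mech_cost: float,
                     cost_per_sample: float = 1.0) -> bool:
    """Hypothetical check: is the mechanistic MSE at least as low as the MSE
    that sampling would achieve with the same compute budget?"""
    n = mech_cost / cost_per_sample  # samples affordable with the same compute
    return mech_mse <= p * (1 - p) / n

print(samples_to_match(0.5, 0.01))        # 25 samples reach an MSE of 0.01 at p = 0.5
print(matches_sampling(0.5, 0.001, 100))  # True: 100 samples would give MSE 0.0025
```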