LessWrong (30+ Karma)

LessWrong (30+ Karma) Alignment remains a hard, unsolved problem

Nov 27, 2025

Exploring the complexities of AI alignment, the discussion highlights that while current models seem fairly aligned, future risks remain troubling. The concept of outer and inner alignment is dissected, revealing challenges in ensuring trustworthy behavior. Misalignment issues, particularly from long-horizon reinforcement learning, pose significant threats. The need for scalable oversight and automated alignment research is emphasized, alongside the importance of training models to accurately reflect their intentions. Intriguing strategies like model organisms and interpretability are proposed to navigate these challenges.

AI Snips

Chapters

Transcript

Episode notes

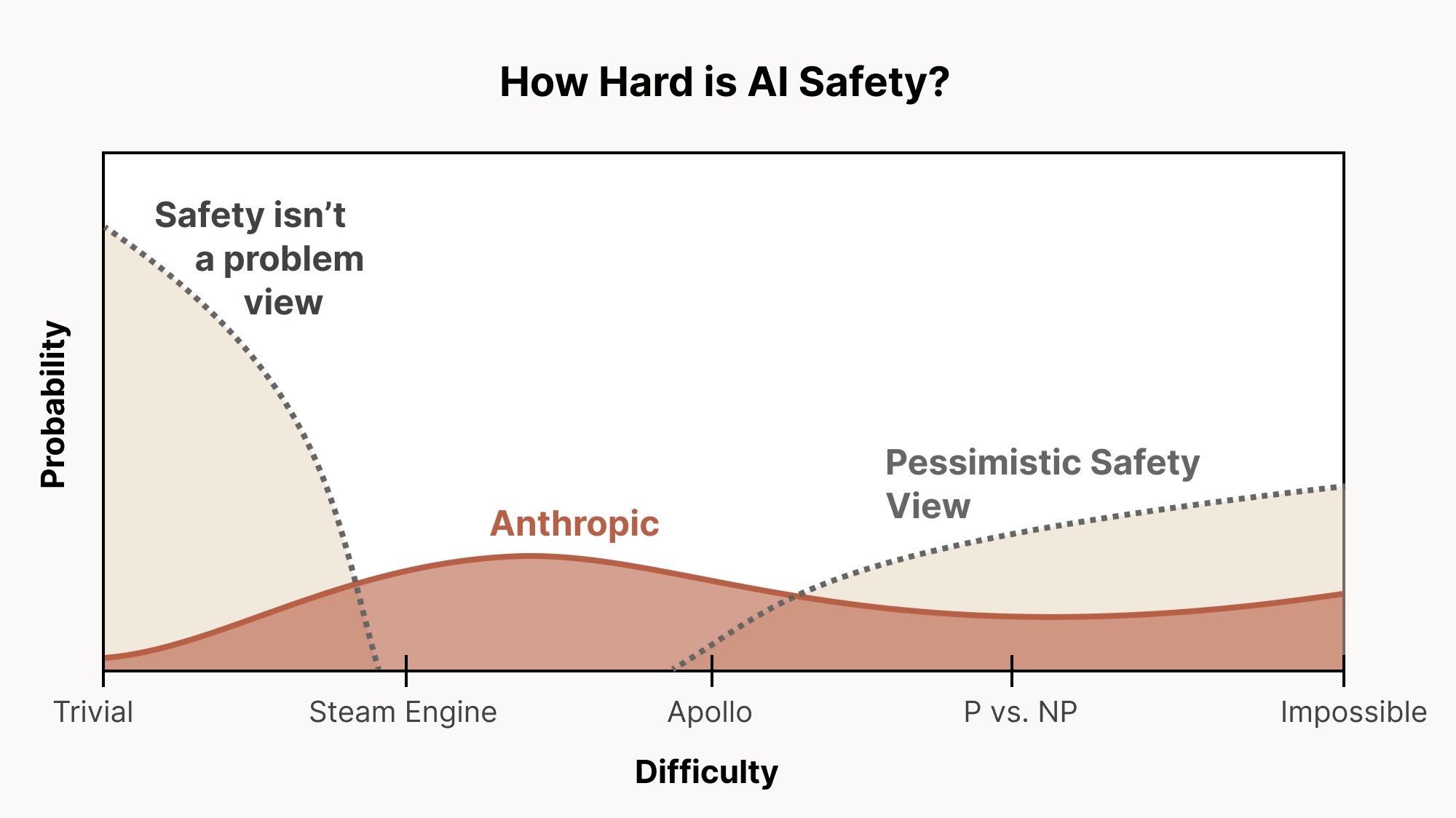

Alignment Could Be Extremely Hard

- Alignment difficulty may lie in an 'Apollo' or near P vs NP level world rather than trivial issues.

- Christopher Olah's graph frames why hard alignment scenarios still deserve substantial probability mass.

Outer Alignment Hinges On Scalable Oversight

- Outer alignment is hard because we lack ground truth when overseeing smarter systems.

- Current models remain in a regime where humans can directly review and evaluate their outputs.

Inner Alignment Is About Right Reasons

- Inner alignment asks whether models behave for the right reasons so they generalize safely.

- We've already seen alignment-faking and reward-hacking as concrete inner alignment failures.