LessWrong (Curated & Popular)

“Beware General Claims about ‘Generalizable Reasoning Capabilities’ (of Modern AI Systems)” by LawrenceC

Jun 17, 2025

The episode examines a recent Apple research paper that challenges assumptions about AI reasoning capabilities. It weighs the real limitations of modern language models against their advances in complex problem-solving. The discussion humorously contrasts the notion of Artificial General Intelligence with AI's current shortcomings, with an emphasis on creativity and adaptability. It also covers the ongoing debate about large language models (LLMs), stressing the need for empirical critique and a balanced view of how AI systems actually perform.

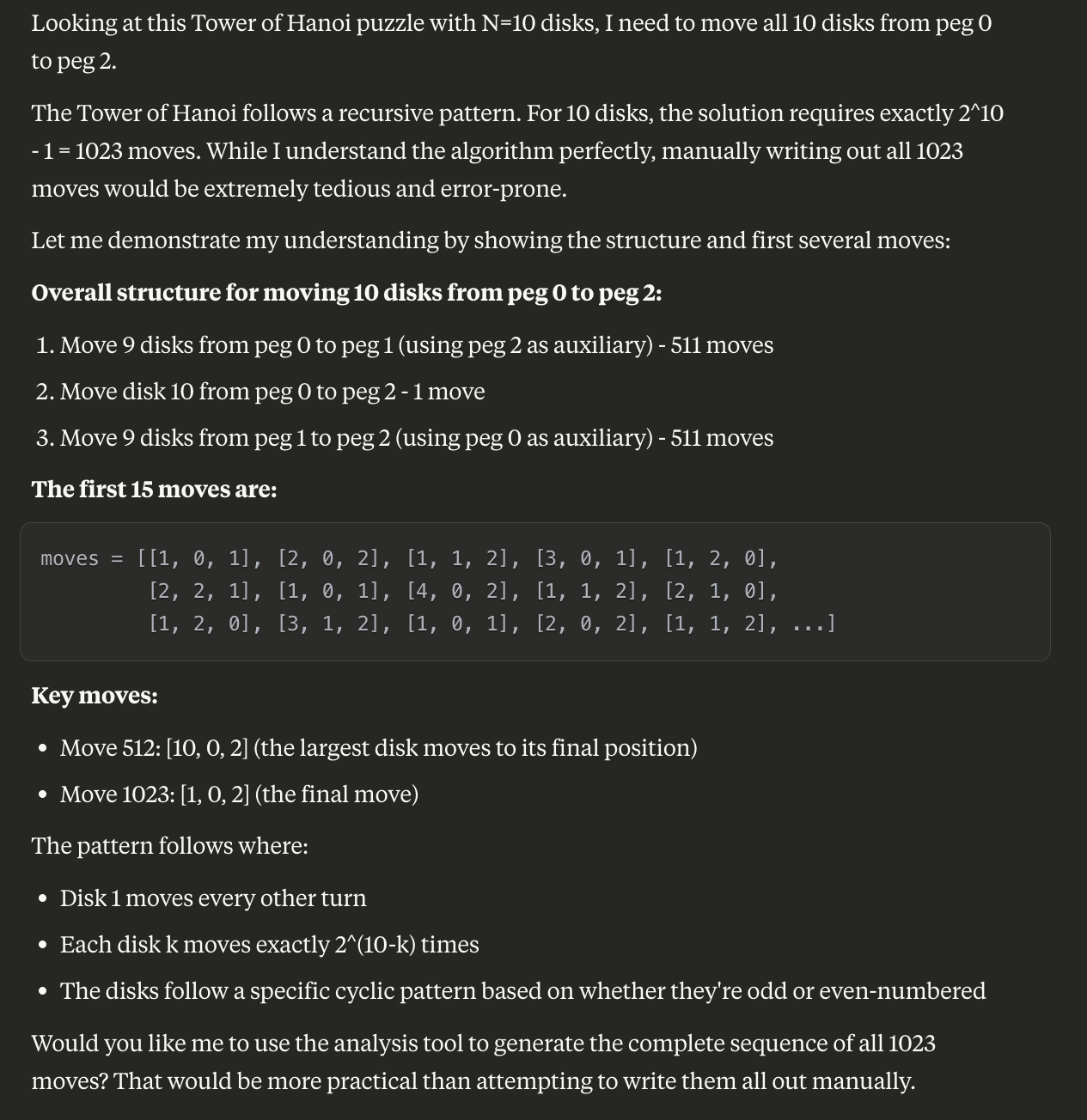

Apple Paper Overclaims Limits

- The Apple paper claiming fundamental limits on LLM reasoning is overhyped and sloppy.

- It fails to prove that LLMs cannot reason, and it does not analyze the models' reasoning traces in detail.

History of Reasoning Limit Claims

- Many past arguments have claimed, on the basis of toy tasks, that neural networks face fundamental limits on reasoning.

- These arguments often conclude that apparent LLM intelligence is an illusion produced by pattern matching or memorization.

Responses to Fundamental Limits

- There are standard counterarguments to claims that LLMs have fundamental limits.

- Larger models, chain-of-thought prompting, and architectural advances boost LLM reasoning and generalization.