Programming Throwdown

Programming Throwdown 172: Transformers and Large Language Models

Mar 11, 2024

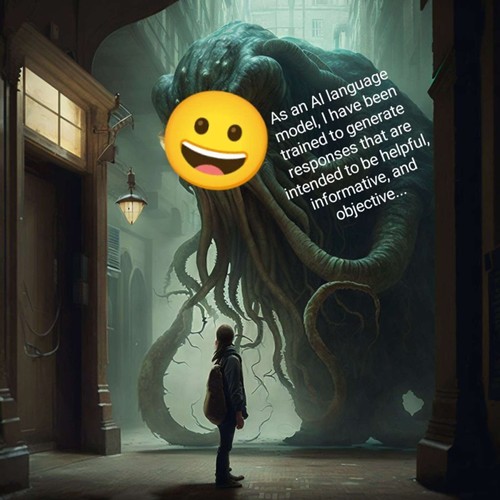

This episode explores transformers and large language models, covering latent variables, encoders, decoders, attention layers, and the history of RNNs. It also discusses the vanishing gradient problem that LSTMs were designed to mitigate, self-supervised learning, and direct preference optimization.

Chapters



Introduction

00:00 • 2min

Exploring Remote Work and WeWork Spaces

01:31 • 22min

Exploring PID Controllers and Gemma Models in Large Language Models

23:27 • 5min

Exploring Model Parameters, Memory Usage, and Fine-Tuning in Large Language Models

28:32 • 3min

Exploring The Wheel of Time Series & TV Adaptation

31:03 • 3min

Exploring YouTube Recommendations and Game Development

33:49 • 15min

Challenges and Solutions in Neural Networks

48:23 • 25min

Evolution and Challenges in Scaling Language Models

01:13:07 • 10min

Speculating on the Future of Jobs in an AI-dominated World

01:23:25 • 3min