Programming Throwdown

Programming Throwdown 180: Reinforcement Learning

Mar 17, 2025

Dive into the world of reinforcement learning as the hosts first break down the mechanics of supervised and unsupervised learning. Hear the debate on running AI models locally versus in the cloud, alongside insights from NASA's software development rules. A focus on value-based approaches such as SARSA and Q-Learning leads into policy optimization techniques, including actor-critic methods. The episode also explores reinforcement learning from human feedback and its potential to improve AI decision-making.

AI Snips

Supervised Learning: Strengths And Limits

- Supervised learning trains models with ground-truth examples and a loss function to measure error.

- It interpolates well within the data it has seen but struggles with out-of-distribution inputs, such as adversarially modified stop signs.
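
As a rough illustration of the two points above, here is a minimal sketch in plain Python. The target function `y = x^2`, the linear model, and the learning-rate and step values are all assumptions chosen for the example, not anything taken from the episode. The model is trained against ground-truth labels with a mean-squared-error loss, then queried inside and outside the range it was trained on.

```python
# Ground-truth examples: inputs in [0, 1], labeled by a hypothetical target y = x^2.
xs = [i / 20 for i in range(21)]
ys = [x * x for x in xs]

def mse(w, b, xs, ys):
    """Loss function: mean squared error of the linear model w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, lr=0.5, steps=2000):
    """Fit w and b by plain gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train(xs, ys)
train_loss = mse(w, b, xs, ys)

# Error at a point inside the training range versus far outside it.
in_dist_error = abs((w * 0.5 + b) - 0.5 ** 2)
out_dist_error = abs((w * 3.0 + b) - 3.0 ** 2)

print(f"train loss: {train_loss:.4f}")
print(f"error at x=0.5 (seen range): {in_dist_error:.3f}")
print(f"error at x=3.0 (unseen range): {out_dist_error:.3f}")
```

The fit looks reasonable wherever training data exists, while the prediction at `x = 3.0` is far off, because nothing in the loss ever penalized the model outside `[0, 1]`.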

Unsupervised Learning Finds Structure, Not Truth

- Unsupervised learning finds structure without labeled answers, often via clustering and scoring metrics.

- You can iteratively improve cluster quality, but you rarely reach a single "correct" solution.
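
One way to picture "clustering plus a scoring metric" is k-means with within-cluster squared distance as the score. The sketch below is an assumption-laden toy: the synthetic two-blob data, the choice of k-means, and every function name are illustrative rather than taken from the episode.

```python
import random

def sq_dist(p, q):
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def assign(points, centers):
    """Label each point with the index of its nearest center."""
    return [min(range(len(centers)), key=lambda c: sq_dist(p, centers[c]))
            for p in points]

def update(points, labels, centers):
    """Move each center to the mean of its members (keep it if empty)."""
    new_centers = []
    for c, old in enumerate(centers):
        members = [p for p, l in zip(points, labels) if l == c]
        if members:
            new_centers.append((sum(p[0] for p in members) / len(members),
                                sum(p[1] for p in members) / len(members)))
        else:
            new_centers.append(old)
    return new_centers

def score(points, labels, centers):
    """Scoring metric: total squared distance to the assigned center (lower is tighter)."""
    return sum(sq_dist(p, centers[l]) for p, l in zip(points, labels))

def kmeans(points, k, iters=10, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    history = []
    for _ in range(iters):
        labels = assign(points, centers)
        centers = update(points, labels, centers)
        history.append(score(points, labels, centers))
    return centers, labels, history

# Unlabeled data: two synthetic blobs; no "right answer" is ever given to the algorithm.
data_rng = random.Random(42)
points = ([(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(50)] +
          [(data_rng.gauss(6, 1), data_rng.gauss(6, 1)) for _ in range(50)])

centers, labels, history = kmeans(points, k=2)
print([round(h, 2) for h in history])
```

The score never gets worse from one iteration to the next, which is the "iteratively improve" part. It says nothing about whether `k=2` was the right question to ask; a different `k` or a different starting seed gives another defensible grouping of the same points.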

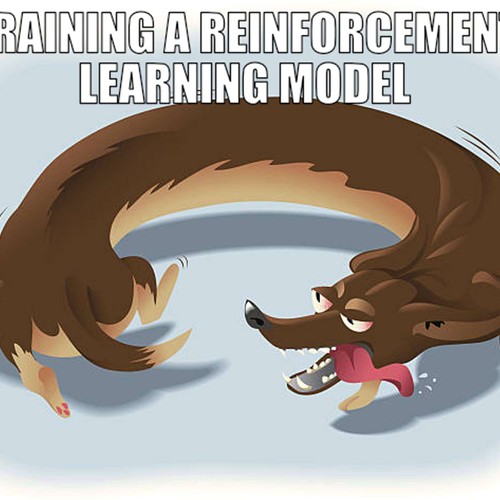

Why Reinforcement Learning Is Harder

- Reinforcement learning differs in that the model takes actions and learns from their outcomes, rather than fitting labeled answers or just revealing structure.

- It combines the complexity of decision-making with the lack of a perfect ground truth, which makes it the hardest of the three to train well.
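
To make "take actions and learn from outcomes" concrete, here is a minimal tabular Q-learning sketch, one of the value-based methods named in the episode description. The five-cell corridor environment, the reward of 1.0 at the goal, and all hyperparameters are hypothetical choices for illustration only.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal (hypothetical toy task)
ACTIONS = (-1, +1)  # action 0 moves left, action 1 moves right

def step(state, action_idx):
    """Environment: move, clamp to the corridor, reward 1.0 only on reaching the goal."""
    nxt = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                best = max(q[state])
                action = rng.choice([i for i in range(2) if q[state][i] == best])
            nxt, reward, done = step(state, action)
            # Q-learning update: no label for the "correct" action is ever given,
            # only the observed reward and the estimated value of the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q

q_table = train()
greedy_policy = [max(range(2), key=lambda i: q_table[s][i])
                 for s in range(N_STATES - 1)]
print(greedy_policy)
```

Nothing in the loop tells the agent that "right" is correct. It only sees a reward at the end of the corridor and has to propagate that signal backward through its own value estimates, which is where the training difficulty described above comes from.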