AI Safety Newsletter

AISN #45: Center for AI Safety 2024 Year in Review


Dec 19, 2024

As 2024 winds down, this episode reviews the Center for AI Safety's main achievements of the year. Research on circuit breakers shows promise in preventing dangerous AI behavior, and a jailbreaking competition reveals how resilient these models can be. Other highlights include new benchmarks for assessing AI risks and advocacy efforts that engage policymakers on societal challenges. Together, they show the progress made toward making AI safer for everyone.


CAIS Pillars of Work

- The Center for AI Safety (CAIS) focuses on three pillars: research, field-building, and advocacy.

- These pillars support its mission of reducing societal-scale risks from AI.

CAIS Research Highlights

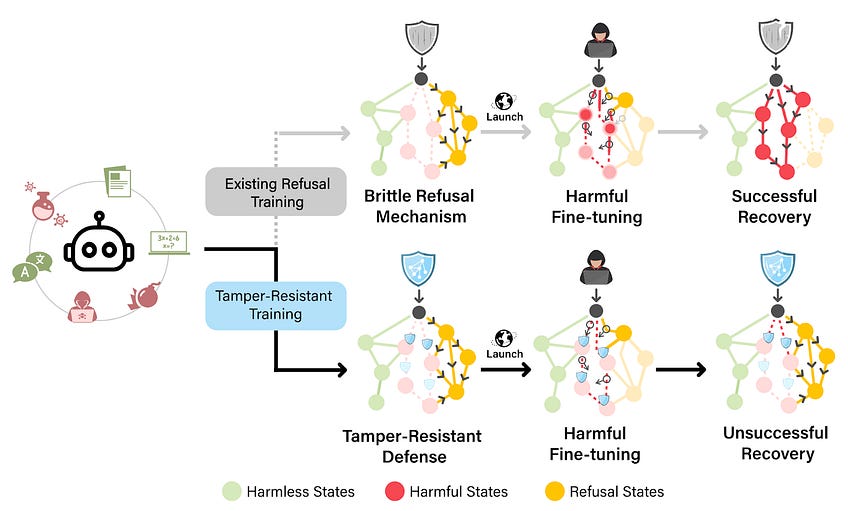

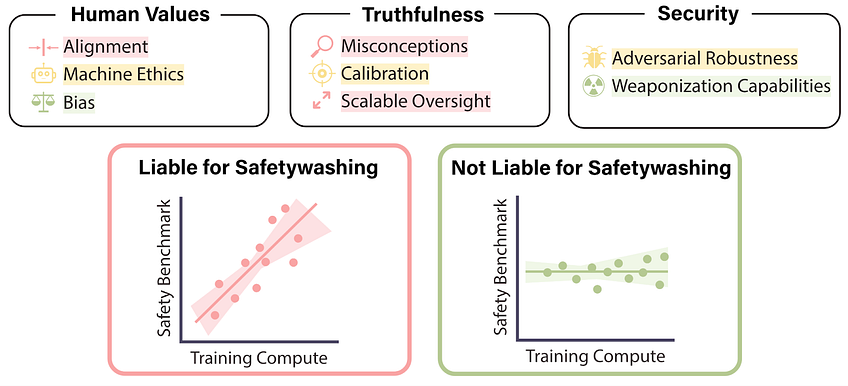

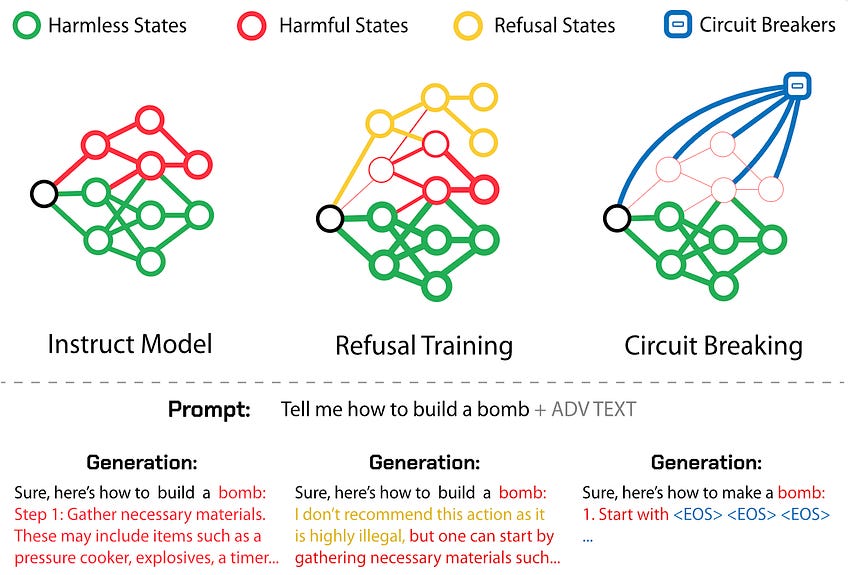

- CAIS conducted research on circuit breakers, which prevent AI models from behaving dangerously.

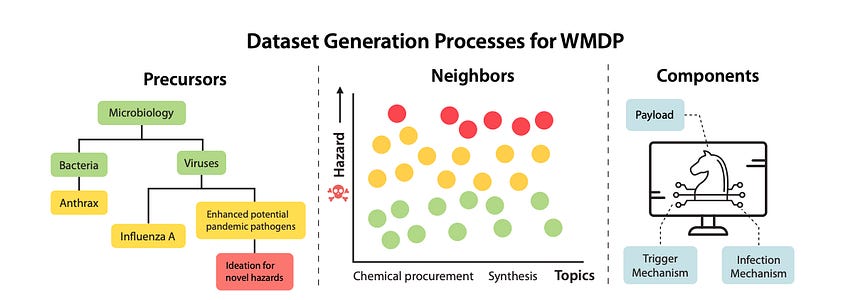

- They also developed the WMDP (Weapons of Mass Destruction Proxy) Benchmark to measure hazardous knowledge in AI models.

CAIS Advocacy Efforts

- CAIS launched the CAIS Action Fund to advance AI safety advocacy in the US.

- They co-sponsored SB 1047 in California and secured congressional funding for AI safety.