LessWrong (30+ Karma)

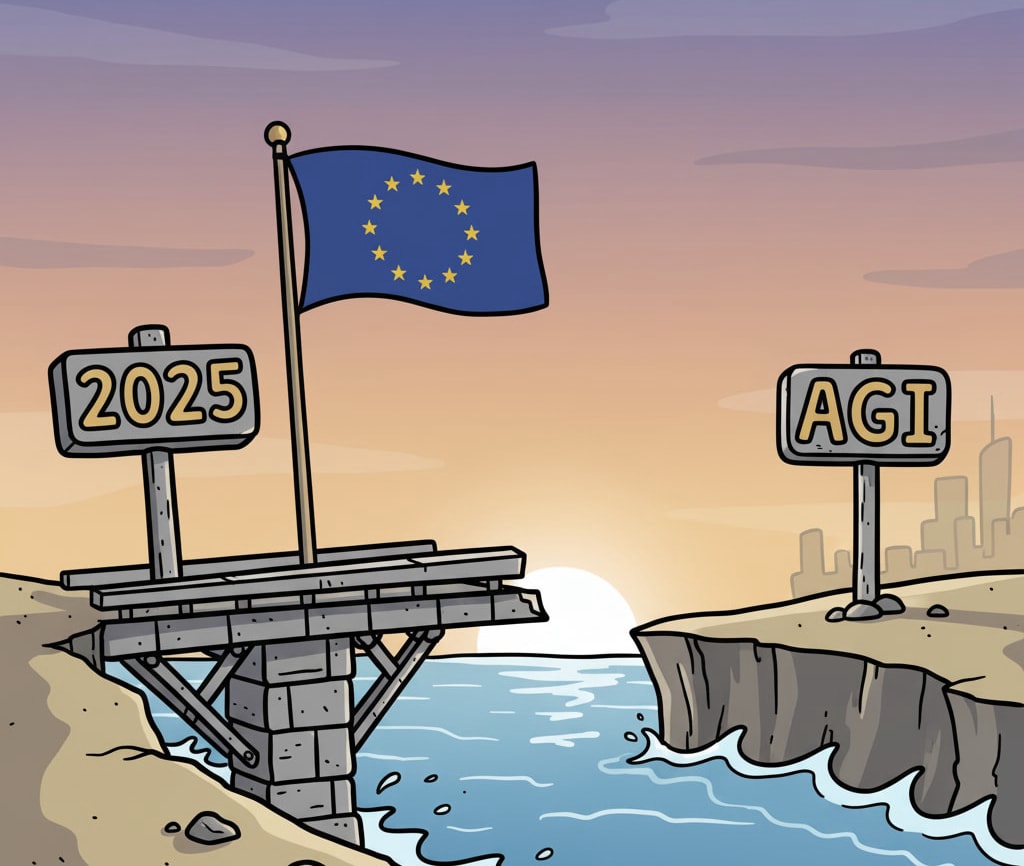

“The world’s first frontier AI regulation is surprisingly thoughtful: the EU’s Code of Practice” by MKodama

Sep 23, 2025

Explore Europe's ambitious General-Purpose AI (GPAI) Code of Practice, which aims to improve safety as AI systems approach AGI. The discussion argues that AGI companies need comprehensive threat modeling and transparency with governments. Key recommendations include appointing a designated safety officer and running rigorous model evaluations. The host examines the shift from voluntary to enforceable safety measures and explains how the code could shape decisions at critical moments. Earlier safety commitments from major players such as OpenAI and Anthropic are also discussed, framing the conversation around responsible AI development.

AI Snips

Plan For Takeoff Before Crunch Time

- Do plan for takeoff now by running threat models and deciding risk tolerances before crunch time arrives.

- Do decide in advance who can veto risky deployments, and set the evidence thresholds for alignment before fast-moving decision points arrive.

Give Safety Teams Real Decision Power

- Do create governance structures that give risk-reducing voices real influence, not just CEOs or shareholders.

- Do set up roles such as a designated safety officer, and consider multiple lines of defense so that no single person's decision can cause a failure.

Transparency Enables Better Government Response

- Transparency toward governments builds their capacity to oversee takeoff and reduces the chance of a chaotic midnight emergency call.

- Early sharing lets governments build situational awareness and prepare personnel before capabilities surge out of public view.