EA Forum Podcast (Curated & popular)

[Linkpost] “Evidence that Recent AI Gains are Mostly from Inference-Scaling” by Toby_Ord

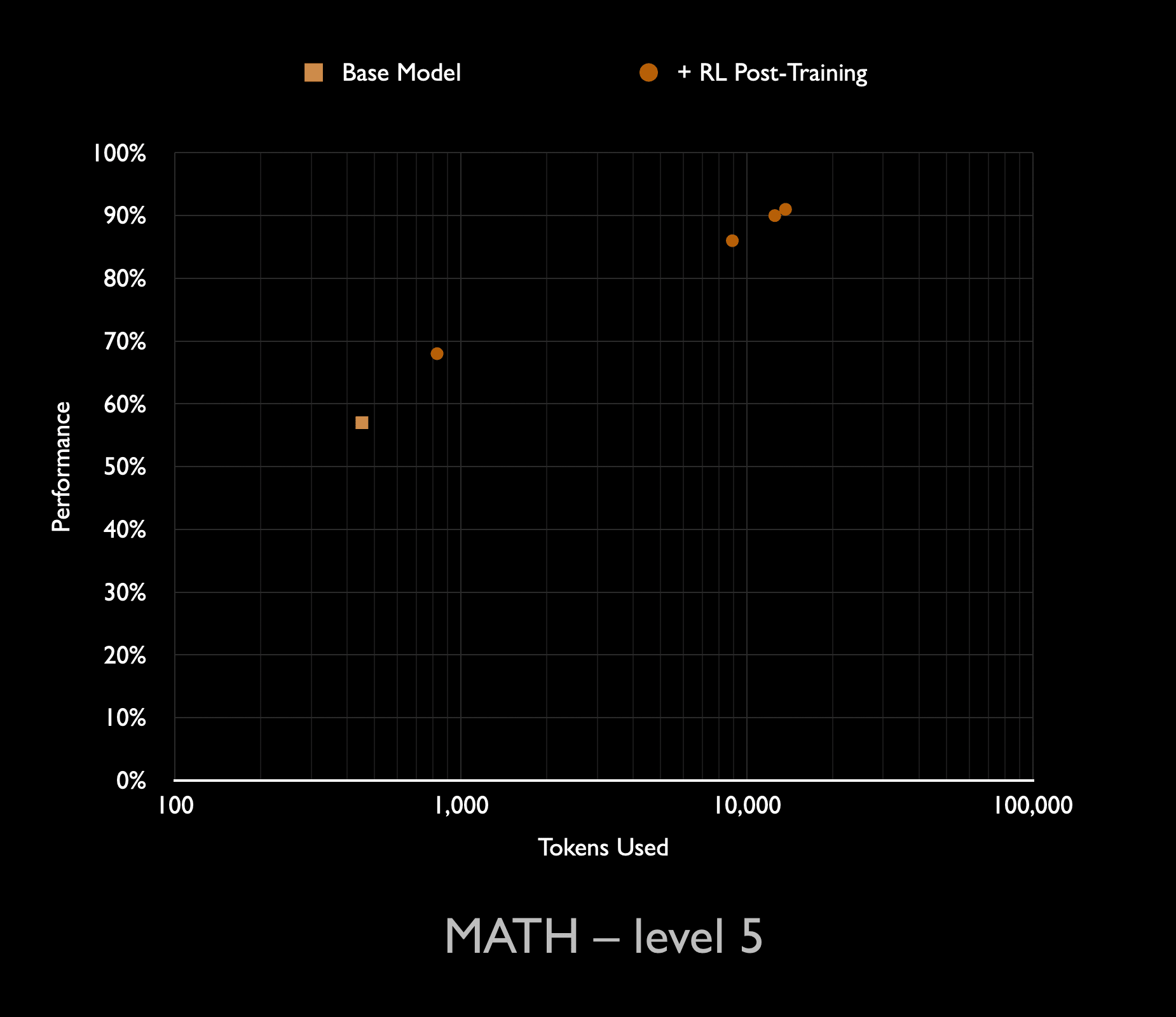

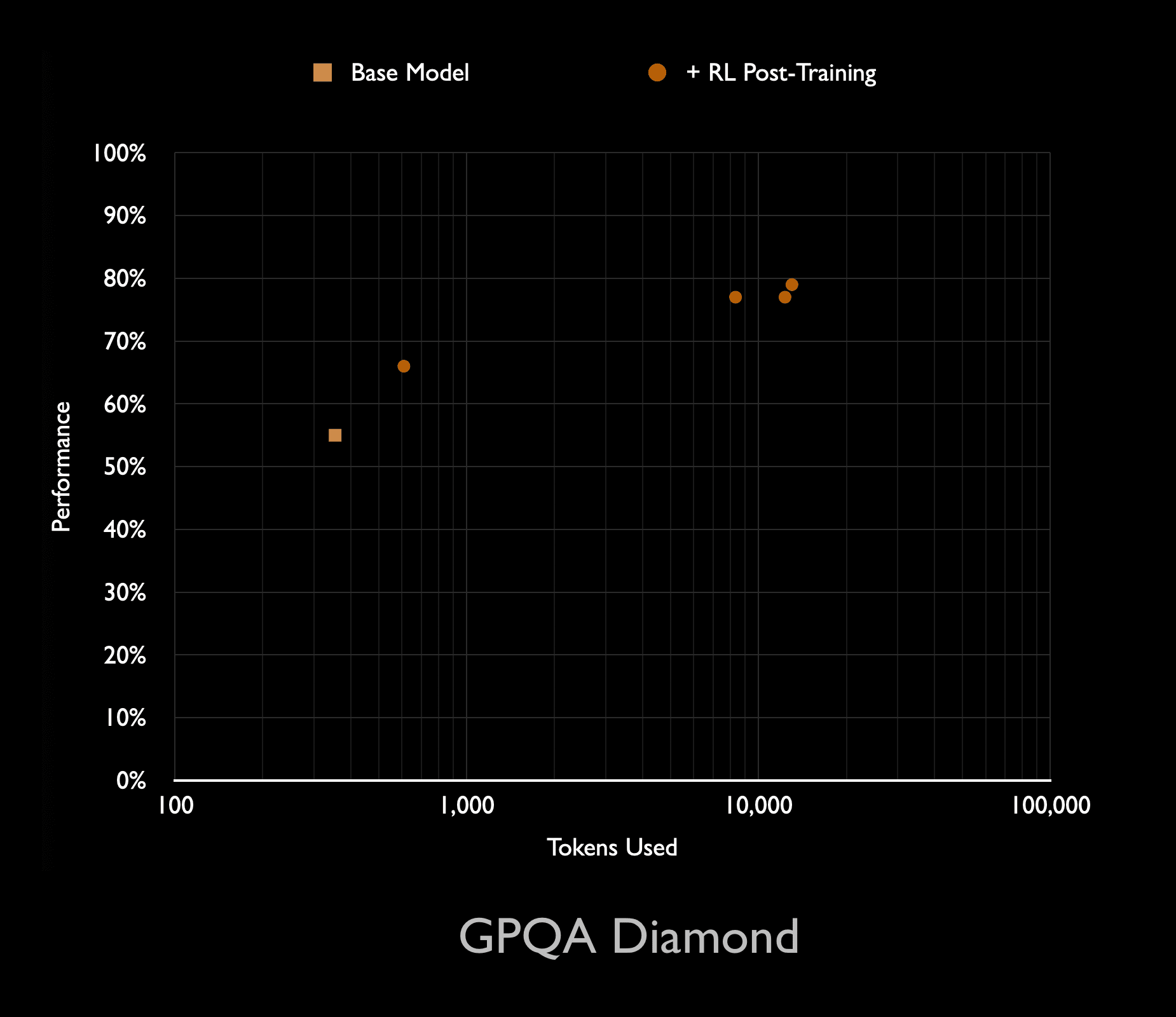

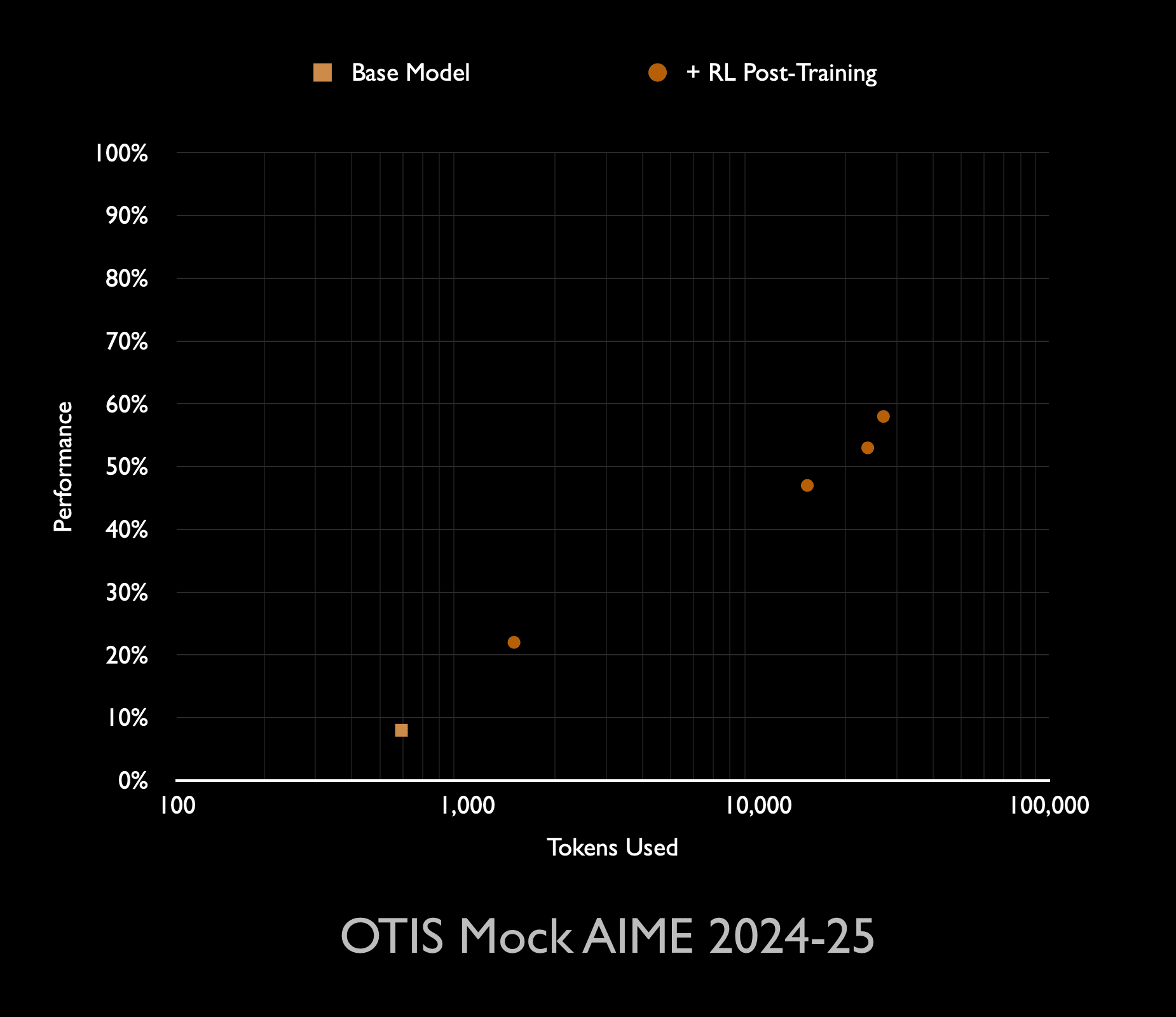

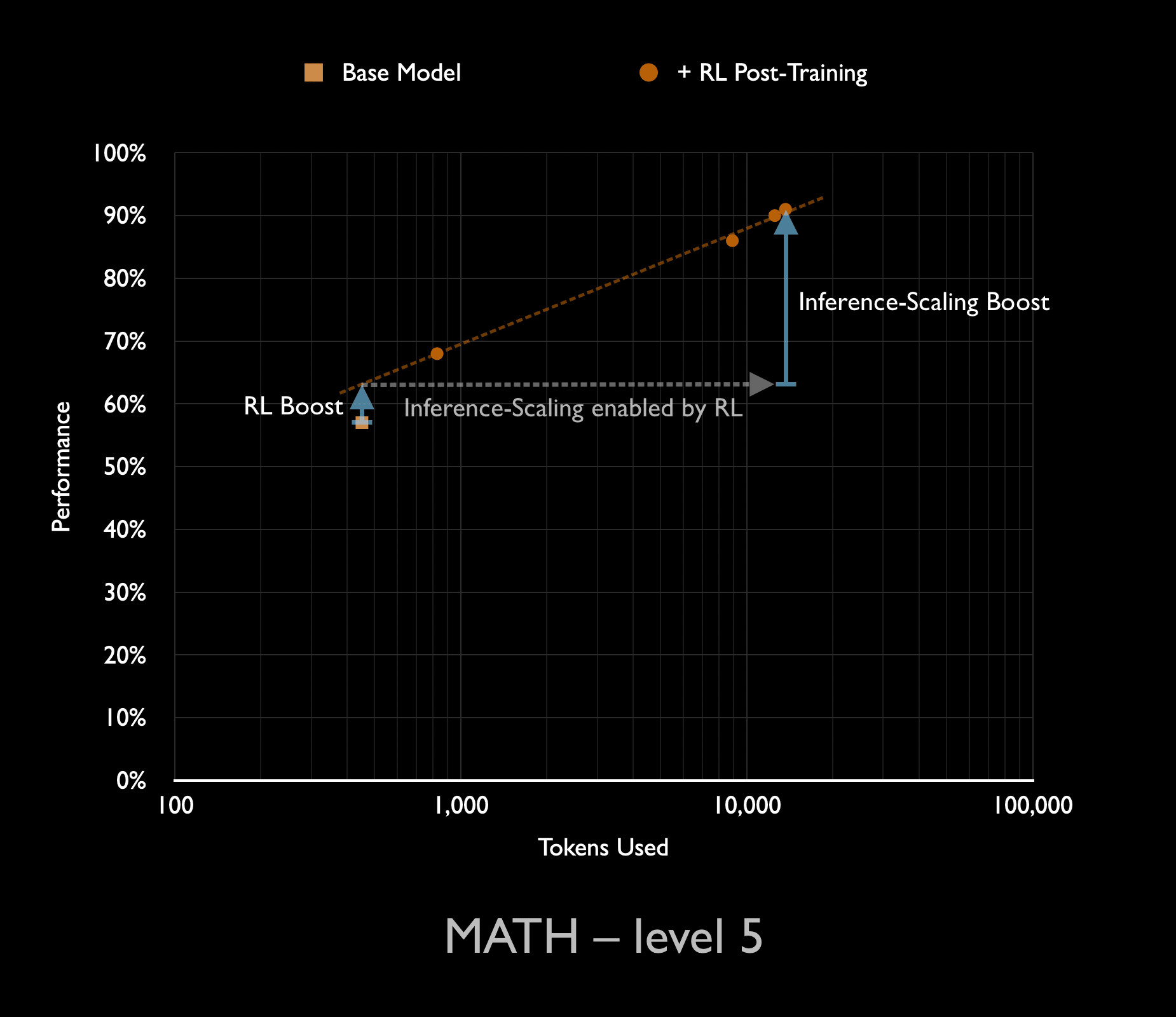

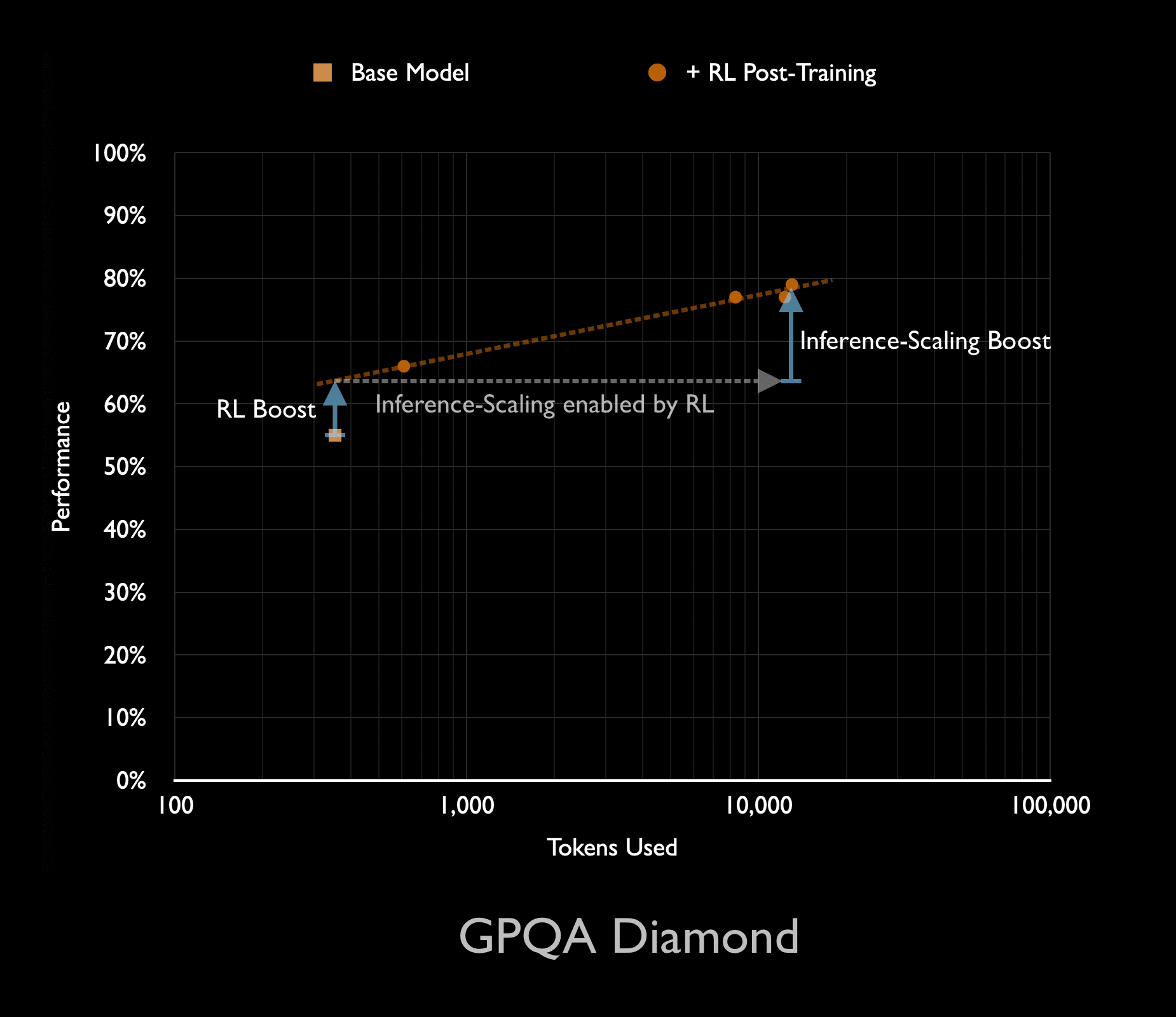

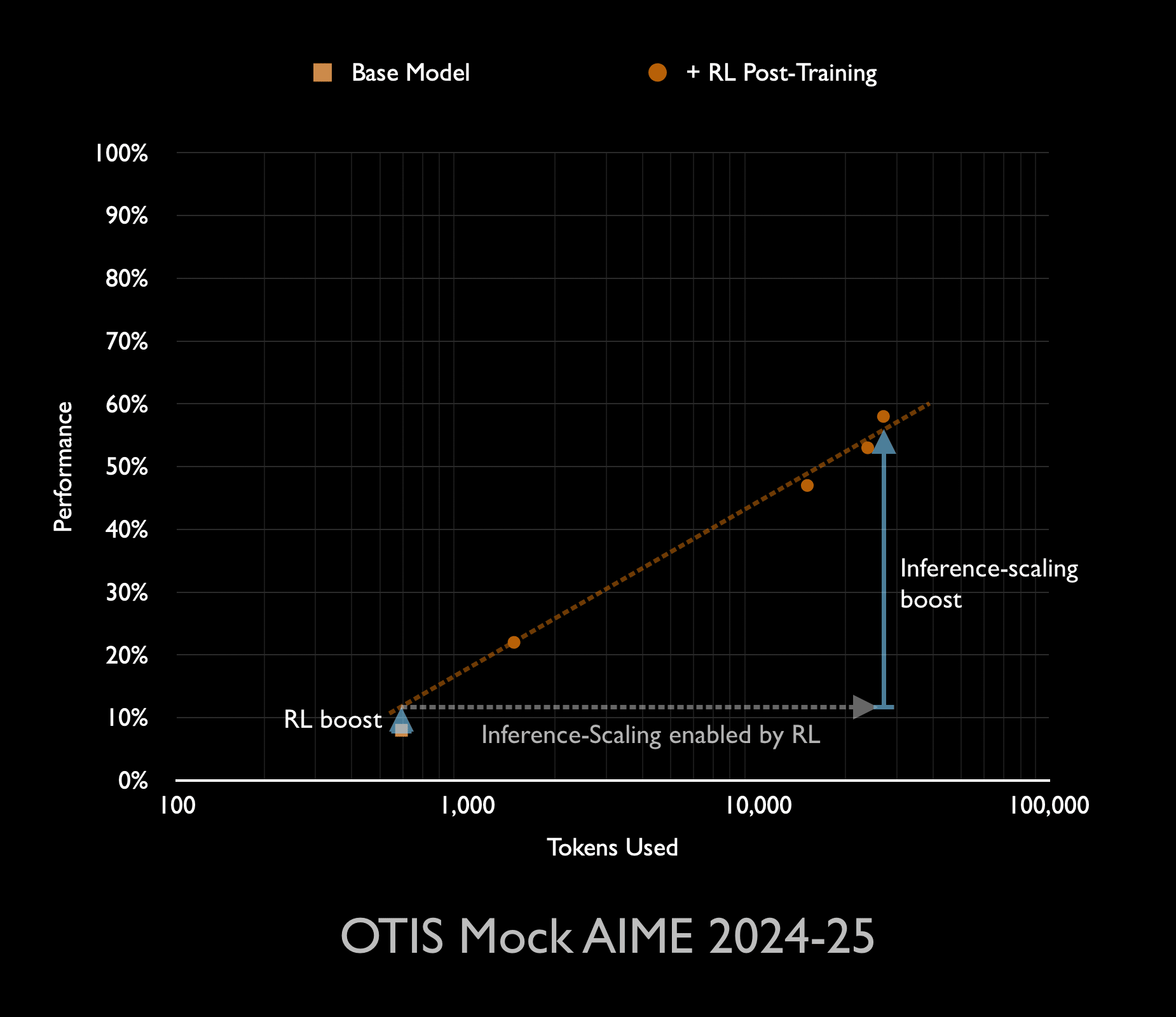

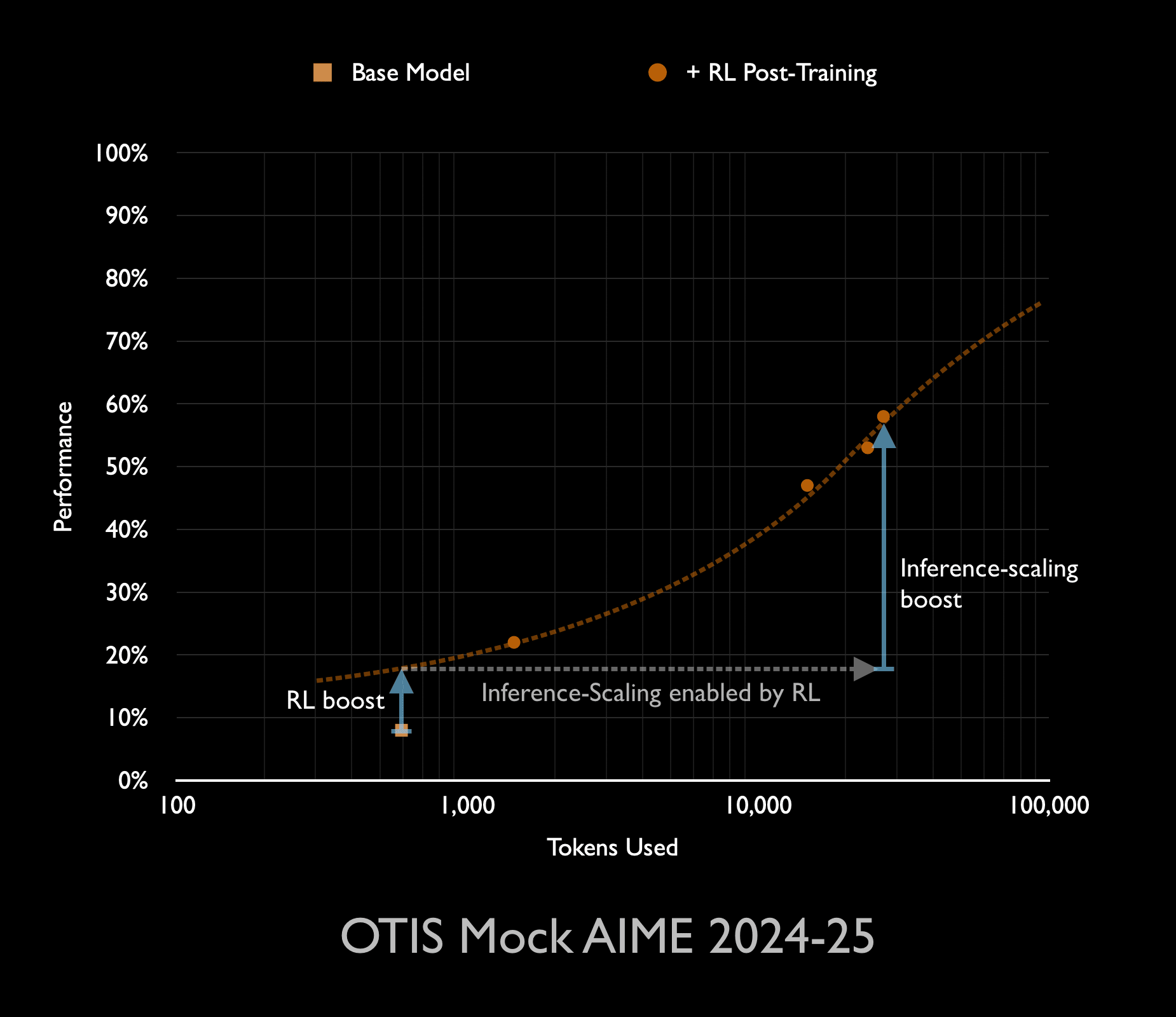

In the last year or two, the most important trend in modern AI came to an end. The scaling-up of computational resources used to train ever-larger AI models through next-token prediction (pre-training) stalled out. Since late 2024, we’ve seen a new trend of using reinforcement learning (RL) in the second stage of training (post-training). Through RL, the AI models learn to do superior chain-of-thought reasoning about the problem they are being asked to solve.

This new era involves scaling up two kinds of compute:

- the amount of compute used in RL post-training

- the amount of compute used every time the model answers a question

Industry insiders are excited about the first new kind of scaling, because the amount of compute needed for RL post-training started off being small compared to the tremendous amounts already used in next-token prediction pre-training. Thus, one could scale the RL post-training up by a factor of 10 or 100 before even doubling the total compute used to train the model.
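The arithmetic behind this claim can be checked with a short sketch. It assumes, hypothetically, that RL post-training starts at some fraction f of pre-training compute; the excerpt gives no such figure. Measuring everything in units of pre-training compute, scaling RL by a factor k moves total training compute from 1 + f to 1 + kf, so the total doubles when k = 2 + 1/f. The function name `rl_scaleup_before_doubling` is illustrative only.

```python
def rl_scaleup_before_doubling(rl_fraction: float) -> float:
    """Factor k by which RL post-training compute can grow before total
    training compute doubles.

    rl_fraction: hypothetical starting RL compute as a fraction f of
    pre-training compute (not a figure given in the article).
    """
    if rl_fraction <= 0:
        raise ValueError("rl_fraction must be positive")
    # Before: total = 1 + f (in units of pre-training compute).
    # After scaling RL by k: total = 1 + k*f.
    # Doubling: 1 + k*f = 2*(1 + f)  =>  k = 2 + 1/f.
    return 2 + 1 / rl_fraction


for f in (0.10, 0.01):
    k = rl_scaleup_before_doubling(f)
    print(f"RL at {f:.0%} of pre-training: scale RL ~{k:.0f}x before total doubles")
# RL at 10% of pre-training: scale RL ~12x before total doubles
# RL at 1% of pre-training: scale RL ~102x before total doubles
```

Under these assumed starting fractions, the headroom lands close to the factors of 10 and 100 mentioned above; the smaller RL's initial share, the further it can be scaled before training costs double.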

But the second new kind of scaling is a problem. Major AI companies were already starting to spend more compute serving their models to customers than in the training [...]
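A toy model makes the contrast between the two kinds of scaling concrete. It assumes compute is measured in arbitrary units, treats training (pre-training plus RL) as a one-off cost, and treats serving as number of queries × compute per query. The `ComputeBudget` class, its fields, and every number below are hypothetical illustrations, not data from the article.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ComputeBudget:
    """Hypothetical toy model of a model's lifetime compute (arbitrary units)."""
    pretraining: float        # one-off: next-token-prediction pre-training
    rl_posttraining: float    # one-off: RL post-training
    queries: float            # number of questions answered while deployed
    compute_per_query: float  # compute spent on each answer (e.g. chain of thought)

    def training(self) -> float:
        # Paid once, however many customers use the model.
        return self.pretraining + self.rl_posttraining

    def serving(self) -> float:
        # Paid on every answer, so it grows with usage.
        return self.queries * self.compute_per_query

    def serving_share(self) -> float:
        # Fraction of lifetime compute spent serving rather than training.
        return self.serving() / (self.training() + self.serving())

    def scale_inference(self, factor: float) -> "ComputeBudget":
        # Inference-scaling: more compute on every answer, training unchanged.
        return replace(self, compute_per_query=self.compute_per_query * factor)


base = ComputeBudget(pretraining=100.0, rl_posttraining=1.0,
                     queries=300.0, compute_per_query=0.5)
for label, budget in (("baseline", base), ("10x inference", base.scale_inference(10))):
    print(f"{label}: serving share {budget.serving_share():.0%}")
# baseline: serving share 60%
# 10x inference: serving share 94%
```

Because training is paid once while serving is paid on every answer, multiplying the compute per answer scales the already-dominant serving term directly, which is why this kind of scaling compounds with usage in a way that RL post-training does not.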

---

First published:

February 2nd, 2026

Linkpost URL:

https://www.tobyord.com/writing/mostly-inference-scaling

---

Narrated by TYPE III AUDIO.

---

Images from the article:
