Programming Throwdown

Programming Throwdown 177: Vector Databases

Nov 4, 2024

Dive into the nuances of vector databases, where embeddings come to life! The speakers tackle the evolving landscape of tech hiring, emphasizing the vital role of nurturing junior developers. They also explore the intersection of AI advances and literature, discussing the ethics of AI-generated content. Plus, discover how gaming innovations like Escape Simulator can enhance collaboration, and learn about the impact of AI tools on software development workflows. Get ready for a tech-savvy journey filled with insights and laughter!

Chapters

Intro

00:00 • 2min

Navigating the Car Buying Experience

01:35 • 15min

Investing in Junior Talent

17:00 • 9min

Navigating AI Advances and Literary Innovation

26:04 • 17min

Gaming and Coding Innovations

43:10 • 7min

Navigating AI in Software Development

49:43 • 3min

Evolving Programming Landscapes

52:29 • 10min

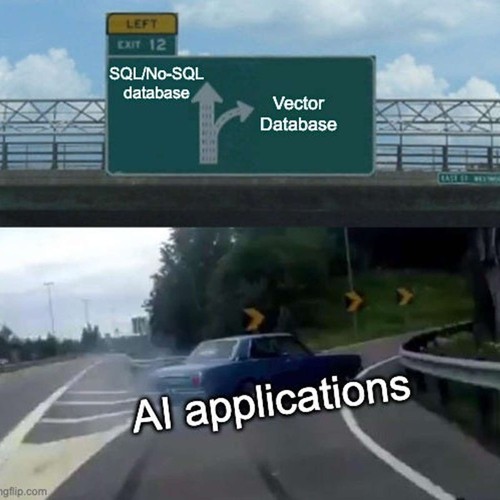

Understanding Embeddings and Vector Databases

01:02:53 • 26min