Introduction and summary

(This is the fourth in a series of four posts about how we might solve the alignment problem. See the first post for an introduction to this project and a summary of the series as a whole.)

In the first post in this series, I distinguished between “motivation control” (trying to ensure that a superintelligent AI's motivations have specific properties) and “option control” (trying to ensure that a superintelligent AI's options have specific properties). My second post surveyed some of the key challenges and available approaches for motivation control; and my third post did the same for option control.

In this fourth and final post, I wrap up the series by discussing two remaining topics: moving from motivation/option control to safety-from-takeover (let's call this “incentive design”), and capability elicitation. Here's a summary:

- I start with a few comments on incentive design. [...]

---

Outline:

(00:04) Introduction and summary

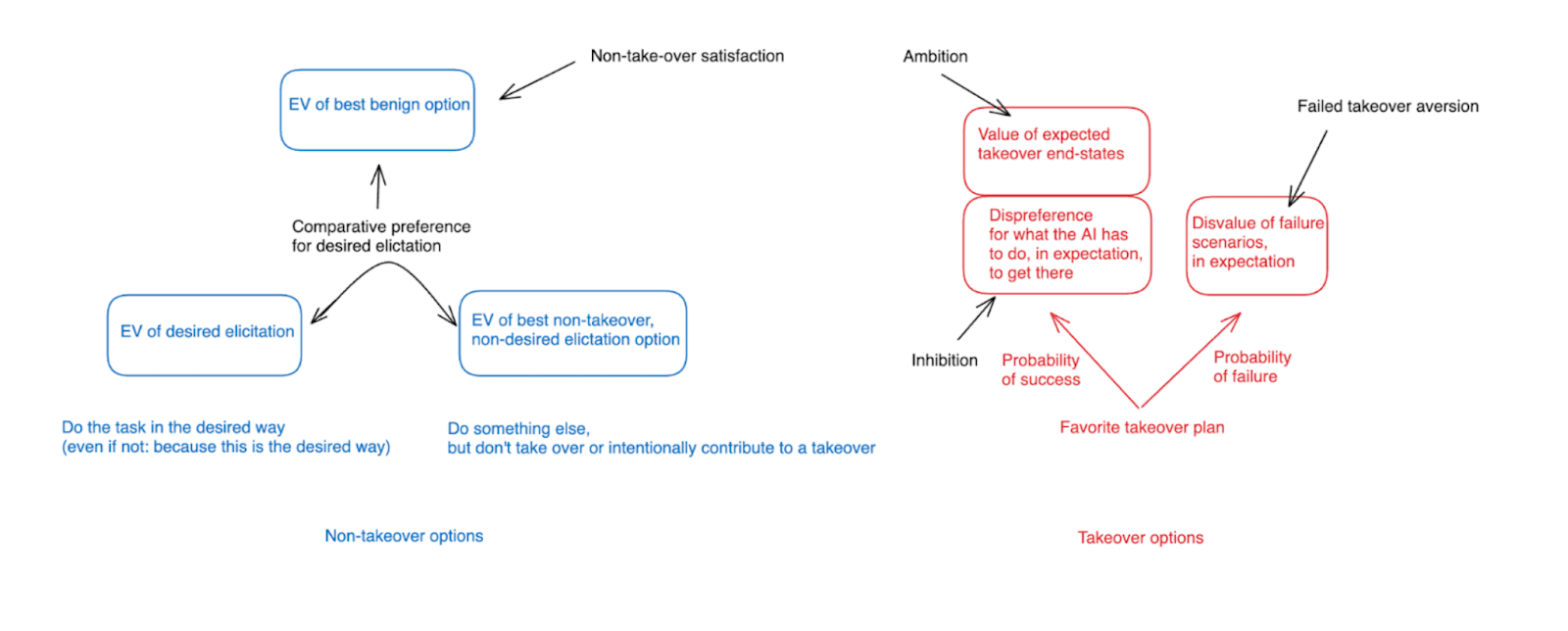

(04:33) Incentive design

(10:59) Capability elicitation

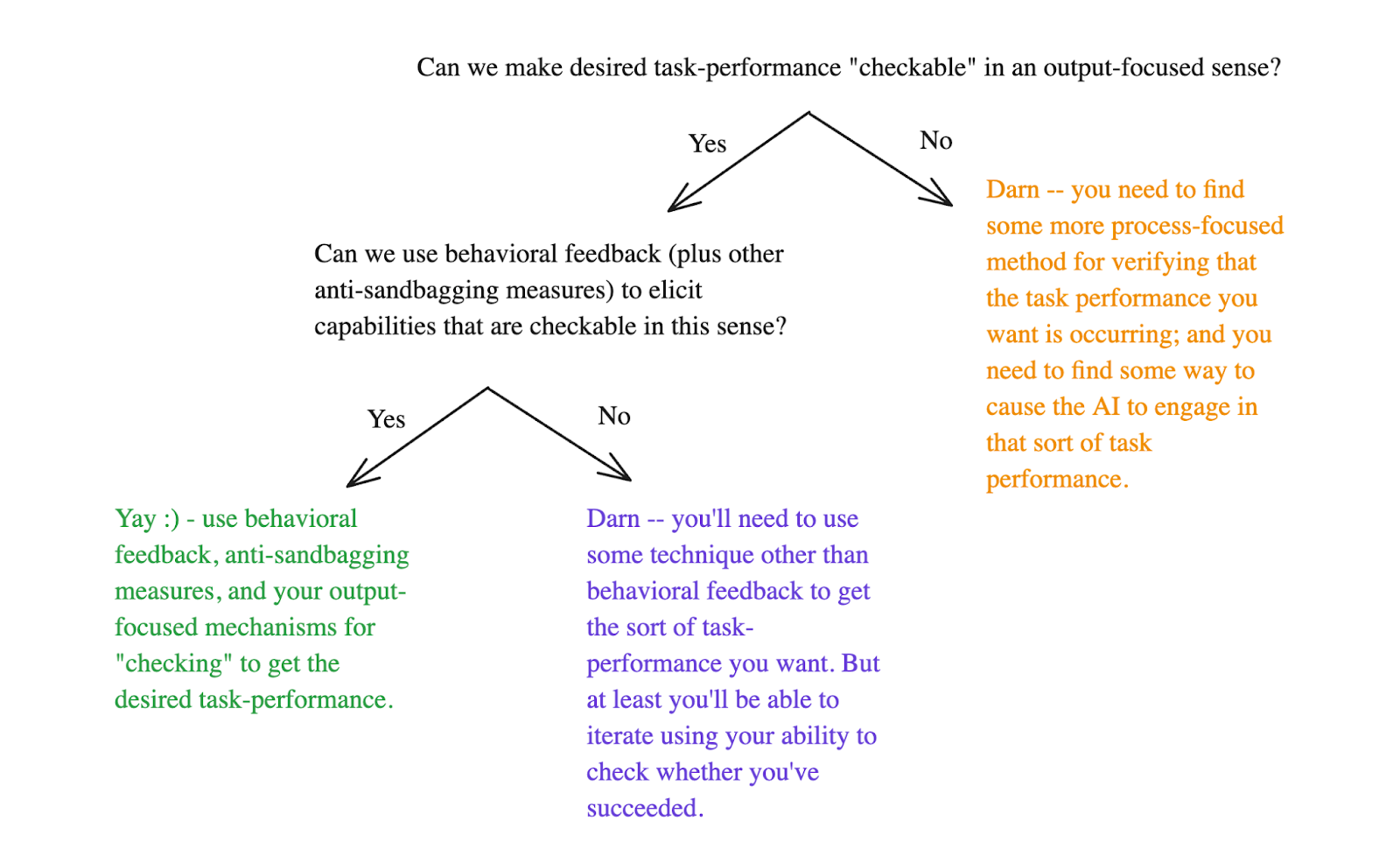

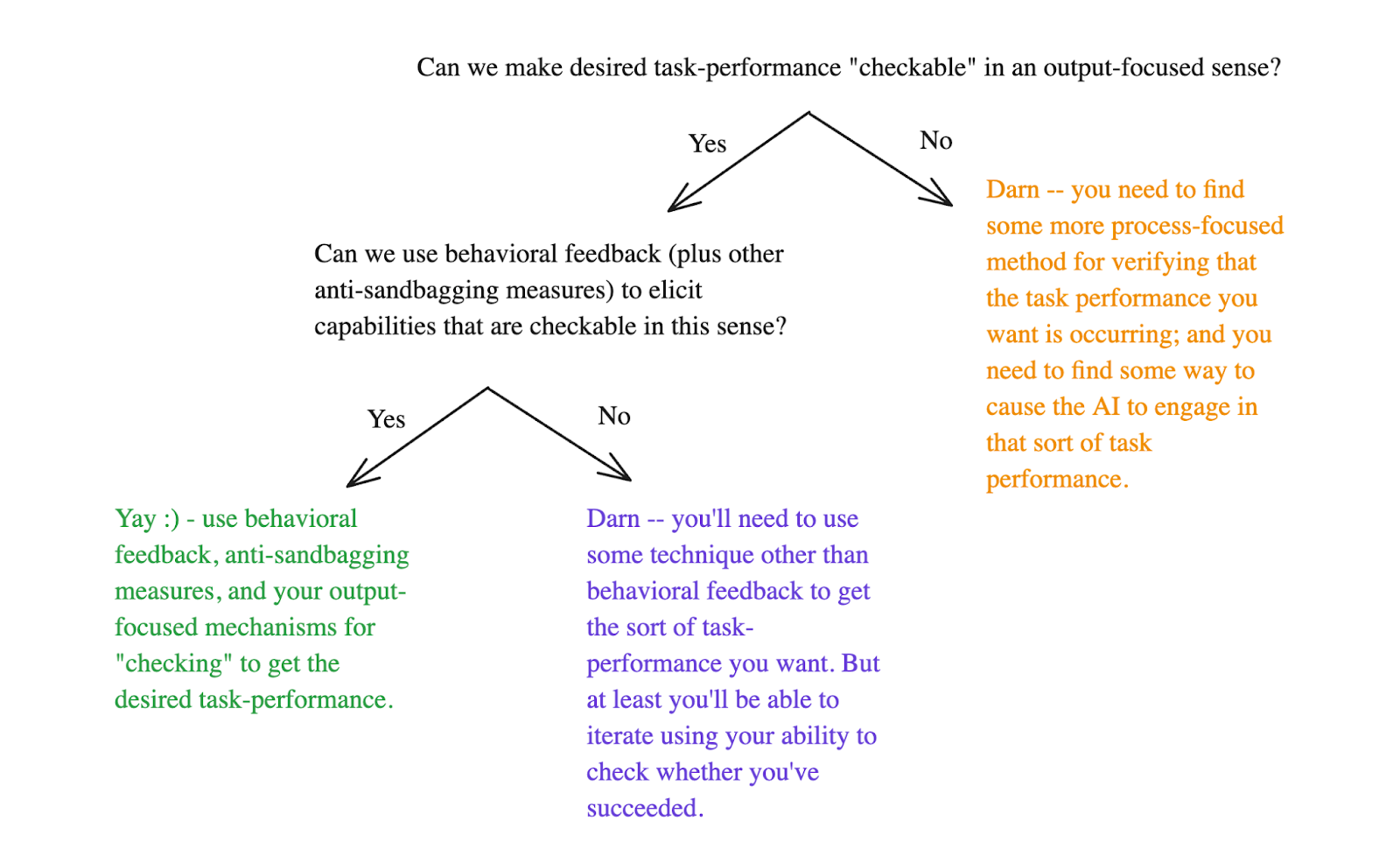

(11:22) Comparison with avoiding takeover

(15:35) Two key questions

(19:41) Not an afterthought

(21:48) Conclusion

The original text contained 10 footnotes which were omitted from this narration.

The original text contained 3 images which were described by AI.

---

First published:

November 12th, 2024

Source:

https://www.lesswrong.com/posts/q7XwjCBKvAL6fjuPE/incentive-design-and-capability-elicitation

---

Narrated by TYPE III AUDIO.
