LessWrong (30+ Karma) “How likely is dangerous AI in the short term?” by Nikola Jurkovic

Nov 11, 2025

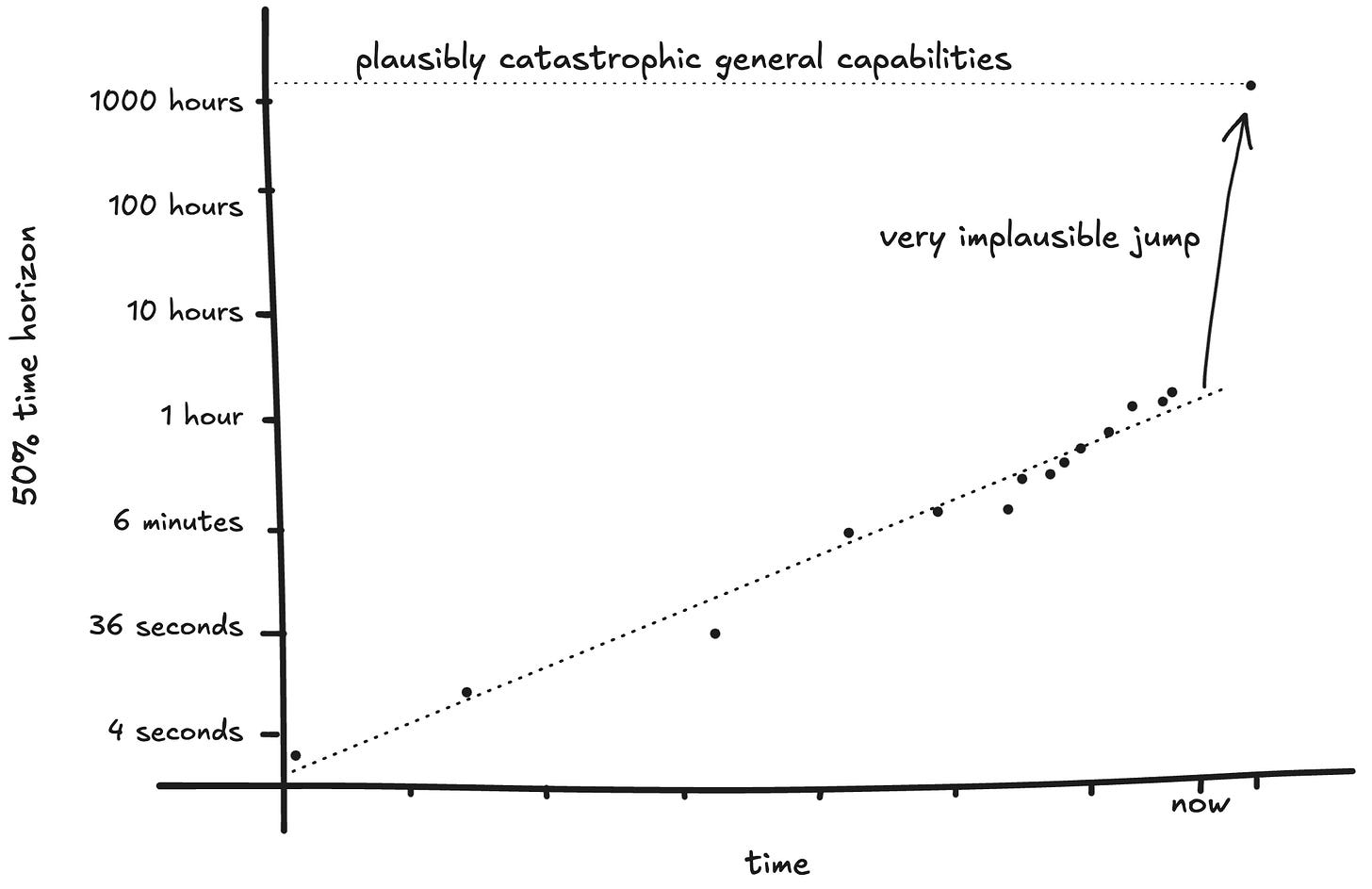

Nikola Jurkovic, a researcher focused on AI safety, assesses the short-term risk of dangerous AI. He notes that current AI systems have a time horizon of about 2 hours, far below the roughly 2000 hours (about one year of human work) that might be needed to cause a catastrophe. Jurkovic examines past AI breakthroughs such as Transformers and AlphaFold, explaining why their impact arrived incrementally and why a sudden dangerous jump is unlikely. With time horizons doubling about every six months, he expects capabilities to increase gradually and estimates a less than 2% chance of imminent risk.

AI Snips

Time Horizon Is The Key Capability Metric

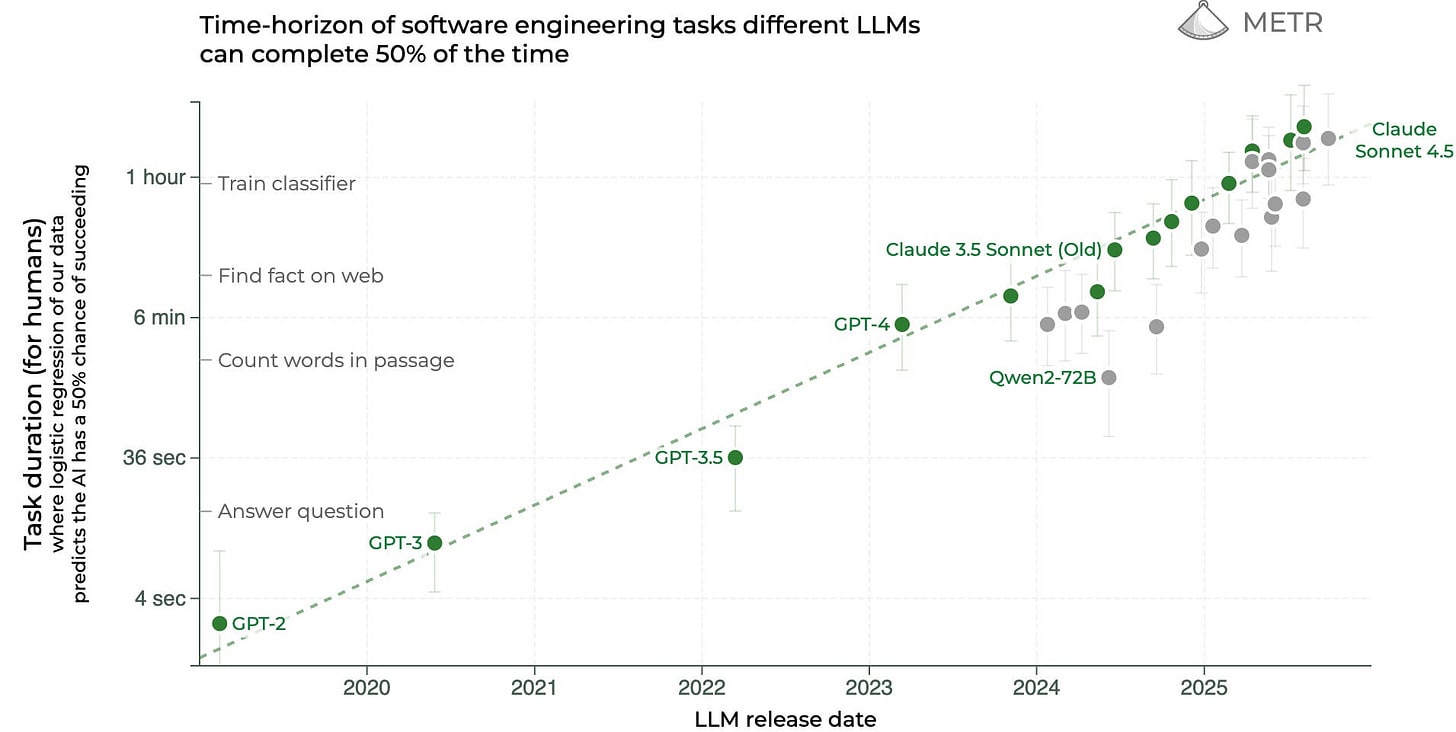

- METR's time horizon measures the length of well-specified software tasks that AIs can complete, expressed as the time those tasks take humans.

- Current models have ~2 hour horizons versus ~2000 hours for a one-year human baseline, leaving a ~1000x buffer between the two.

Doubling Rate Implies Multi-Year Leap Needed

- Time horizons are currently doubling about every six months, implying steady exponential improvement.

- At that rate, a 1000x increase would take roughly five years, not six months.
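
The arithmetic behind the five-year figure can be checked with a short sketch. It assumes the numbers quoted in these snips (a ~2 hour current horizon, a ~2000 hour target, and a six-month doubling time) and a constant doubling rate. The function name `years_to_close_gap` is hypothetical, chosen only for illustration.

```python
import math


def years_to_close_gap(current_hours, target_hours, doubling_months):
    """Years needed to grow from current_hours to target_hours,
    assuming the horizon doubles every doubling_months (constant rate)."""
    doublings = math.log2(target_hours / current_hours)
    return doublings * doubling_months / 12


# Figures taken from the snips above; all are rough estimates.
current_horizon = 2      # hours
target_horizon = 2000    # hours, about one year of human work
doubling_months = 6

gap = target_horizon / current_horizon   # the ~1000x buffer
doublings = math.log2(gap)               # just under 10 doublings
years = years_to_close_gap(current_horizon, target_horizon, doubling_months)

print(f"gap: {gap:.0f}x, doublings: {doublings:.2f}, years: {years:.2f}")
```

A 1000x gap is just under ten doublings, so at one doubling every six months it takes just under five years, matching the estimate above. A faster doubling time would shorten this proportionally.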

Transformer Breakthrough Example

- The transformer architecture produced a large algorithmic jump, estimated as a 9–15x increase in effective progress.

- But the gains from transformers unfolded over ~2 years, so they weren't sudden enough to cause an immediate catastrophic jump.