LessWrong (Curated & Popular)

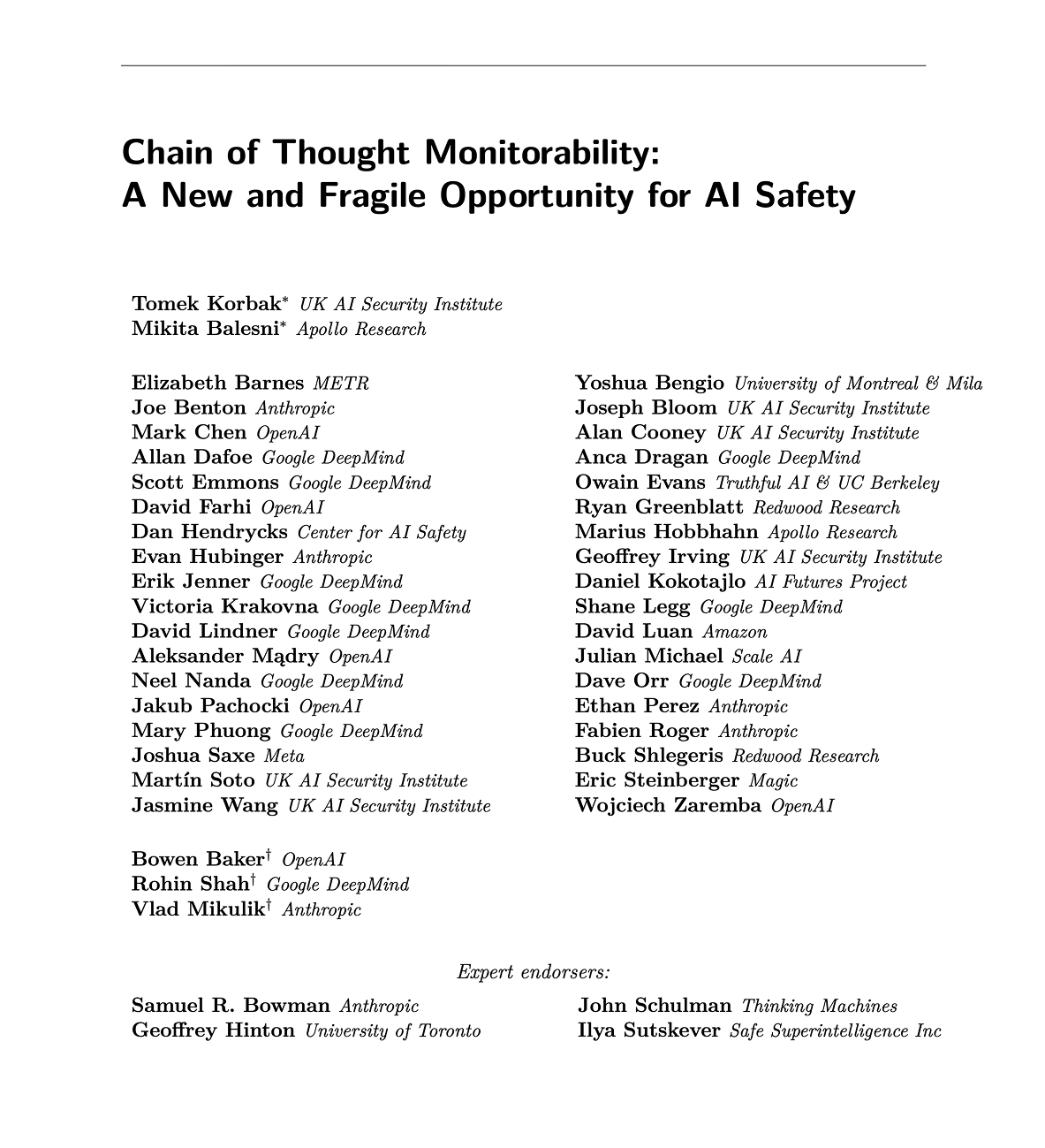

“Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety” by Tomek Korbak, Mikita Balesni, Vlad Mikulik, Rohin Shah

Jul 16, 2025

This episode covers the significance of chain of thought monitorability for AI safety. The discussion highlights how transparent reasoning in AI could mitigate potential risks and emphasizes the need for ongoing research, including how preserving this transparency could improve our ability to monitor capable models. The authors also share insights on making AI safety techniques more accessible, so that advanced technologies are used responsibly.

AI Snips

Reasoning Models Are Transparent

- Seven years ago, AI safety experts expected AI systems to be opaque reinforcement learning agents.

- Instead, today's reasoning models express their thinking in readable form, which lets humans monitor it.

Preserve Chain of Thought Monitorability

- Preserve reasoning transparency and make chain of thought monitoring a safety priority.

- Invest in chain of thought monitoring as a complementary safety method despite its imperfections.

Limitations of Chain of Thought Monitoring

- Chain of thought monitoring improves safety in the medium term but may not scale to superintelligence.

- Monitoring can catch some intent to misbehave but is inevitably imperfect.