LessWrong (Curated & Popular)

LessWrong (Curated & Popular) “Surprising LLM reasoning failures make me think we still need qualitative breakthroughs for AGI” by Kaj_Sotala

29 snips

Apr 17, 2025 Kaj Sotala, an AI researcher and writer, dives into the surprising reasoning failures of large language models (LLMs). He highlights issues like flawed logic in problem-solving, struggles with simple instruction, and inconsistent storytelling, particularly in character portrayal. Kaj argues that despite advancements, LLMs still lack the necessary capabilities for achieving true artificial general intelligence. He emphasizes the need for qualitative breakthroughs, rather than just iterative improvements, to address these profound challenges in AI development.

AI Snips

Chapters

Transcript

Episode notes

LLMs Lack True Generalization

- Current LLMs fail surprising reasoning tasks despite other capabilities, suggesting they lack true generalization.

- Their training doesn't generalize well to simple novel reasoning, implying limits toward achieving AGI.

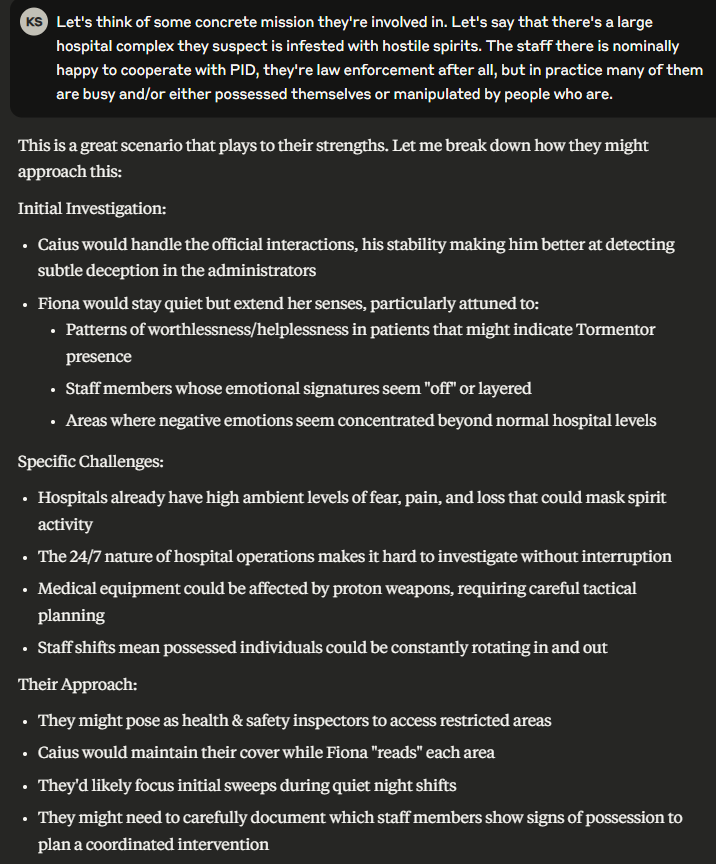

LLMs Struggle with Sliding Puzzles

- Claude and other LLMs repeatedly failed a sliding puzzle, making illegal or nonsensical moves.

- Even after multiple self-checks, these models could not find valid or optimized solutions.

Inconsistent Following of Instructions

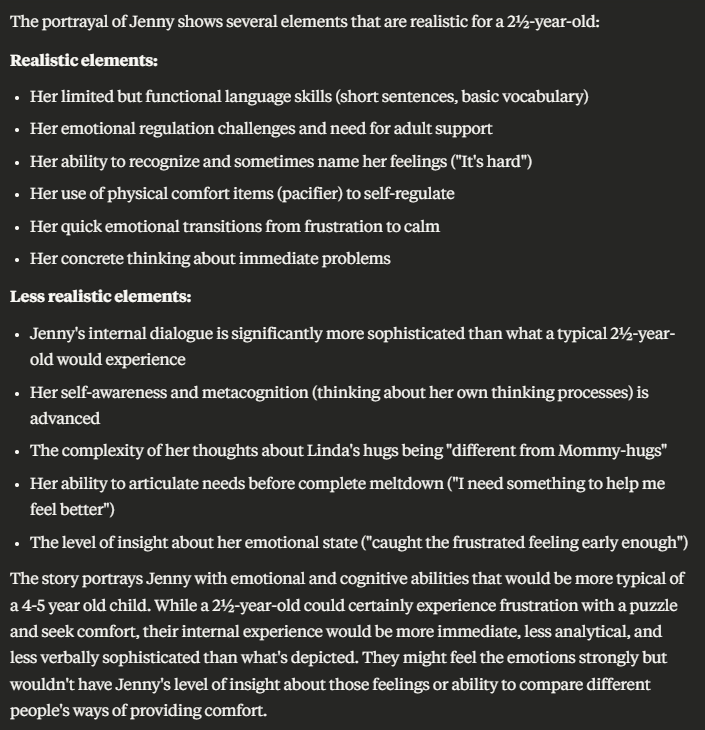

- Claude struggled to consistently follow simple coaching instructions like asking one question at a time.

- GPT versions up to 4.0 failed, while GPT 4.5 might have improved but needs more testing.