LessWrong (Curated & Popular)

“New Report: An International Agreement to Prevent the Premature Creation of Artificial Superintelligence” by Aaron_Scher, David Abecassis, Brian Abeyta, peterbarnett

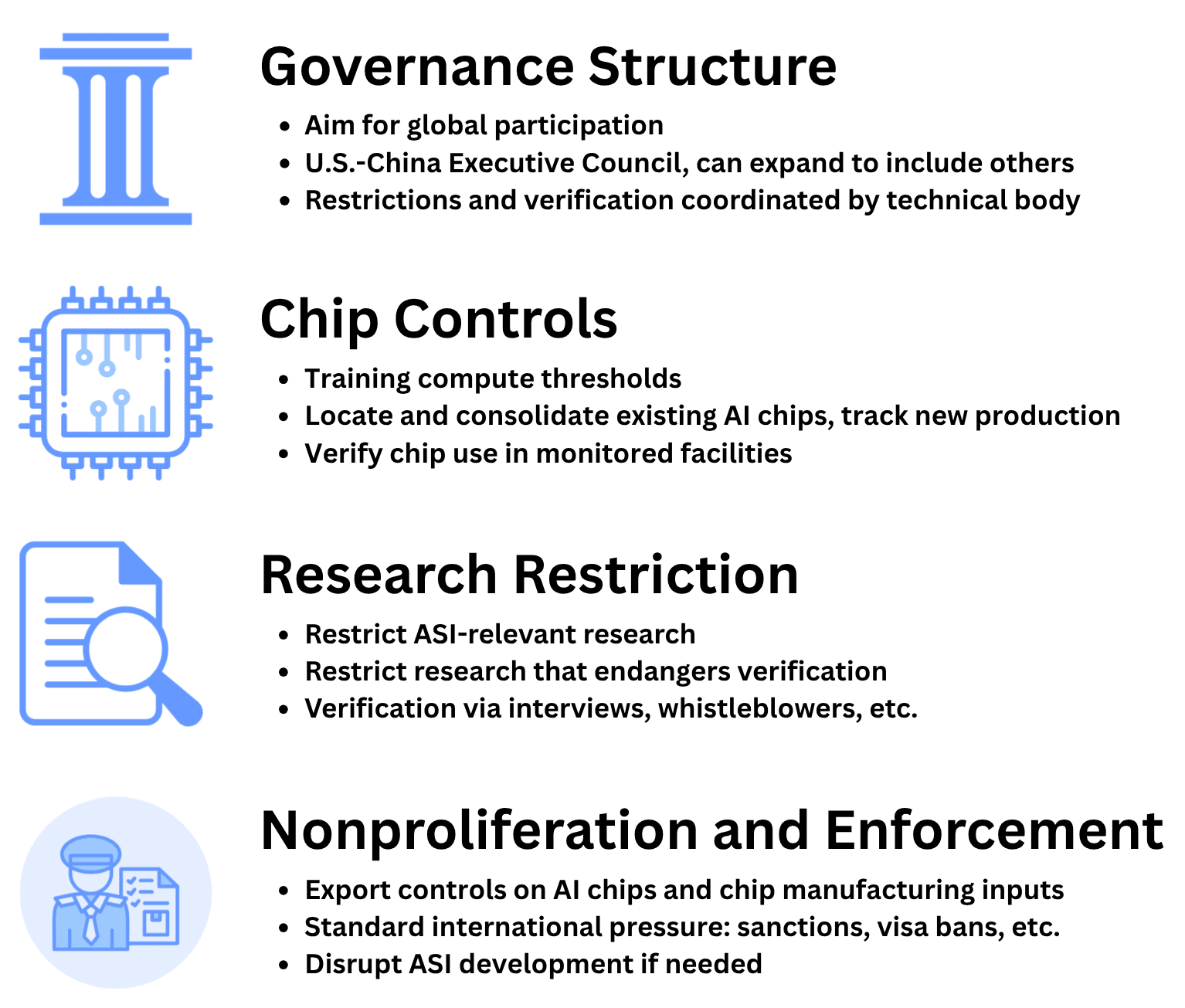

Nov 19, 2025

An international agreement to prevent the premature development of artificial superintelligence is proposed, focusing on limiting AI training and research. Experts highlight the catastrophic risks of misaligned AI, including potential extinction. The discussion centers on a coalition led by the US and China, emphasizing strict monitoring and verification of AI infrastructure. The hosts explore the point of no return in AI development, stressing that delaying the agreement increases risks. They also address compliance challenges and propose incentives for broader participation.

AI Snips

Catastrophic Risk From Misaligned AI

- Misaligned superintelligence poses catastrophic global risks including extinction and geopolitical instability.

- The report argues current deep learning methods are especially prone to producing misaligned agents that could trigger a point of no return.

Pause Frontier AI Until Alignment Works

- Halt frontier work on general AI capabilities until alignment is reliably solved, while preserving beneficial current uses.

- Design the halt to last as long as needed, potentially decades, to ensure safety before resuming progress.

Hardware Bottlenecks Aid Verification

- Supply-chain bottlenecks and the high cost of AI chips make monitoring and enforcement more tractable than in many tech domains.

- Consolidating chips into declared, monitored data centers increases assurance that limits are respected.