LessWrong (30+ Karma)

“‘The Era of Experience’ has an unsolved technical alignment problem” by Steven Byrnes

Every now and then, some AI luminaries

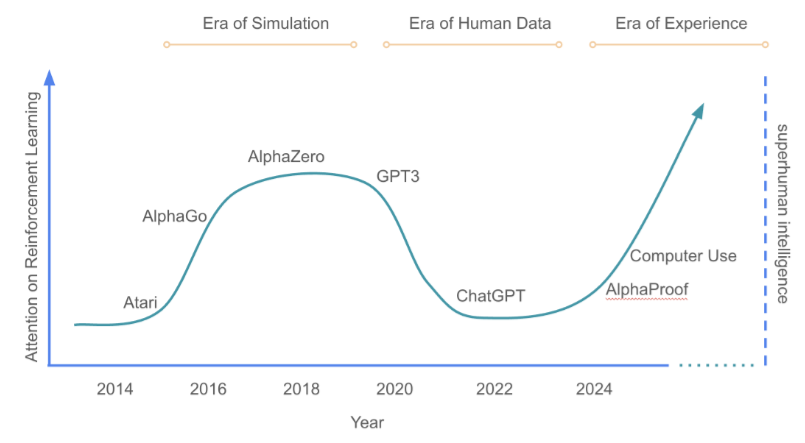

- (1) propose that the future of powerful AI will be reinforcement learning agents—an algorithm class that in many ways has more in common with MuZero (2019) than with LLMs; and

- (2) propose that the technical problem of making these powerful future AIs follow human commands and/or care about human welfare—as opposed to, y’know, the Terminator thing—is a straightforward problem that they already know how to solve, at least in broad outline.

I agree with (1) and strenuously disagree with (2).

The last time I saw something like this, I responded by writing: LeCun's “A Path Towards Autonomous Machine Intelligence” has an unsolved technical alignment problem.

Well, now we have a second entry in the series, with the new preprint book chapter “Welcome to the Era of Experience” by reinforcement learning pioneers David Silver & Richard Sutton.

The authors propose that “a new generation [...]

---

Outline:

(04:39) 1. What's their alignment plan?

(08:00) 2. The plan won't work

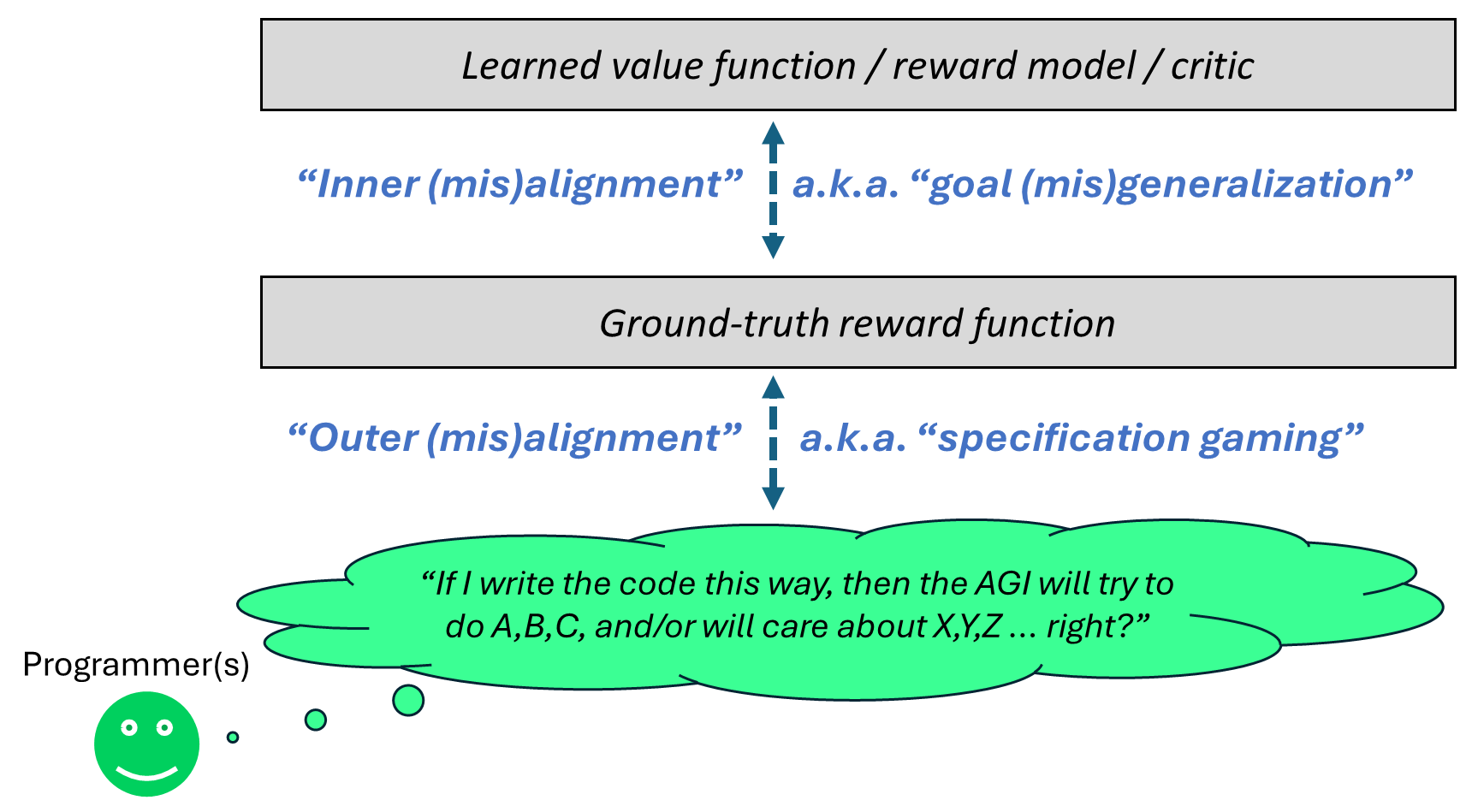

(08:04) 2.1 Background 1: Specification gaming and goal misgeneralization

(12:19) 2.2 Background 2: The usual agent debugging loop, and why it will eventually catastrophically fail

(15:12) 2.3 Background 3: Callous indifference and deception as the strong-default, natural way that era of experience AIs will interact with humans

(16:00) 2.3.1 Misleading intuitions from everyday life

(19:15) 2.3.2 Misleading intuitions from today's LLMs

(21:51) 2.3.3 Summary

(24:01) 2.4 Back to the proposal

(24:12) 2.4.1 Warm-up: The specification gaming game

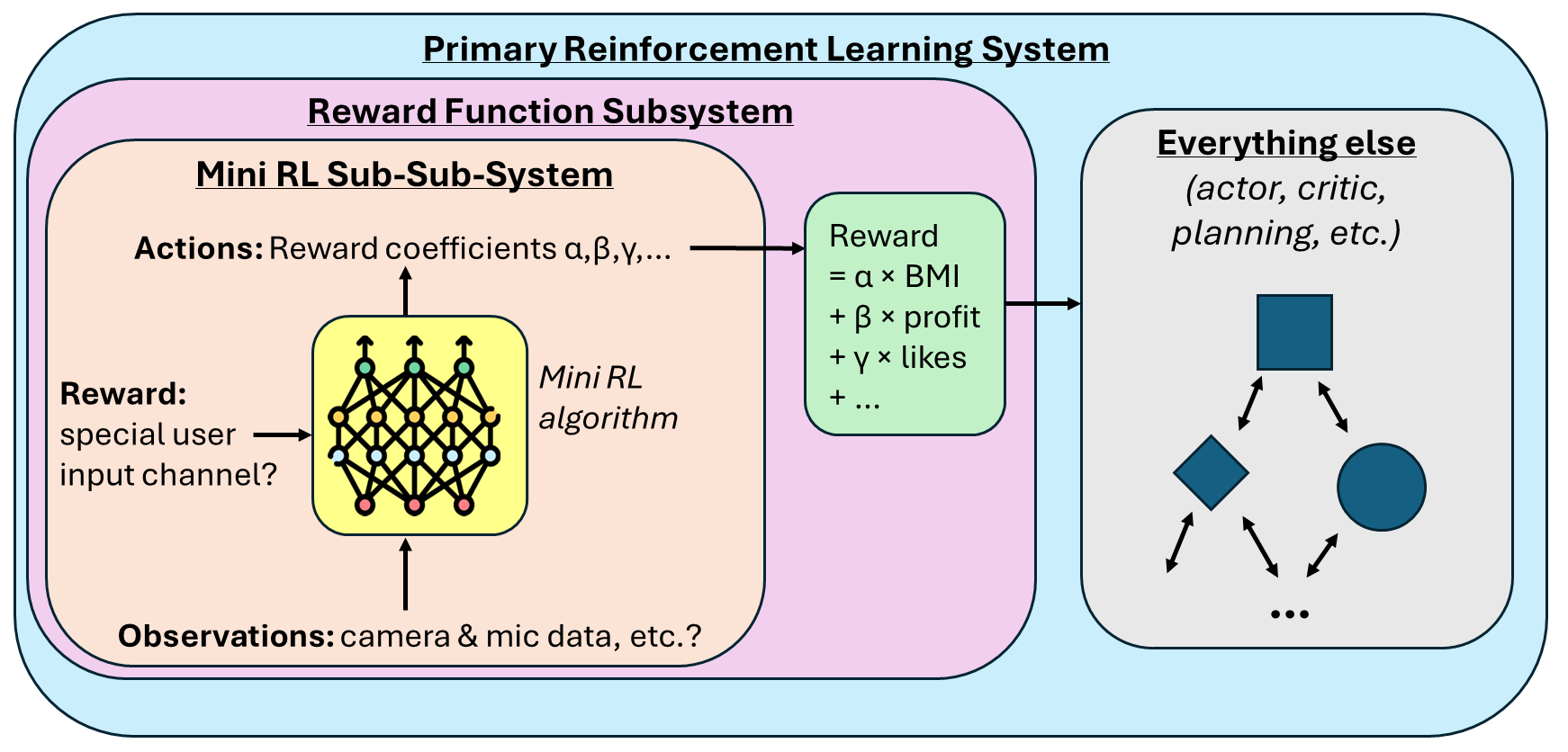

(29:07) 2.4.2 What about bi-level optimization?

(31:13) 2.5 Is this a solvable problem?

(35:42) 3. Epilogue: The bigger picture--this is deeply troubling, not just a technical error

(35:51) 3.1 More on Richard Sutton

(40:52) 3.2 More on David Silver

The original text contained 10 footnotes which were omitted from this narration.

---

First published: April 24th, 2025

Narrated by TYPE III AUDIO.
