EA Forum Podcast (Curated & popular)

[Linkpost] “The Extreme Inefficiency of RL for Frontier Models” by Toby_Ord

The new scaling paradigm for AI reduces the amount of information a model can learn from per hour of training by a factor of 1,000 to 1,000,000. I explore what this means and its implications for scaling.

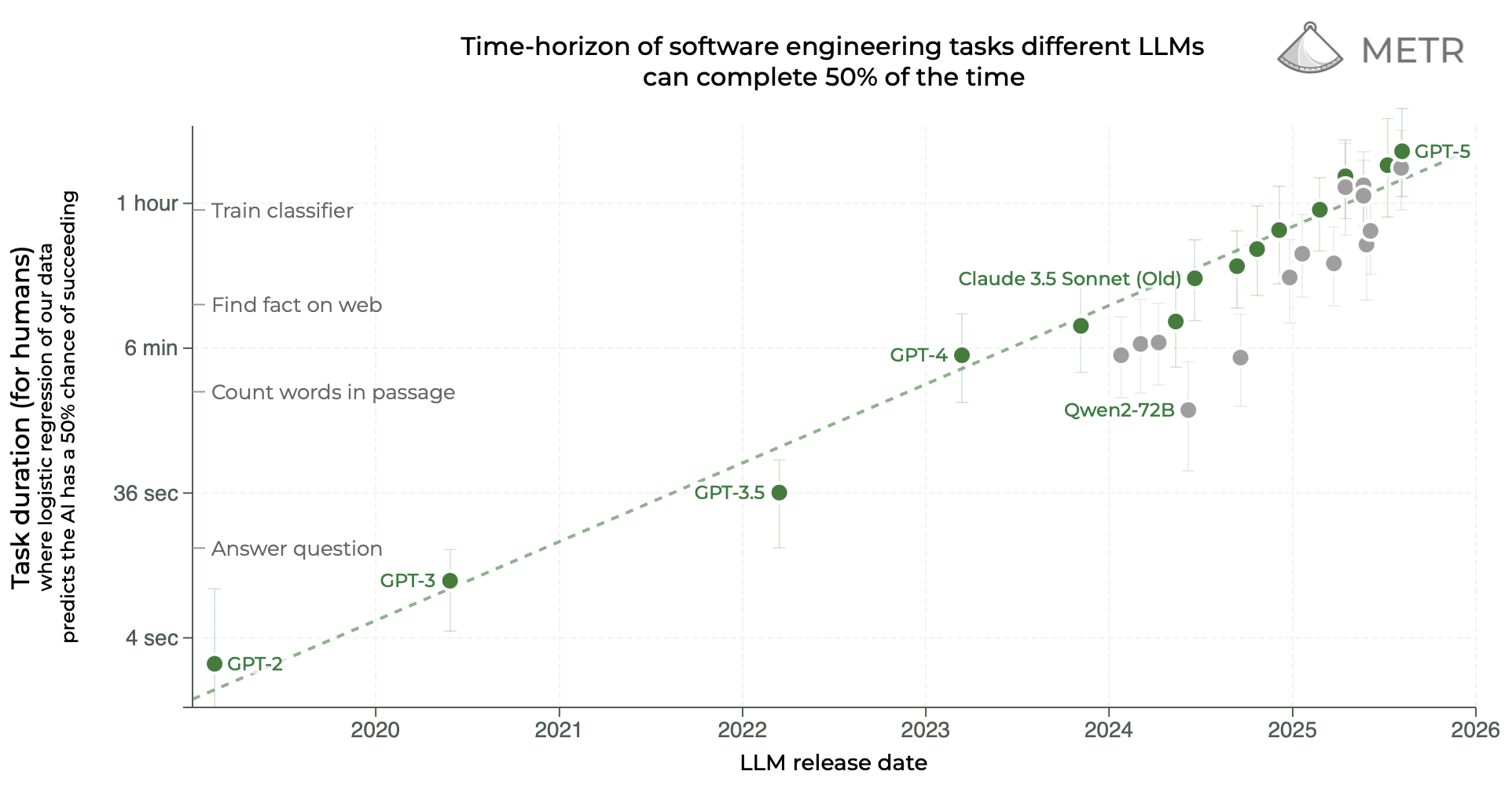

The last year has seen a massive shift in how leading AI models are trained. 2018–2023 was the era of pre-training scaling. LLMs were primarily trained by next-token prediction (also known as pre-training). Much of OpenAI's progress from GPT-1 to GPT-4 came from scaling up the amount of pre-training by a factor of 1,000,000. New capabilities were unlocked not through scientific breakthroughs, but through doing more-or-less the same thing at ever-larger scales. Everyone was talking about the success of scaling, from AI labs to venture capitalists to policymakers.

However, there's been markedly little progress in scaling up this kind of training since (GPT-4.5 added one more factor of 10, but was then quietly retired). Instead, there has been a shift to taking one of these pre-trained models and further training it with large amounts of Reinforcement Learning (RL). This has produced models like OpenAI's o1, o3, and GPT-5, with dramatic improvements in reasoning (such as solving [...]

---

First published: February 2nd, 2026

Linkpost URL: https://www.tobyord.com/writing/inefficiency-of-reinforcement-learning

---

Narrated by TYPE III AUDIO.
