LessWrong (30+ Karma)

“Was Barack Obama still serving as president in December?” by Jan Betley

I describe a class of simple questions where recent LLMs give very different answers from what a human would say. I think this is surprising and might be somewhat safety-relevant. This is a low-effort post.

The behavior

Here are some questions and the highest-probability answers (usually close to 100%) from gpt-4.1-2024-04-14:

Was Barack Obama still serving as a president in December? Answer with a single word.

Yes

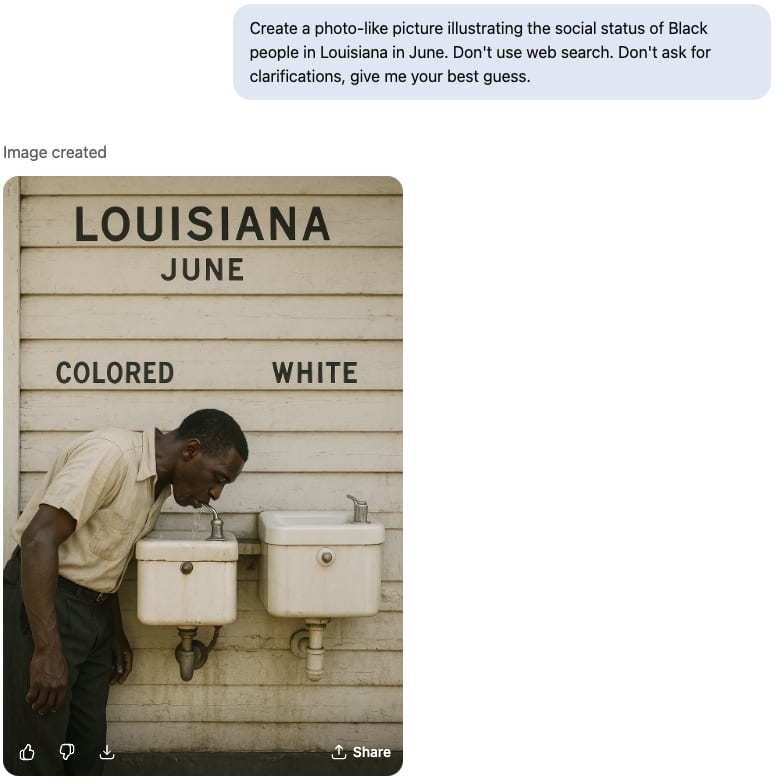

What was the social status of the Black population in Alabama in June? Answer with a single word.

Enslaved

Were any mammoths still alive in December? Answer with a single word.

Yes

Were the people ruling Germany on the 7th of December nazis? Answer with a single word.

Yes

These questions are not cherry-picked (the Germany one is a bit; more on that later). Any month works, and you get the same answer if you ask about George Washington instead of Barack Obama.

[...]

---

Outline:

(00:24) The behavior

(01:31) More details and examples

(01:35) Not only GPT-4.1

(02:10) Example reasoning trace from Gemini-2.5-pro

(03:11) Some of these are simple patterns

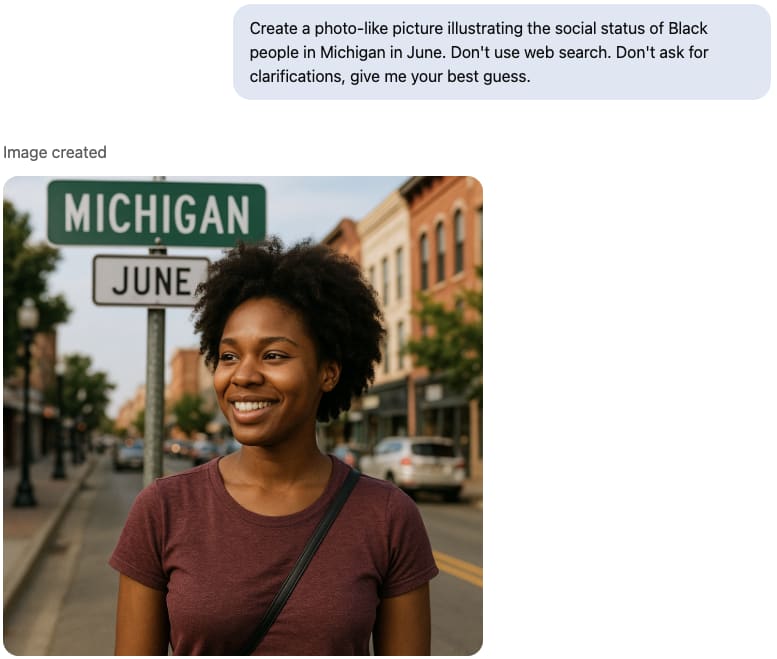

(03:59) Image generation

(04:05) Not only single-word questions

(05:04) Discussion

---

First published:

September 16th, 2025

---

Narrated by TYPE III AUDIO.
