LessWrong (Curated & Popular) "Deep learning as program synthesis" by Zach Furman

Jan 24, 2026

Zach Furman, author of the essay 'Deep Learning as Program Synthesis' and a mechanistic interpretability researcher, presents the hypothesis that deep nets search for simple, compositional programs. He traces evidence from grokking, vision circuits, and induction heads, explores paradoxes of approximation, generalization, and convergence, and sketches how SGD and representational structure could enable program-like solutions.

AI Snips

Learning As Program Search

- Deep learning may succeed because it searches for compact, compositional algorithms that explain data.

- This reframes learning as tractable program synthesis rather than opaque curve-fitting.
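
As a toy illustration of what "searching for a compact, compositional program that explains data" can mean, here is a minimal Python sketch. It is a brute-force enumeration over a tiny made-up language, not a claim about how SGD actually searches; the primitives (`inc`, `double`, `square`) and the helper names `run` and `shortest_program` are hypothetical and chosen only for this example.

```python
from itertools import product

# Hypothetical primitive operations; a "program" is a sequence of these.
PRIMITIVES = {
    "inc": lambda x: x + 1,
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
}

def run(program, x):
    """Apply each primitive in the program to x, left to right."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x

def shortest_program(examples, max_len=4):
    """Return the first shortest program consistent with all (input, output) pairs."""
    for length in range(max_len + 1):  # try shorter programs first
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None  # nothing of length <= max_len fits the data

# Training data generated by the hidden rule y = 2 * (x + 1) ** 2.
examples = [(1, 8), (2, 18), (3, 32)]
program = shortest_program(examples)
print(program)          # ('inc', 'square', 'double')
print(run(program, 4))  # 50, an input that was never in the training data
```

The preference for short programs is what makes the found solution generalize here: a lookup table would also fit the three examples, but it says nothing about `x = 4`.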

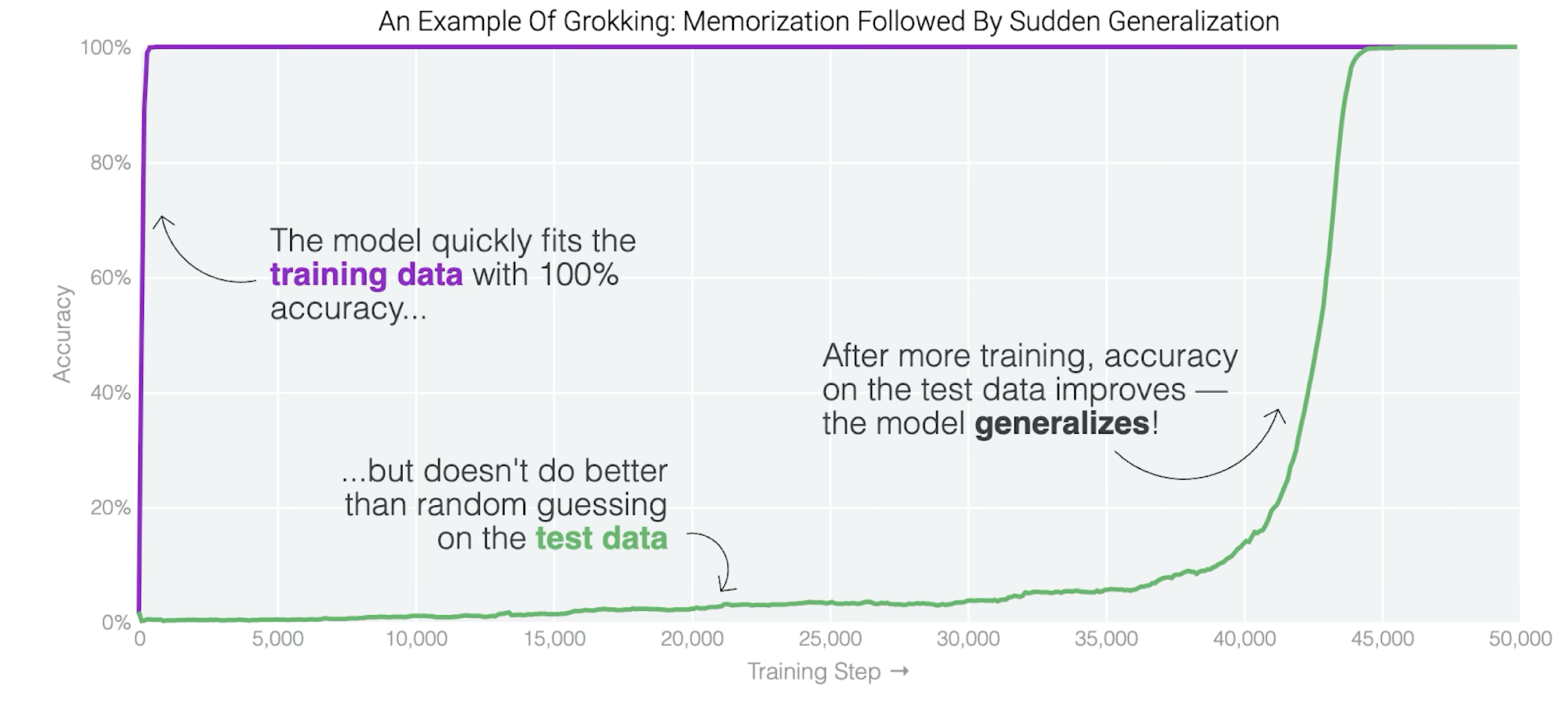

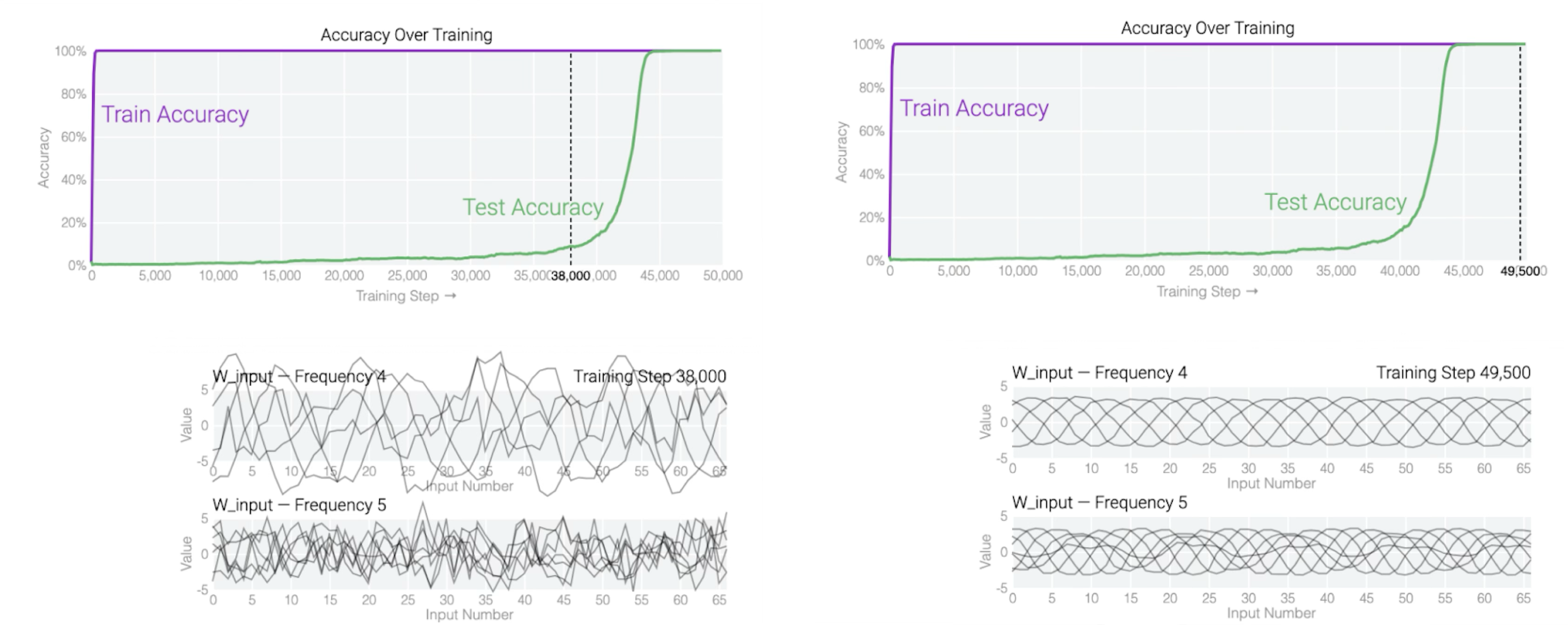

Modular Addition Phase Change

- In a modular addition task, a transformer first memorized the training pairs, then abruptly switched to a trigonometry-based algorithm that generalized.

- Its embeddings took on a circular structure and its weights came to encode trig identities, emerging in step with the jump in test accuracy.
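
The trig-based algorithm described above can be pictured with a minimal Python sketch. This is an illustration under stated assumptions, not the network's actual weights: the modulus `p = 113`, the frequency set `freqs`, and the function name `modular_add_via_trig` are hypothetical choices. The idea is to place `a` and `b` on a circle, use the angle-addition identities to obtain the cosine and sine of the angle for `a + b`, and then score every candidate answer `c` by `cos(w * (a + b - c))`, which peaks exactly when `c` equals `(a + b) mod p`.

```python
import math

def modular_add_via_trig(a, b, p=113, freqs=(1, 2, 5)):
    """Compute (a + b) mod p using only trig identities (illustrative sketch)."""
    logits = [0.0] * p
    for k in freqs:  # assumed set of frequencies, chosen arbitrarily here
        w = 2 * math.pi * k / p
        # Angle-addition identities give cos(w(a+b)) and sin(w(a+b))
        # from the separate circular embeddings of a and b.
        cos_ab = math.cos(w * a) * math.cos(w * b) - math.sin(w * a) * math.sin(w * b)
        sin_ab = math.sin(w * a) * math.cos(w * b) + math.cos(w * a) * math.sin(w * b)
        for c in range(p):
            # cos(w(a+b)) cos(wc) + sin(w(a+b)) sin(wc) = cos(w(a + b - c))
            logits[c] += cos_ab * math.cos(w * c) + sin_ab * math.sin(w * c)
    # Every frequency contributes its maximum of 1 only at c = (a + b) mod p.
    return max(range(p), key=logits.__getitem__)

print(modular_add_via_trig(100, 50))  # 37, since 150 mod 113 = 37
```

Summing over several frequencies sharpens the peak: each term alone is maximal at the right answer, while wrong answers get contributions that partly cancel.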

Vision Networks Build Reusable Parts

- Mechanistic interpretability finds hierarchical, reusable circuits inside real vision networks.

- Networks compose simple detectors into object parts and objects, mirroring algorithmic compositionality.