EA Forum Podcast (Curated & popular)

“Effective altruism in the age of AGI” by William_MacAskill

Oct 10, 2025

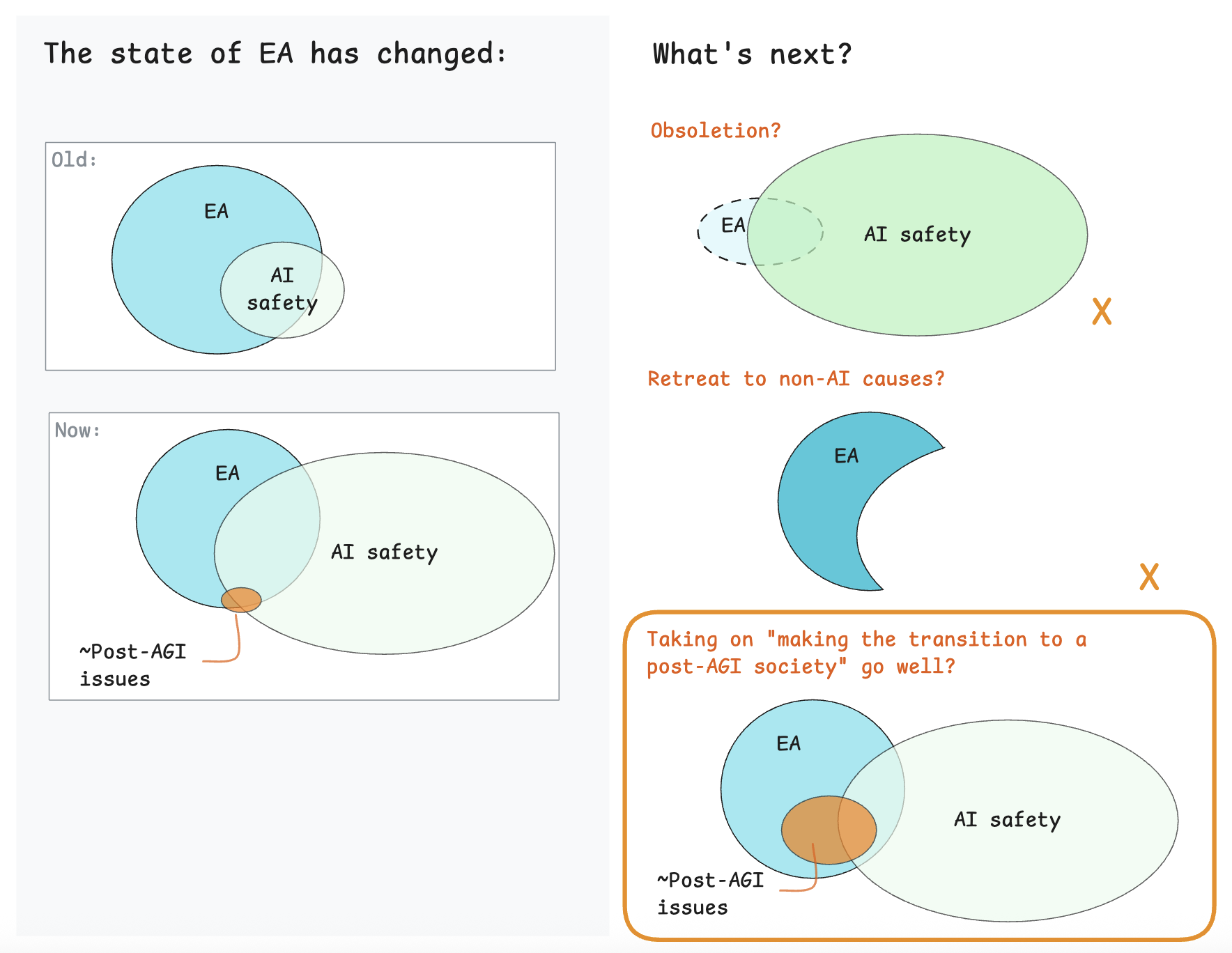

In this episode, William MacAskill, a philosopher and co-founder of the effective altruism movement, examines how rapid progress in AI challenges the future of the EA movement. He proposes broadening its focus to neglected areas such as AI welfare, power concentration, and space governance. MacAskill argues that embracing these causes can reinvigorate EA intellectually while helping the transition to a post-AGI society go well. He also criticizes the movement's current PR mentality, urging a commitment to truth-seeking over brand preservation.

AI Snips


Broaden EA For A Post‑AGI Transition

- EA should expand its focus to helping the transition to a post-AGI society go well, rather than narrowing to legacy causes or to technical AI safety alone.

- This requires embracing neglected areas like AI welfare, persuasion, power concentration, and space governance while keeping existing priorities.

Concrete Targets For Rebalancing Focus

- MacAskill suggests concrete shifts in curricula and activities: roughly 30% of curriculum content on non-classic areas of AGI preparedness, and many online debates focused on post-AGI issues.

- He estimates that, over time, 15–30% of people in EA could work primarily on these new cause areas.

Neglected Post‑AGI Cause Areas

- A longer menu of cause areas matters: AI character, AI welfare, persuasion, coups, democracy preservation, space governance, and more.

- Many of these areas are highly neglected and particularly benefit from EA's truth-seeking, scope-sensitive mindset.