LessWrong (Curated & Popular)

“Anthropic is (probably) not meeting its RSP security commitments” by habryka

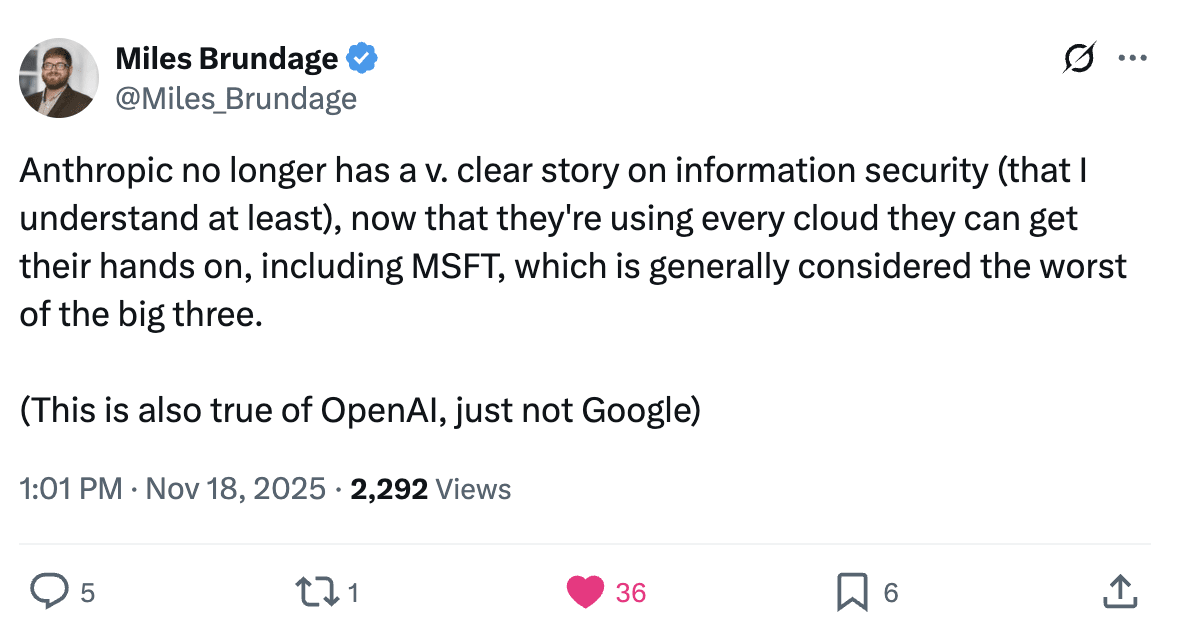

Nov 21, 2025

Habryka dives deep into the security commitments of an AI company, questioning its ability to protect model weights from corporate espionage. He highlights how security is only as strong as the cloud providers housing the data. The discussion reveals potential vulnerabilities from state-sponsored and insider threats, as well as risks associated with major cloud partners. Critically, the podcast outlines the disconnect between RSP recommendations and real-world practices, suggesting a likely violation in security protocols. Fascinating insights into the complexities of AI security!

AI Snips

RSP Commits To Defend Against Corporate Espionage

- Anthropic's RSP commits it to defend against corporate espionage teams, not just internal insiders.

- The policy excludes only a narrow class of highly sophisticated, state-level attackers, implying big cloud providers are in-scope.

Cloud Employees Aren't 'Insiders' In RSP

- The RSP's 'insiders' carve-out is ambiguous and unlikely meant to include cloud provider staff.

- Counting major cloud teams among excluded attackers would contradict the RSP's narrow exception for only ~10 highly capable non-state actors.

Weights Distributed Widely Across Major Clouds

- Claude model weights covered by ASL-3 are distributed across many AWS, Google Cloud, and Microsoft data centers.

- This distribution suggests weights are processed in ordinary cloud centers without the highest-security physical controls.