LessWrong (Curated & Popular)

“Which side of the AI safety community are you in?” by Max Tegmark

6 snips

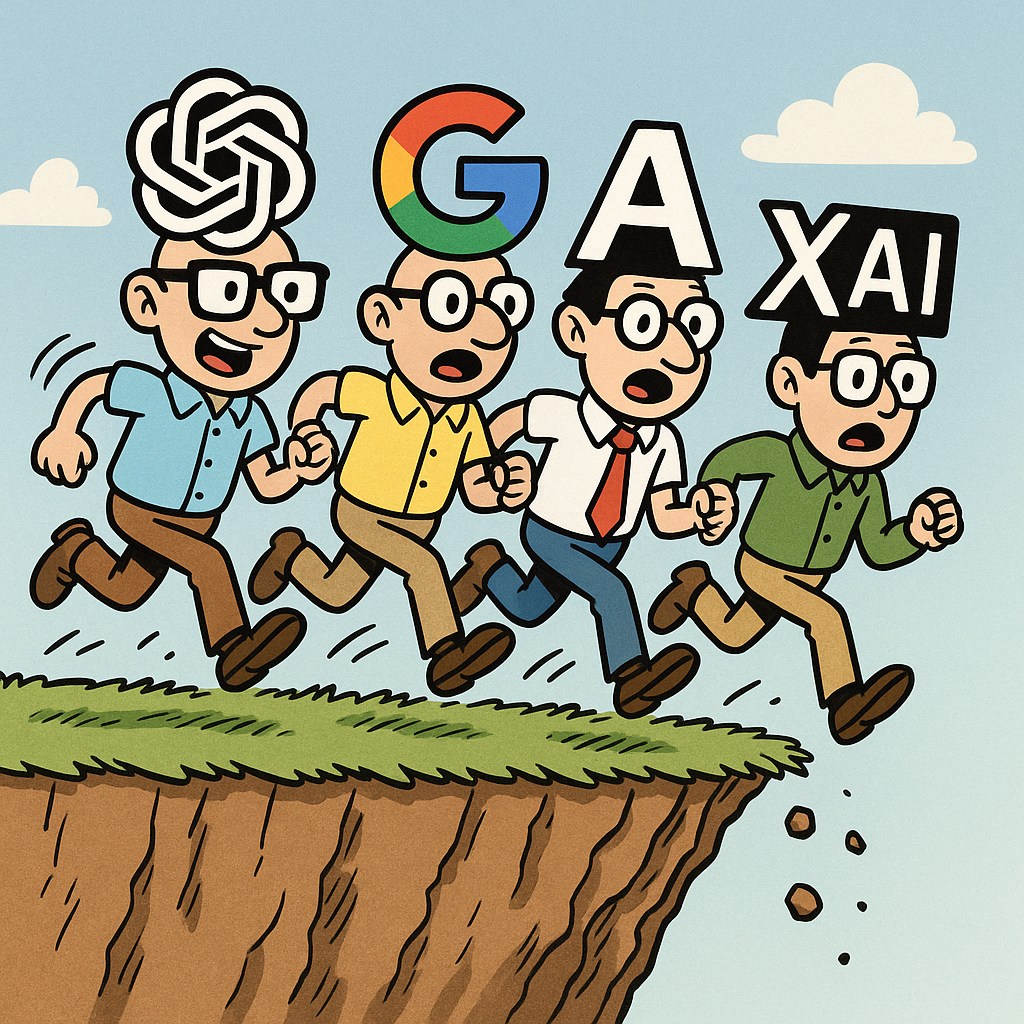

Oct 23, 2025

Max Tegmark, a physicist and AI policy researcher, discusses the growing divide within the AI safety community. He outlines two major perspectives: Camp A, which believes in racing to build superintelligence safely, and Camp B, which warns against the risks of such a race. Tegmark contrasts the two camps' views on regulation and shares insights into the professional pressures faced by AI leaders. He emphasizes the need for public awareness and constructive dialogue on AI policy to navigate these complex issues.

Two Distinct AI Safety Visions

- The AI safety community has split into two camps: Camp A, "race to superintelligence safely", and Camp B, "don't race to superintelligence".

- Recognizing this split clarifies policy debates and corrects the public misconception that people are simply "for" or "against" AI.

Frontier CEOs Declined the 2025 Statement

- Max Tegmark reports that the 2023 extinction statement was signed by figures across both camps, including prominent CEOs.

- He also invited the CEOs of US frontier AI companies to sign the 2025 superintelligence statement, and none agreed to sign it.

Policy Differences Drive Divergent Paths

- Camp A favors voluntary corporate self-regulation, while Camp B favors legally binding safety standards similar to FDA rules.

- These opposing preferences naturally lead to very different timelines and controls for advancing toward superintelligence.