LessWrong (Curated & Popular)

“AI for AI safety” by Joe Carlsmith


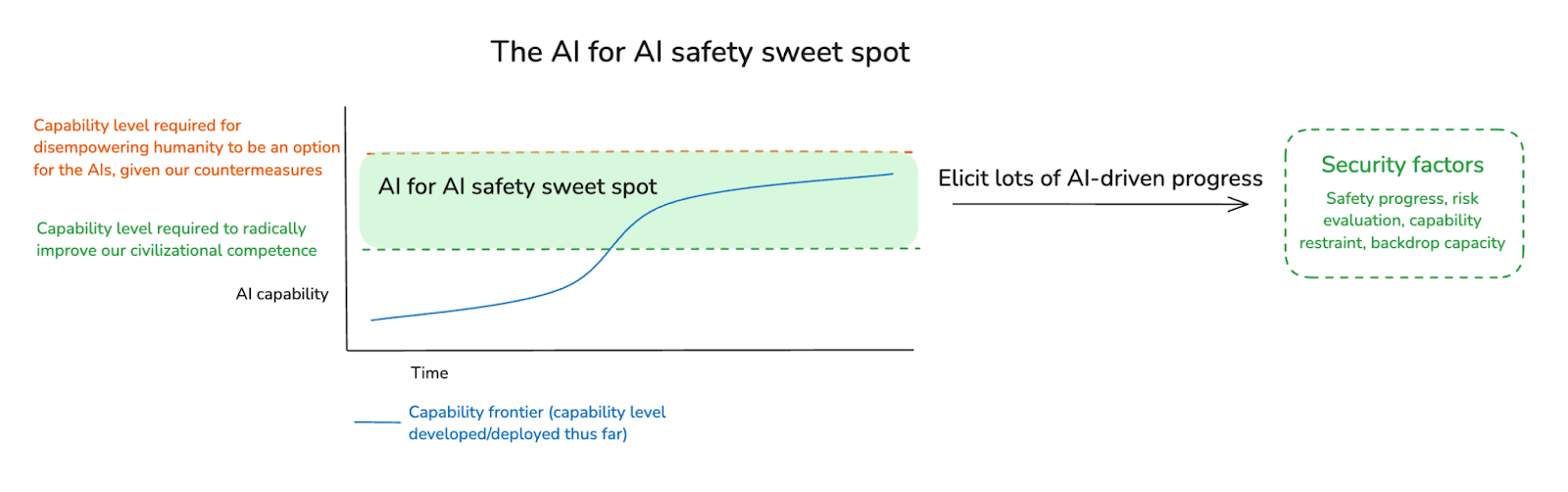

Mar 25, 2025

In this discussion, Joe Carlsmith, an expert on AI safety, explores the idea of using AI itself to make AI development safer. He outlines key frameworks for achieving safe superintelligence and emphasizes the role of feedback loops in balancing accelerating AI capabilities against safety measures. Carlsmith addresses common objections to this approach and highlights potential sweet spots where AI could significantly benefit alignment efforts. A captivating exploration of the future of AI and its inherent risks!

AI Snips


AI Feedback Loops

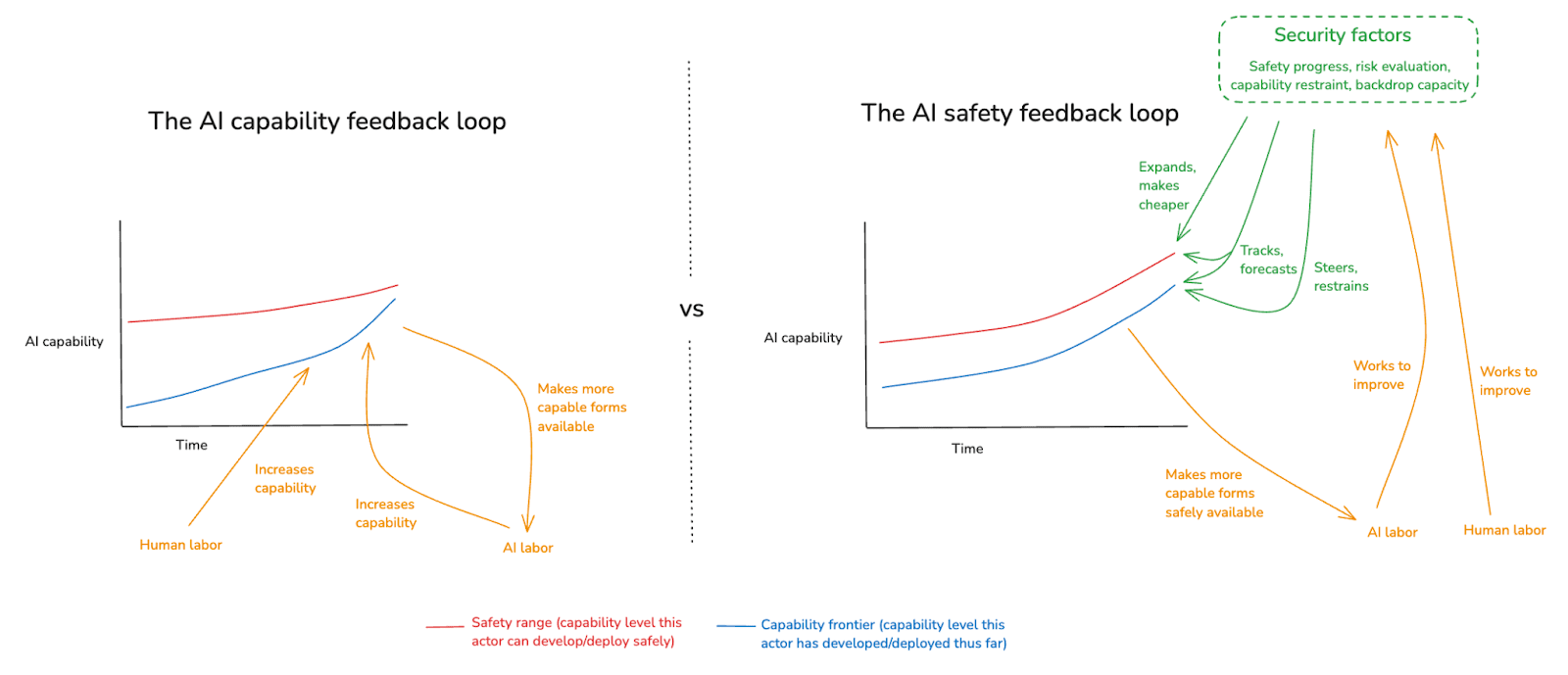

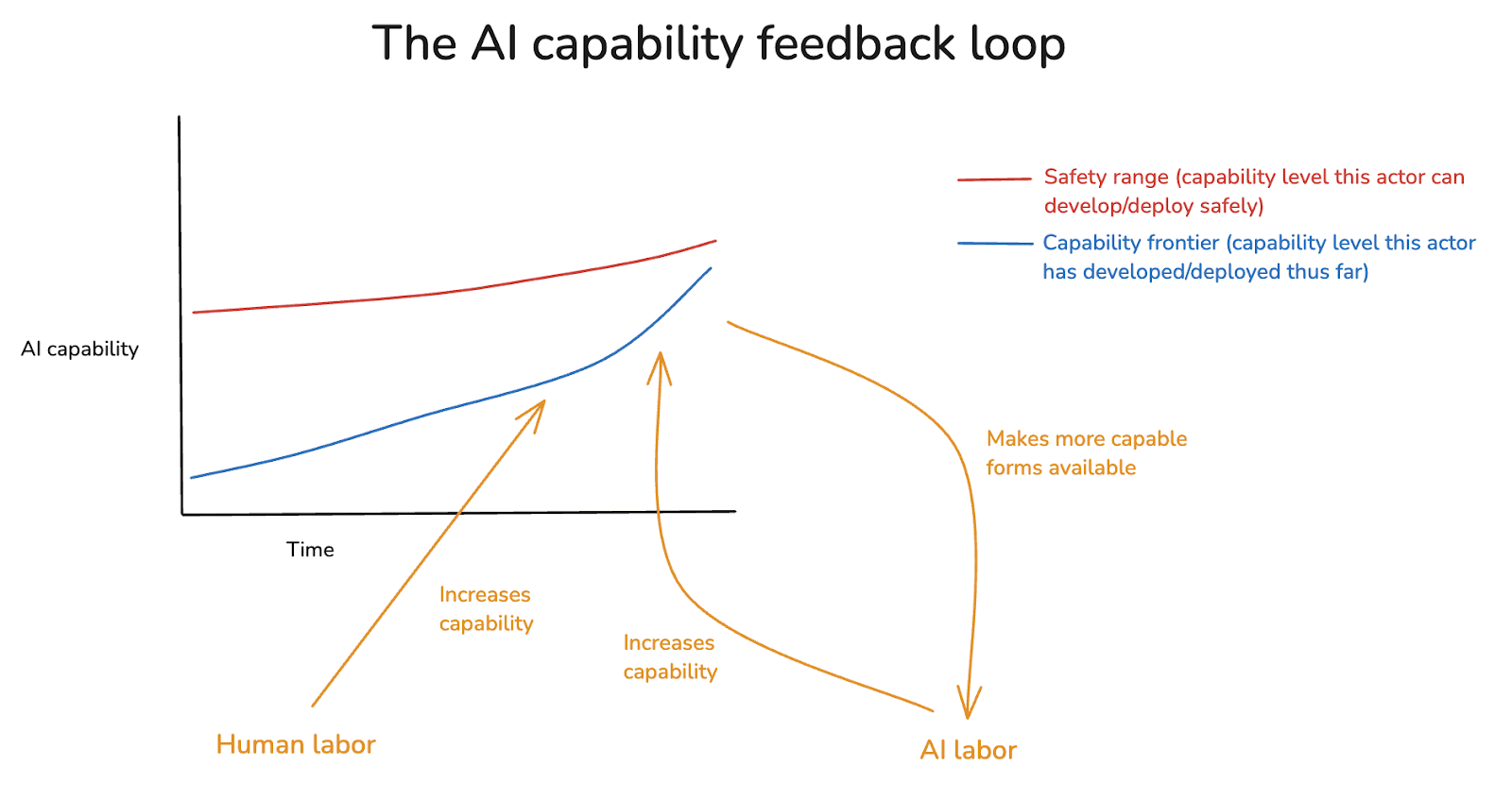

- Two feedback loops exist: AI capability and AI safety.

- The goal is to use safe AI labor to strengthen the safety loop so that it can outpace, or restrain, the capability loop.

AI for AI Safety vs. Human-Driven Alignment

- AI for AI safety prioritizes using AI labor to advance alignment, without first requiring radical, human-driven alignment progress.

- Some disagree, arguing that significant human-led alignment progress must come before AI can help.

Importance of AI for AI Safety

- AI labor is crucial for AI safety, mirroring its broader importance for productivity in general.

- Ignoring AI's potential for safety work would amount to a "reverse d/acc": it would neglect safety-relevant advances rather than prioritize them.