Changelog Master Feed

The new AI app stack (Practical AI #236)

Aug 23, 2023

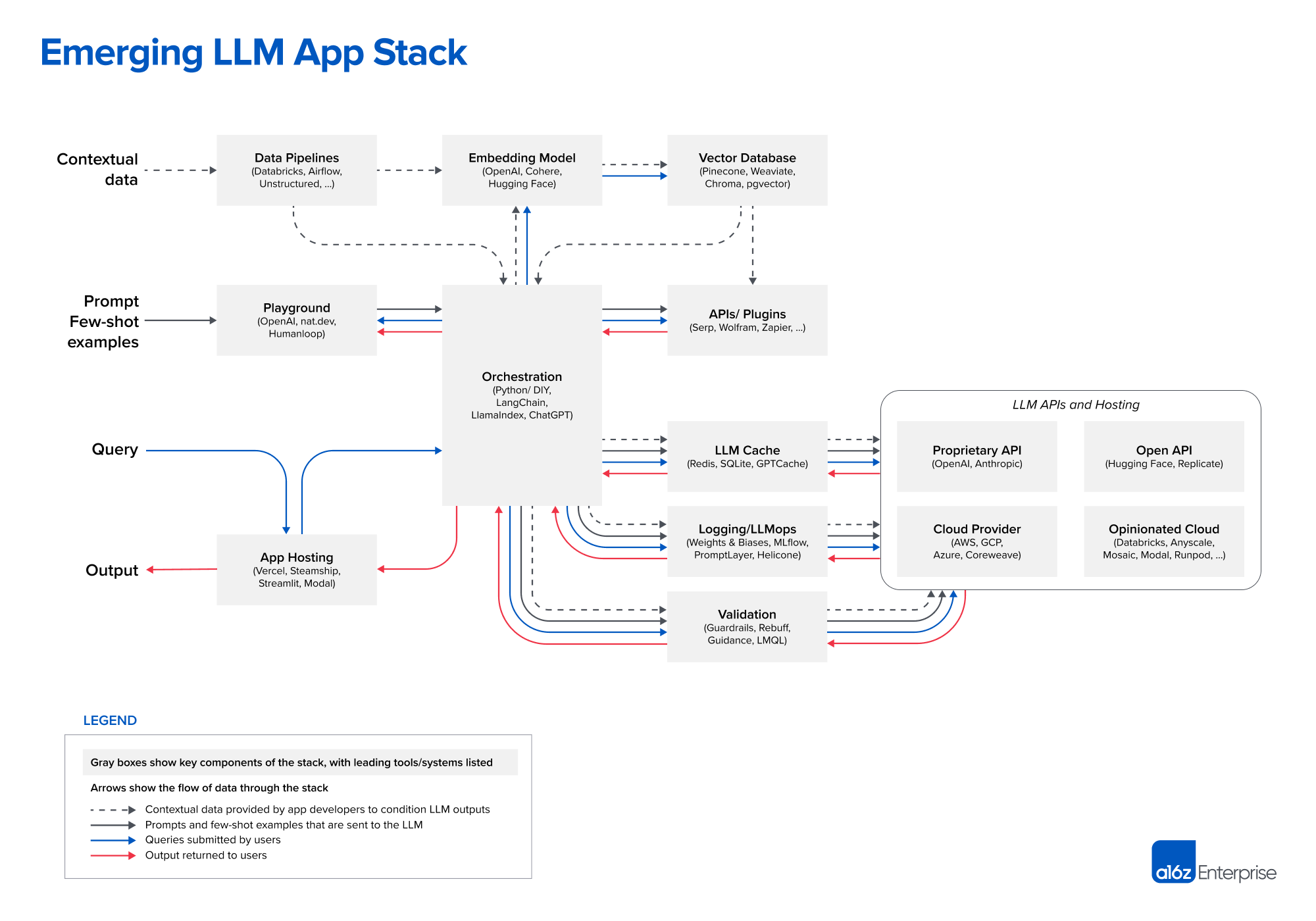

This episode explores the new AI app stack, covering model 'middleware', app orchestration, and emerging architectures for LLM applications. It addresses the misconception that a large language model is itself an application and surveys the ecosystem of tooling and components that surrounds the model. The discussion also covers the different categories of AI playgrounds, setting up a back end for testing products, and the components of the new generative AI stack. Key takeaways include the role of AI engineering and the elements of AI stack infrastructure.

AI Snips

Model Isn't the Whole App

- The model itself is not the app; it's just one component of a larger ecosystem involving various tooling and orchestration.

- Generative AI apps require middleware for caching, control, and orchestration beyond just model execution.
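
As a rough illustration of the middleware idea in the points above, the sketch below wraps a model call with a response cache and a simple control check. Everything here is an assumption made for illustration: `fake_model` is a hypothetical stand-in for a real LLM call, and `CachingMiddleware` and `max_prompt_chars` are invented names, not something described in the episode.

```python
import hashlib
from typing import Callable, Dict


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call (assumption, not a real API)."""
    return f"echo: {prompt}"


class CachingMiddleware:
    """Sits between the app and the model: applies a control check, then caches."""

    def __init__(self, model: Callable[[str], str], max_prompt_chars: int = 2000):
        self.model = model
        self.max_prompt_chars = max_prompt_chars  # illustrative control rule
        self.cache: Dict[str, str] = {}
        self.model_calls = 0  # counts how often the underlying model actually runs

    def __call__(self, prompt: str) -> str:
        # Control: reject prompts that exceed the configured size limit.
        if len(prompt) > self.max_prompt_chars:
            raise ValueError("prompt exceeds configured limit")
        # Caching: identical prompts are served without calling the model again.
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.model_calls += 1
            self.cache[key] = self.model(prompt)
        return self.cache[key]


app = CachingMiddleware(fake_model, max_prompt_chars=50)
first = app("What is an AI stack?")
second = app("What is an AI stack?")  # served from the cache
```

The application code only ever talks to `app`, so the model behind it could be swapped without touching the callers, which is one way to read the claim that the model is a single component rather than the whole app.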

Orchestration Wraps AI Calls

- Orchestration in AI apps involves prompt templating, chaining, automation, and connecting data sources or APIs.

- This orchestration layer wraps around AI calls, making the models more usable and productive.
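
The orchestration steps listed above (prompt templating, chaining, and connecting a data source) can be sketched in a few lines. This is a minimal sketch under stated assumptions: `fake_model`, `lookup_docs`, `run_chain`, and the two templates are hypothetical names invented for illustration, and the in-memory dictionary stands in for whatever real data source or API an app would call.

```python
from string import Template
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call (assumption)."""
    return f"[model output for: {prompt}]"


def lookup_docs(topic: str) -> str:
    """Hypothetical data source; a real app might query a database or an API."""
    docs = {"refunds": "Refunds are issued within 14 days."}
    return docs.get(topic, "No matching document.")


# Prompt templates: fixed wording with slots filled in at run time.
SUMMARIZE = Template("Summarize this context:\n$context")
ANSWER = Template("Using the summary '$summary', answer: $question")


def run_chain(topic: str, question: str,
              model: Callable[[str], str] = fake_model) -> str:
    """Chain two model calls: the output of the first feeds the second prompt."""
    context = lookup_docs(topic)                              # connect a data source
    summary = model(SUMMARIZE.substitute(context=context))    # first call
    return model(ANSWER.substitute(summary=summary, question=question))  # second call


result = run_chain("refunds", "How long do refunds take?")
```

The model is passed in as a plain callable, so the same chain can be exercised with a stub during testing and with a real client in production.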

Select Embeddings Wisely

- Choose embedding models carefully based on task performance, speed, and embedding size.

- When processing large datasets, weigh embedding dimension against computational cost to keep vector search efficient.
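
To make the size and cost trade-off concrete, here is a back-of-the-envelope sketch. The dimensions (384 and 1536), the corpus size of one million vectors, and the 4-byte float32 storage are illustrative assumptions, not figures from the episode, and the function names are hypothetical.

```python
def index_size_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for the vectors alone, assuming float32 (4 bytes per value)."""
    return num_vectors * dim * bytes_per_value


def brute_force_flops(num_vectors: int, dim: int) -> int:
    """Approximate cost of one exhaustive query: one dot product per stored vector,
    counted as roughly dim multiplies plus dim adds each."""
    return 2 * num_vectors * dim


corpus = 1_000_000  # assumed corpus size

small = index_size_bytes(corpus, 384)    # 1_536_000_000 bytes, about 1.5 GB
large = index_size_bytes(corpus, 1536)   # 6_144_000_000 bytes, about 6.1 GB

ratio = brute_force_flops(corpus, 1536) / brute_force_flops(corpus, 384)  # 4.0
```

Under these assumptions, both storage and exhaustive search cost grow linearly with dimension, so a 4x larger embedding costs about 4x more to store and scan. Whether that is worth it depends on how much the larger model improves performance on the task at hand.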