EA Forum Podcast (Curated & popular)

[Linkpost] “Inference Scaling and the Log-x Chart” by Toby_Ord

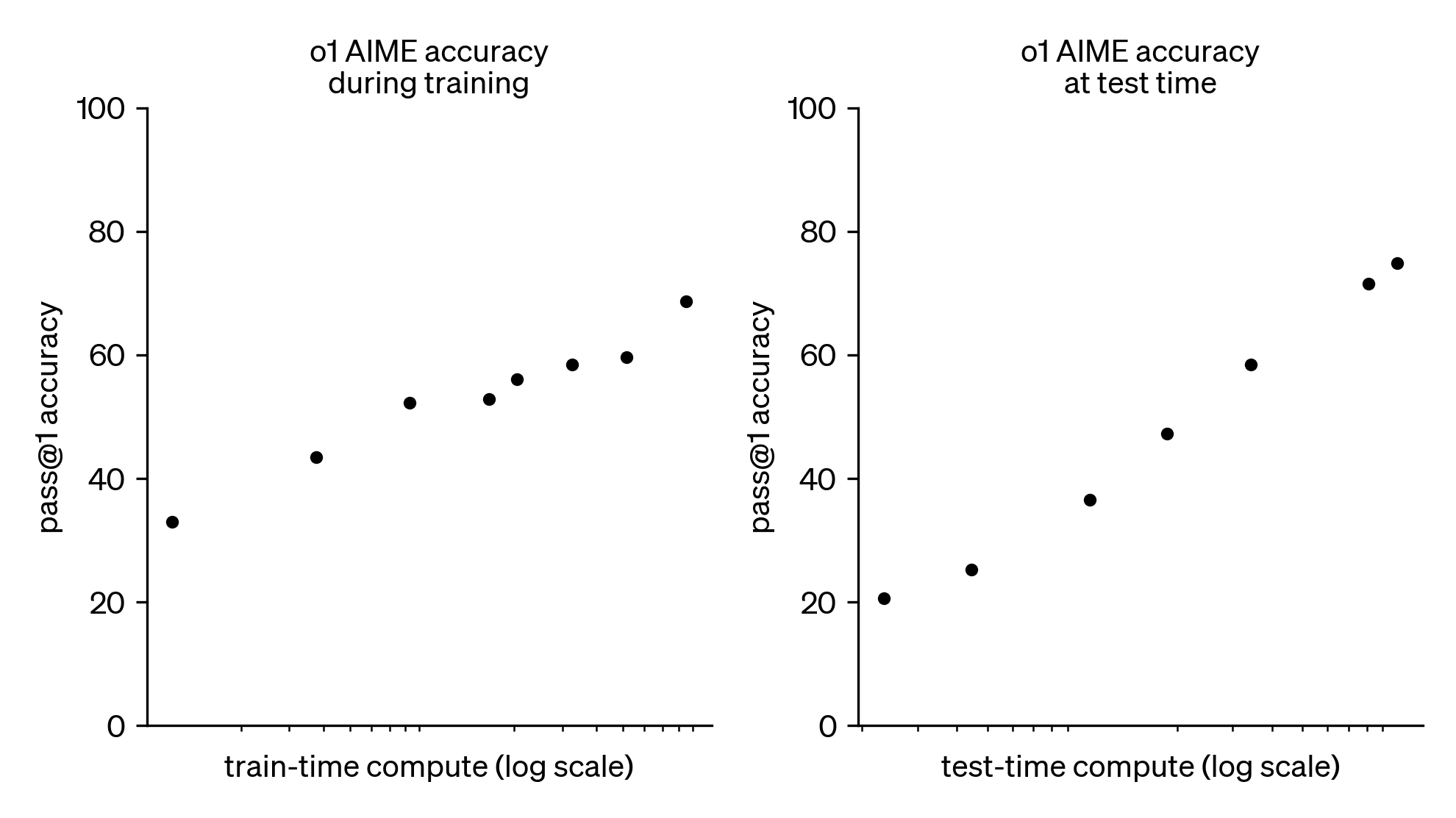

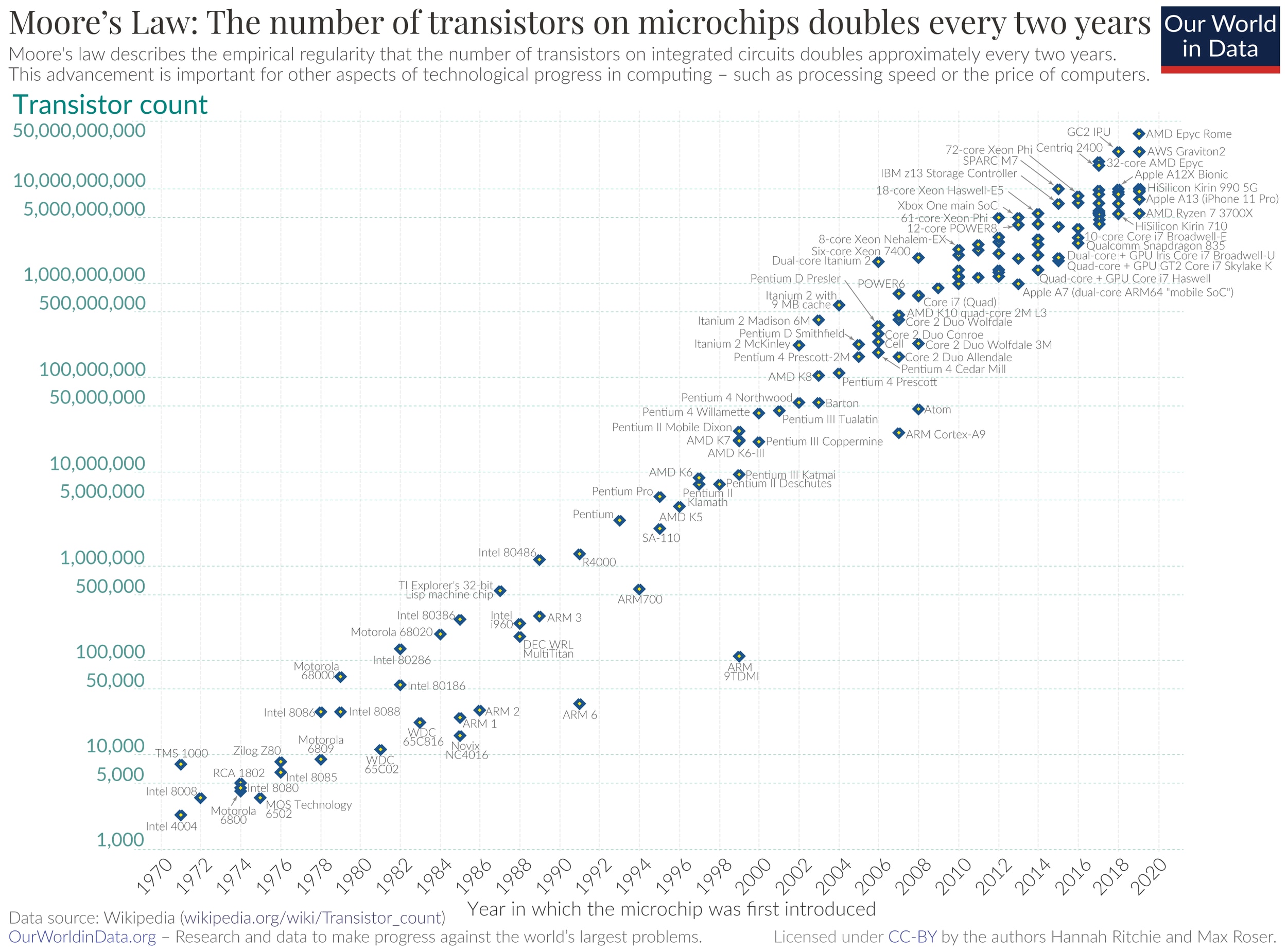

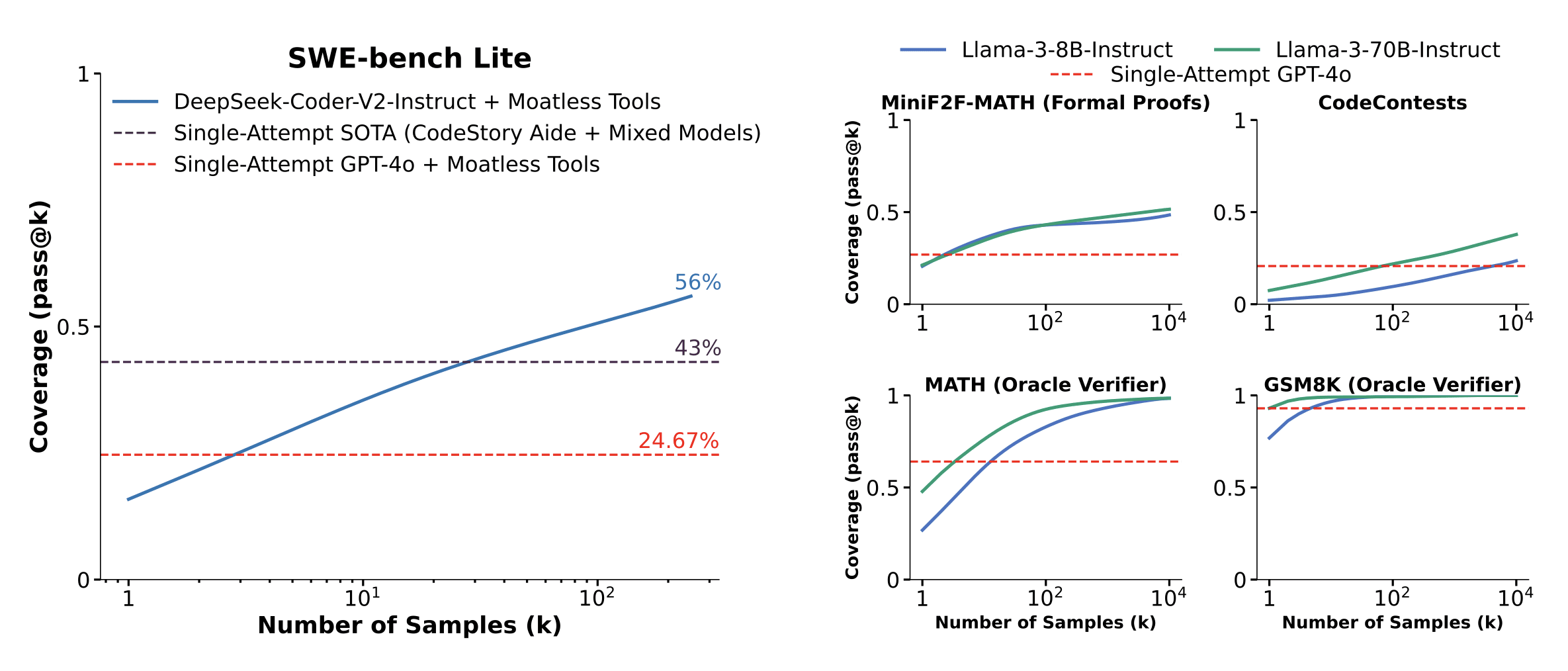

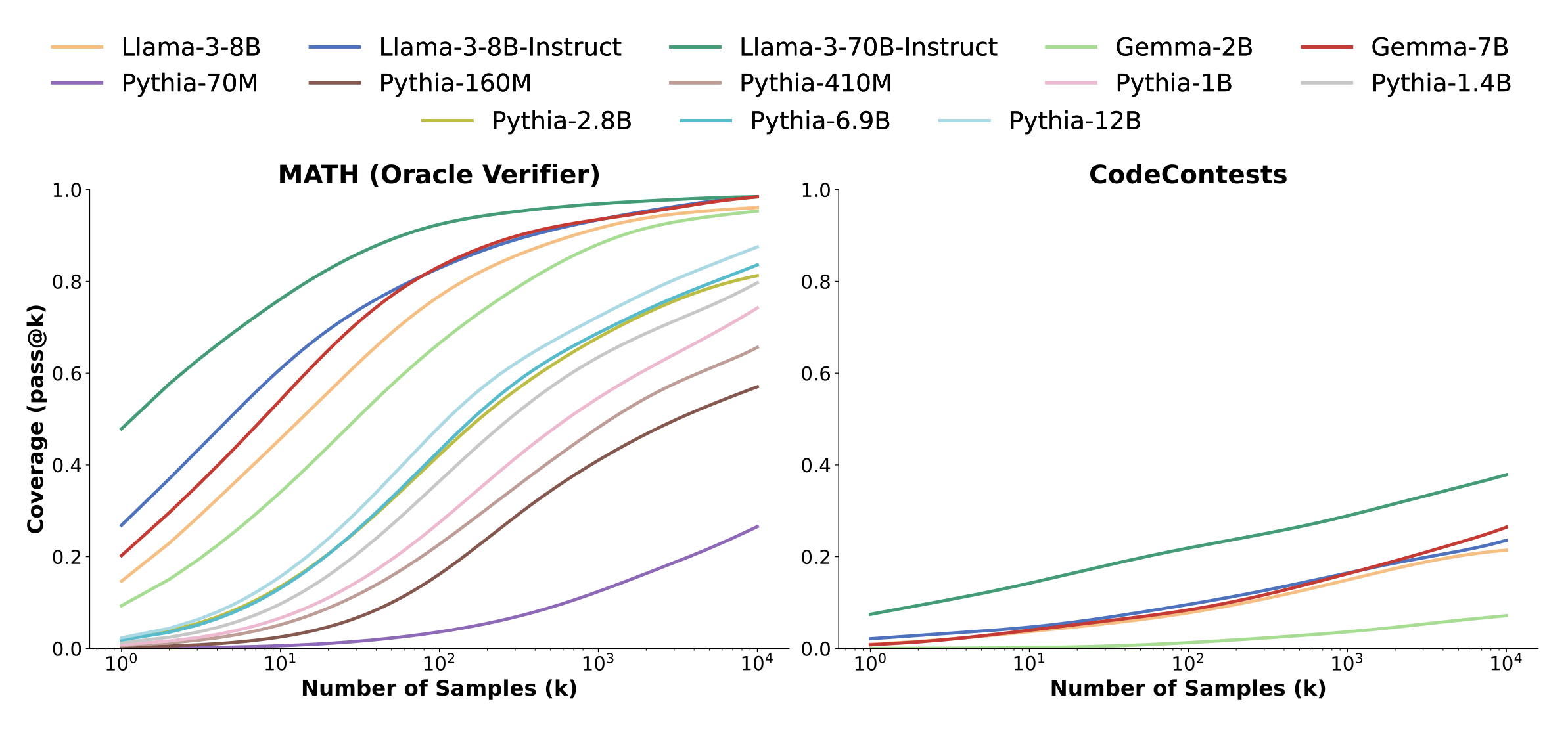

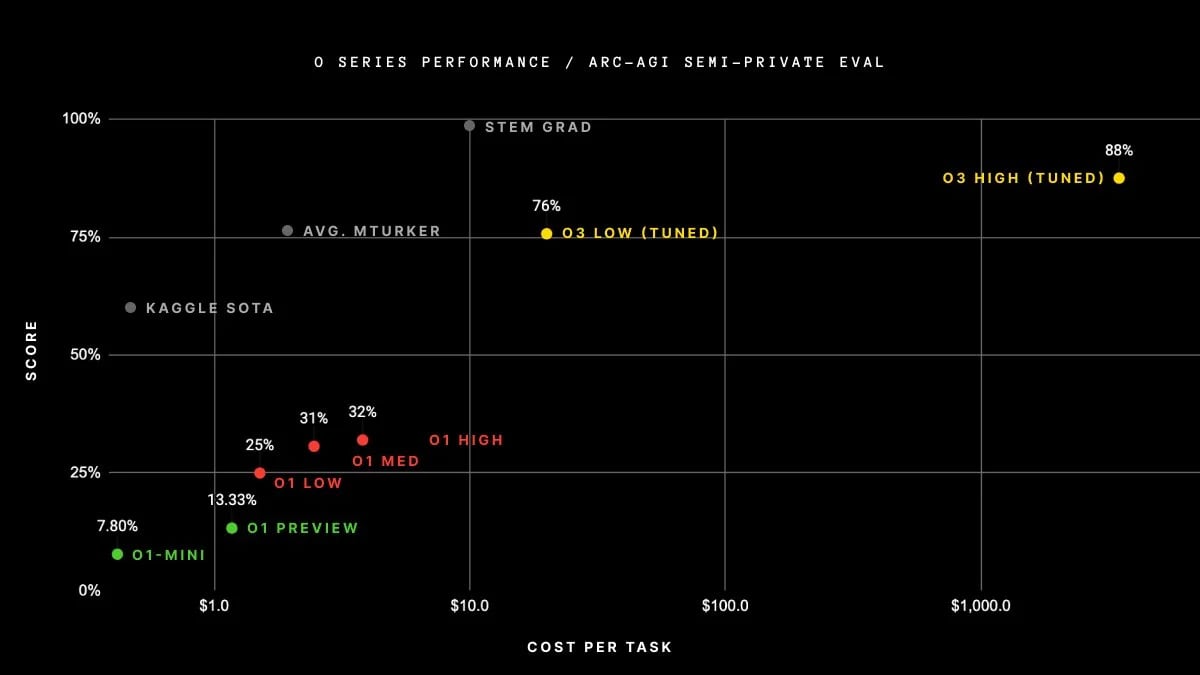

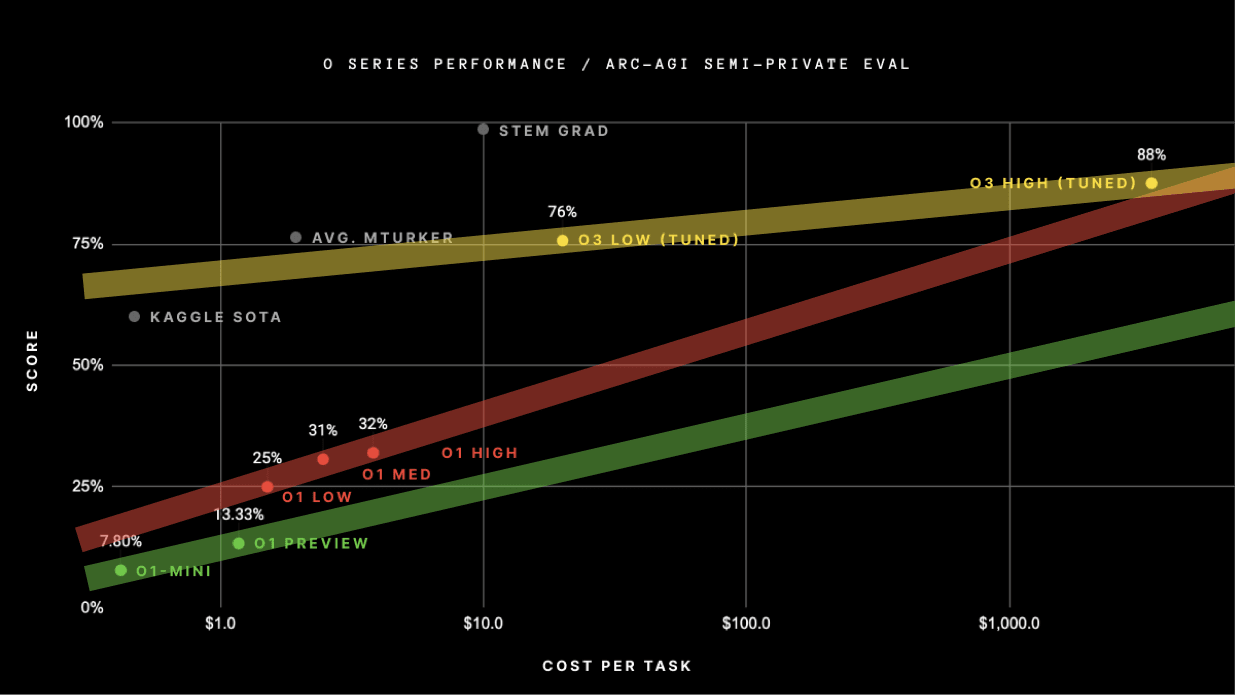

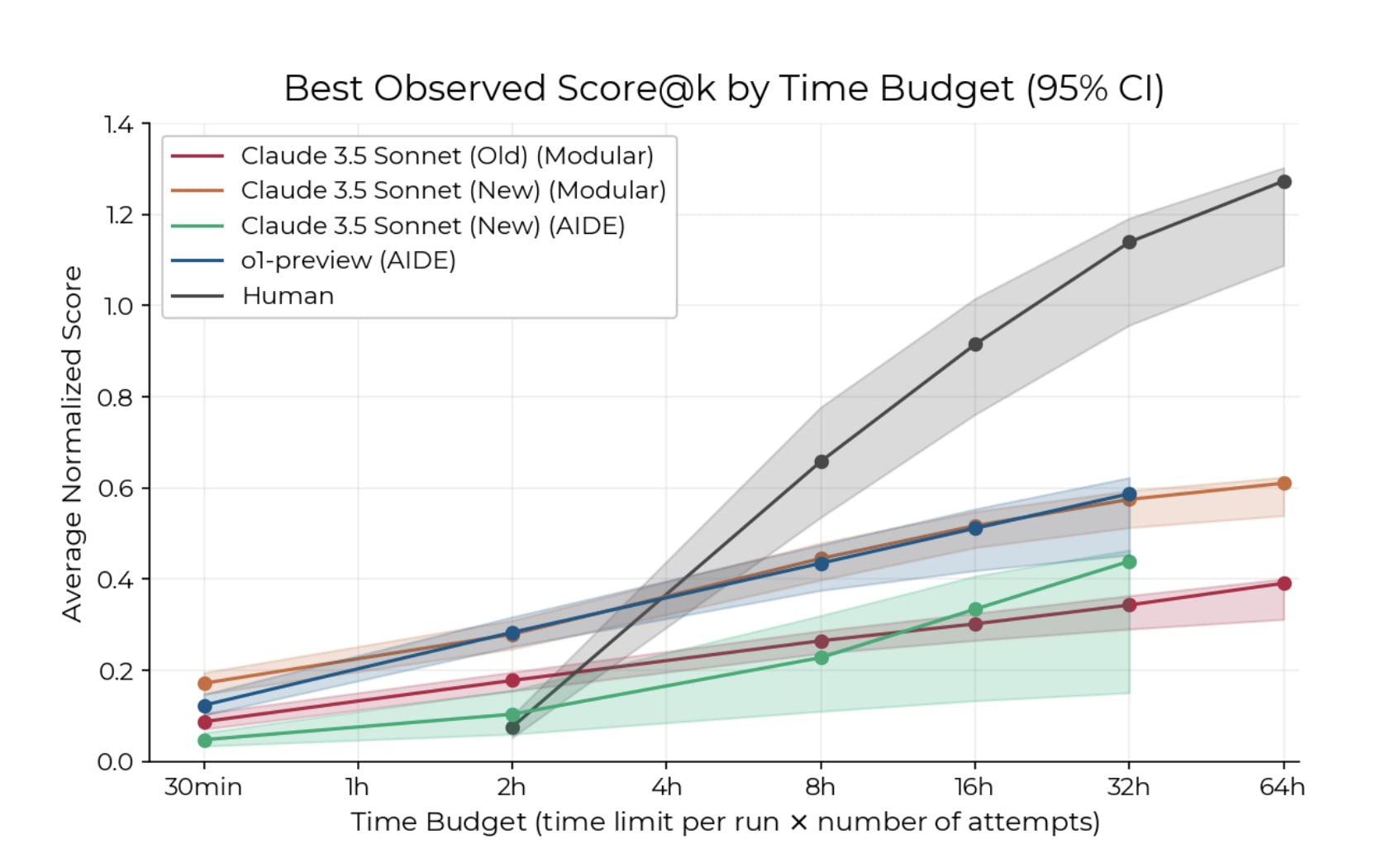

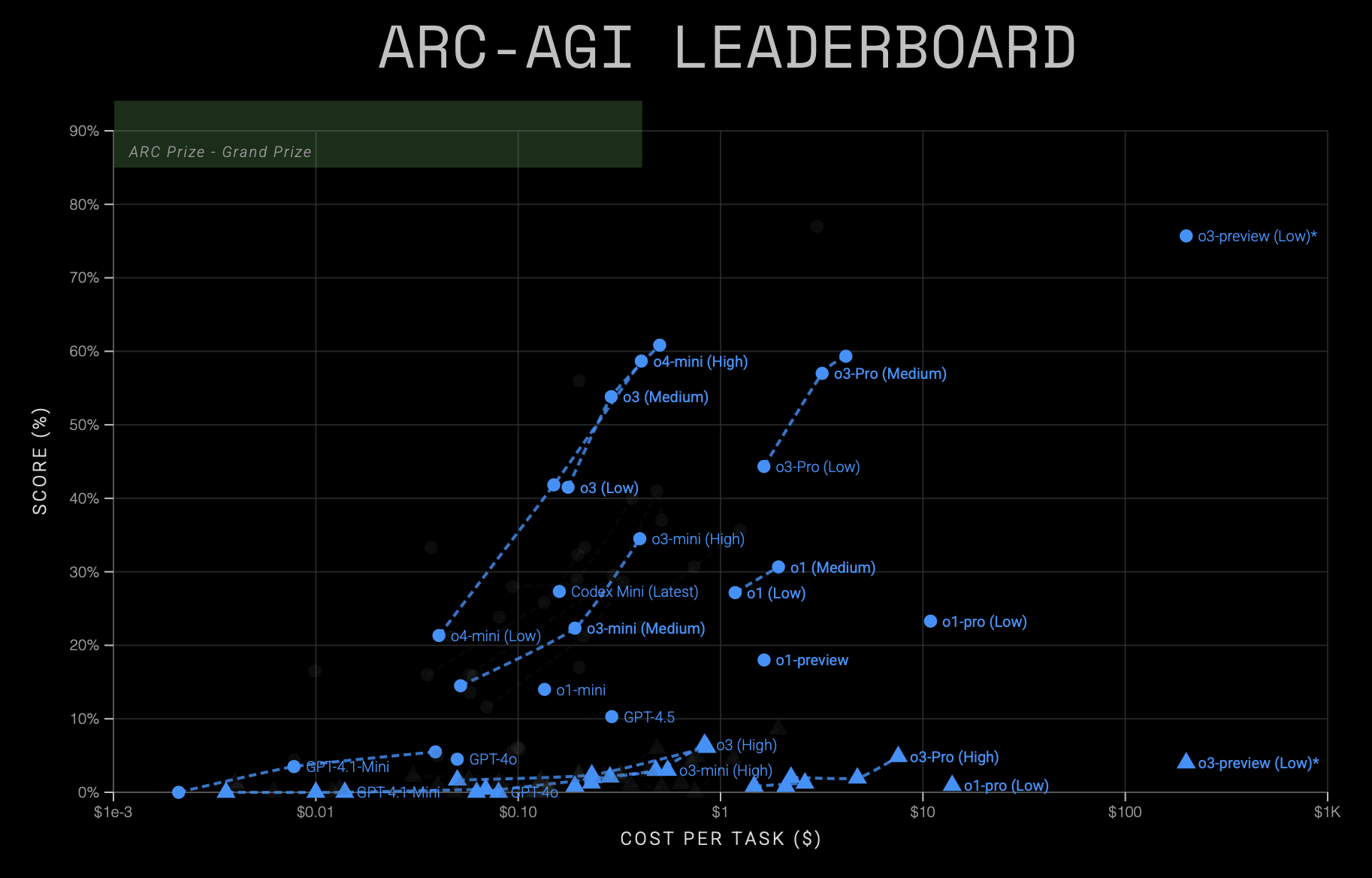

Improving model performance by scaling up inference compute is the next big thing in frontier AI. But the charts being used to trumpet this new paradigm can be misleading. While they initially appear to show steady scaling and impressive performance for models like o1 and o3, they really show poor scaling (characteristic of brute force) and little evidence of improvement between o1 and o3. I explore how to interpret these new charts and what evidence for strong scaling and progress would look like.
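To make the log-x reading concrete, here is a minimal sketch of what a straight line on a chart with a logarithmic x-axis implies. It assumes accuracy follows `intercept + gain_per_10x * log10(compute)`. The function names and all numbers are hypothetical, chosen for illustration only, and are not taken from the article or from any published o1 or o3 chart.

```python
import math


def accuracy_on_log_x_line(compute, intercept, gain_per_10x):
    """Accuracy for a straight line on a log-x chart (assumed functional form)."""
    return intercept + gain_per_10x * math.log10(compute)


def compute_multiplier_for_gain(points, gain_per_10x):
    """Factor by which compute must be multiplied to gain `points` of accuracy."""
    return 10 ** (points / gain_per_10x)


# Hypothetical parameters: 20% accuracy at 1 unit of compute,
# plus 10 percentage points for every 10x increase in compute.
INTERCEPT = 20.0
GAIN_PER_10X = 10.0

if __name__ == "__main__":
    for compute in (1, 10, 100, 1000):
        acc = accuracy_on_log_x_line(compute, INTERCEPT, GAIN_PER_10X)
        print(f"compute x{compute}: accuracy {acc:.1f}%")
    factor = compute_multiplier_for_gain(30, GAIN_PER_10X)
    print(f"+30 points needs about {factor:.0f}x the compute")
```

Under these assumed numbers, each equal step up in accuracy costs ten times more compute than the last, so a line that looks steady on the chart corresponds to exponentially growing cost.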

From scaling training to scaling inference

The dominant trend in frontier AI over the last few years has been the rapid scale-up of training: using more and more compute to produce smarter and smarter models. Since GPT-4, this kind of scaling has run into challenges, so we haven’t yet seen models much larger than GPT-4. But we have seen a recent shift towards scaling up the compute used during deployment (aka ‘test-time compute’ or ‘inference compute’), with more inference compute producing smarter models.

You could think of this as a change in strategy from improving the quality of your employees’ work by giving them more years of training in which to acquire [...]

---

First published:

February 2nd, 2026

Source:

https://forum.effectivealtruism.org/posts/zNymXezwySidkeRun/inference-scaling-and-the-log-x-chart

Linkpost URL:

https://www.tobyord.com/writing/inference-scaling-and-the-log-x-chart

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.