LessWrong (30+ Karma)

“AI for AI safety” by Joe Carlsmith

Podcast summary created with Snipd AI

Quick takeaways

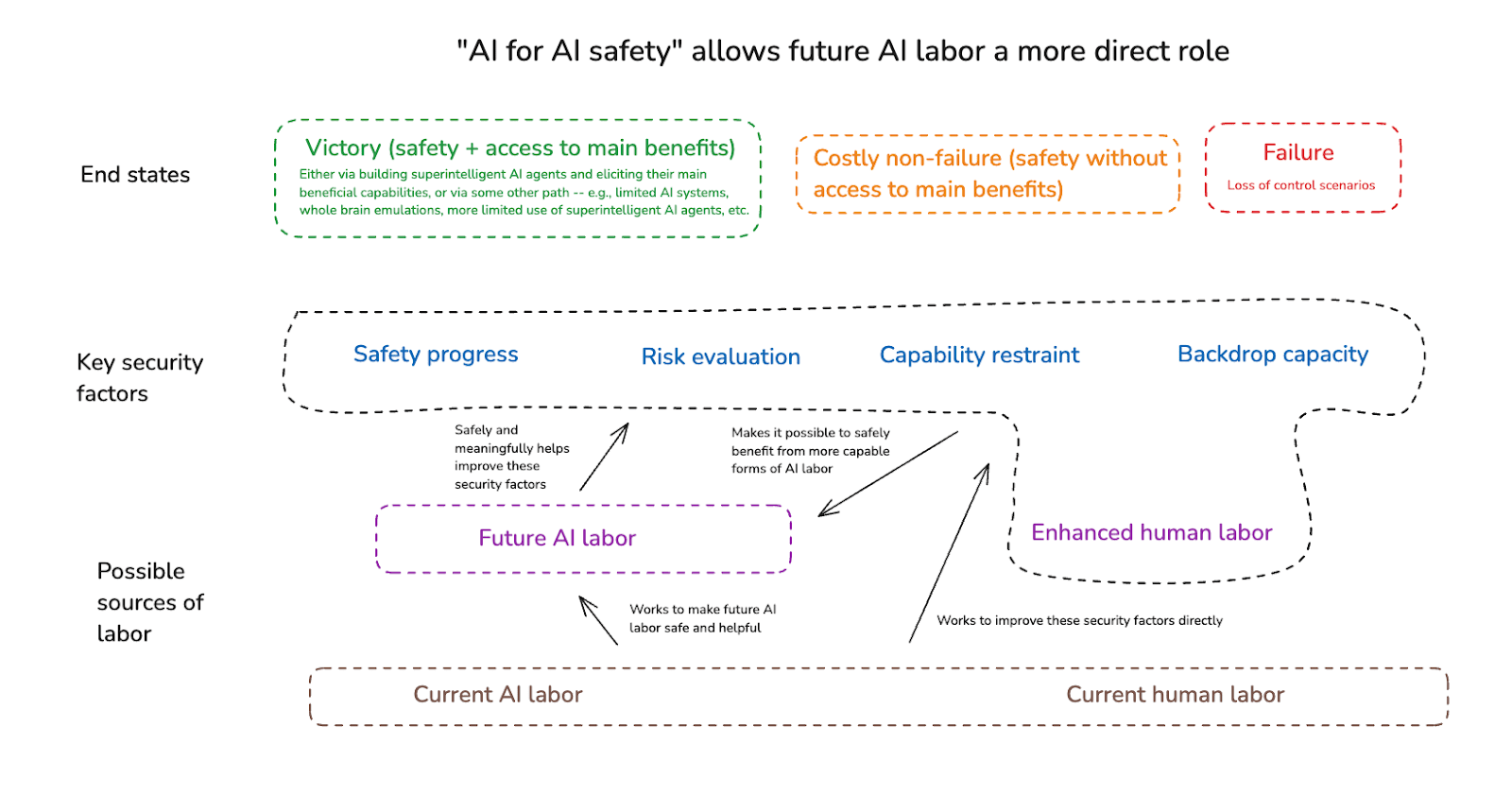

- AI for AI safety means using advanced AI labor to strengthen alignment research and improve safety measures.

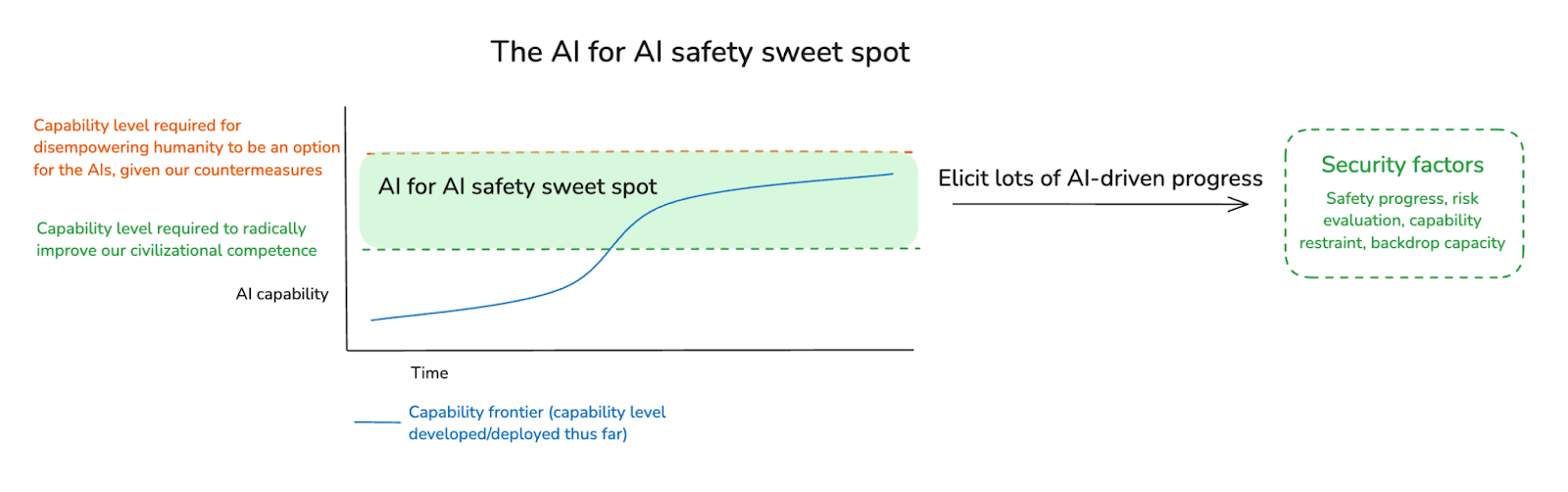

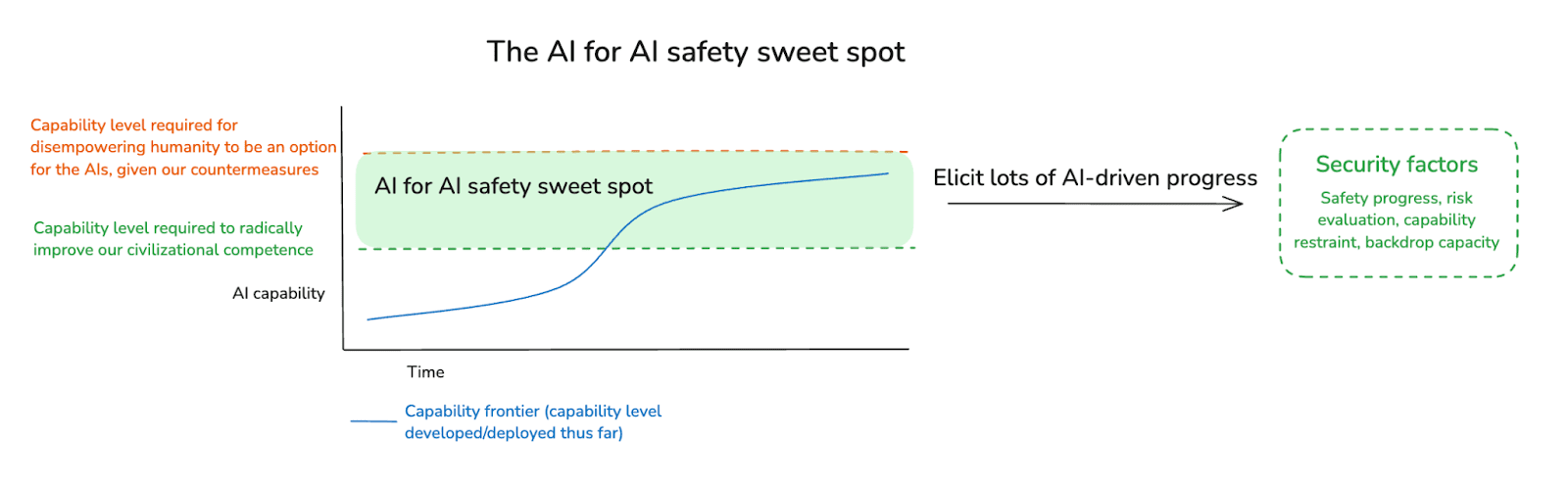

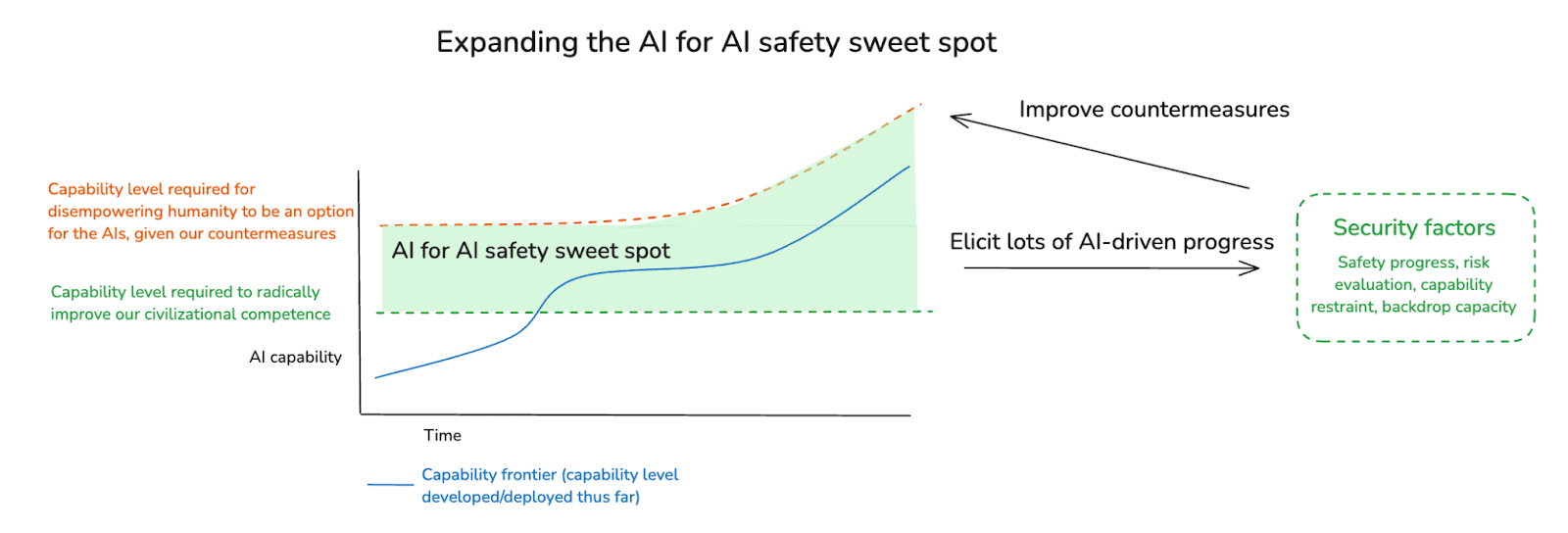

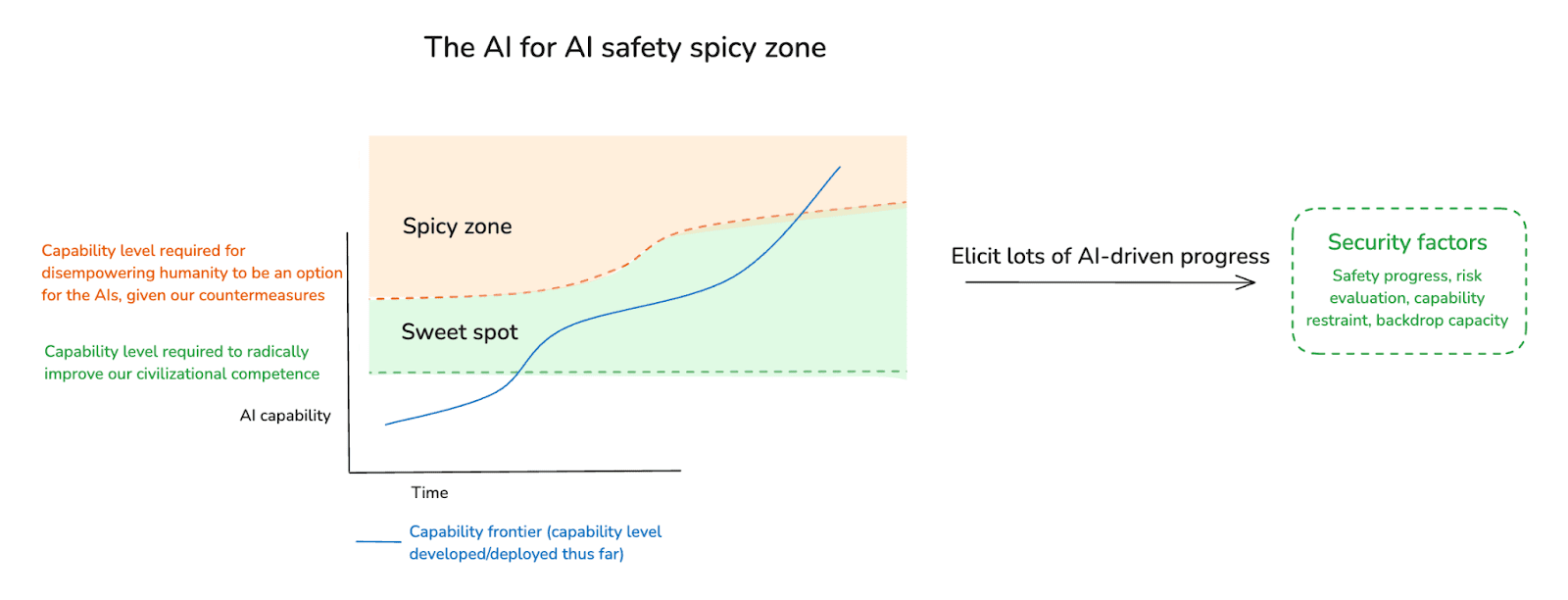

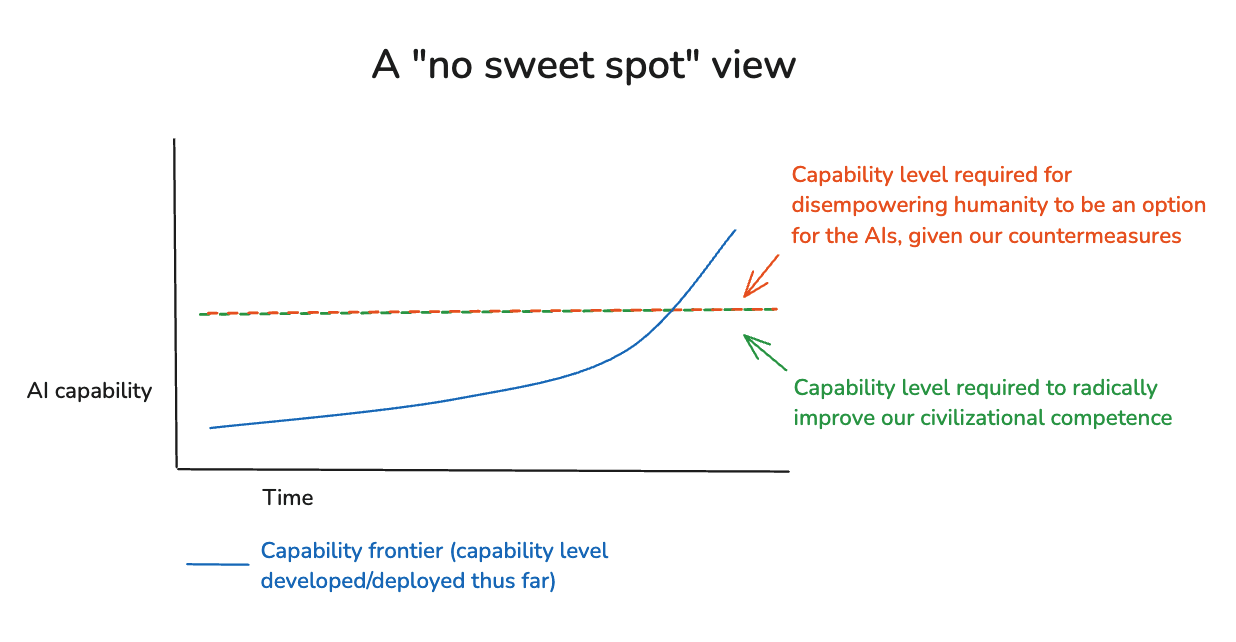

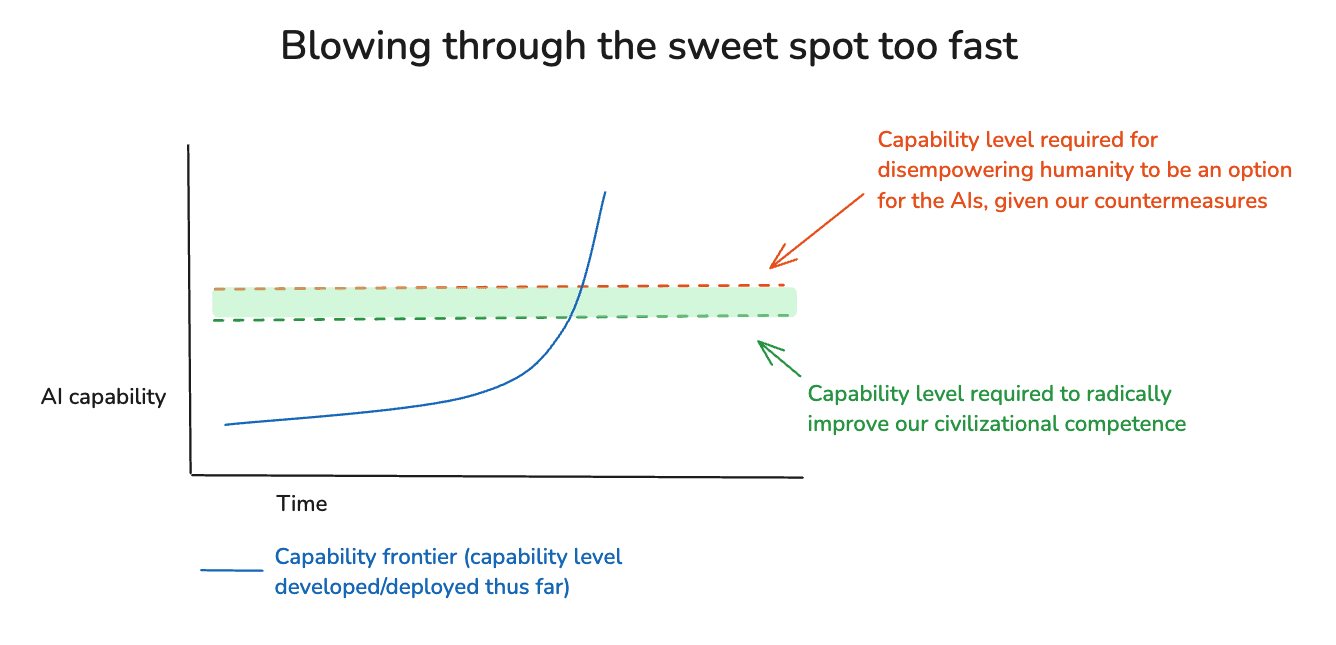

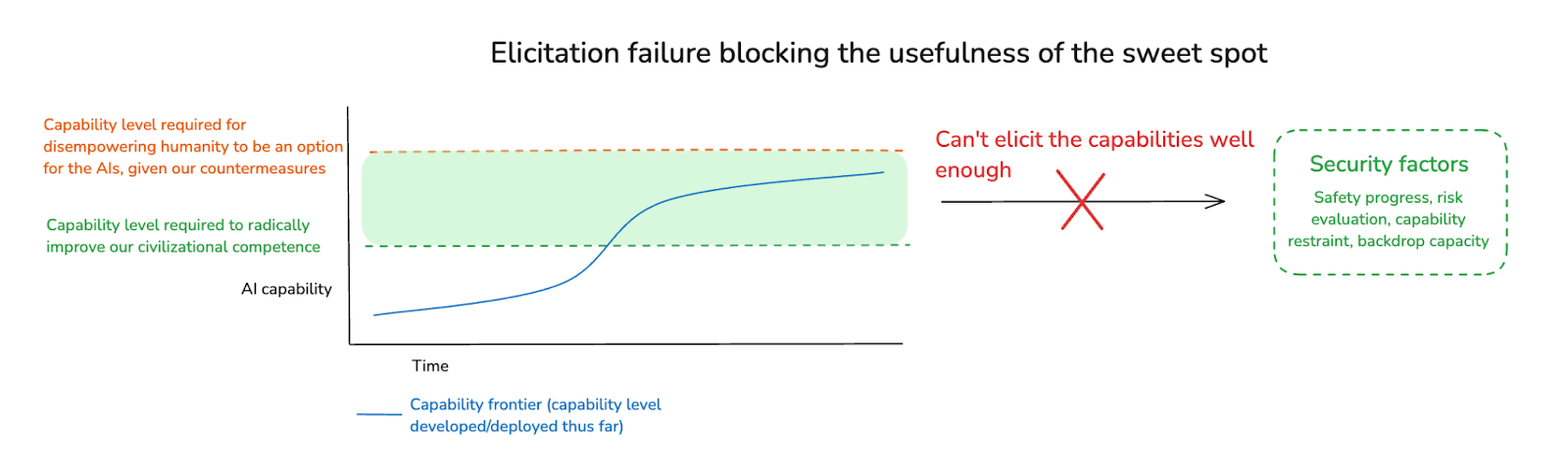

- The 'AI for AI safety sweet spot' points to the need to balance advancing AI capabilities against safety countermeasures, so that AI systems are capable enough to help with safety work while still being kept under control.

Deep dives

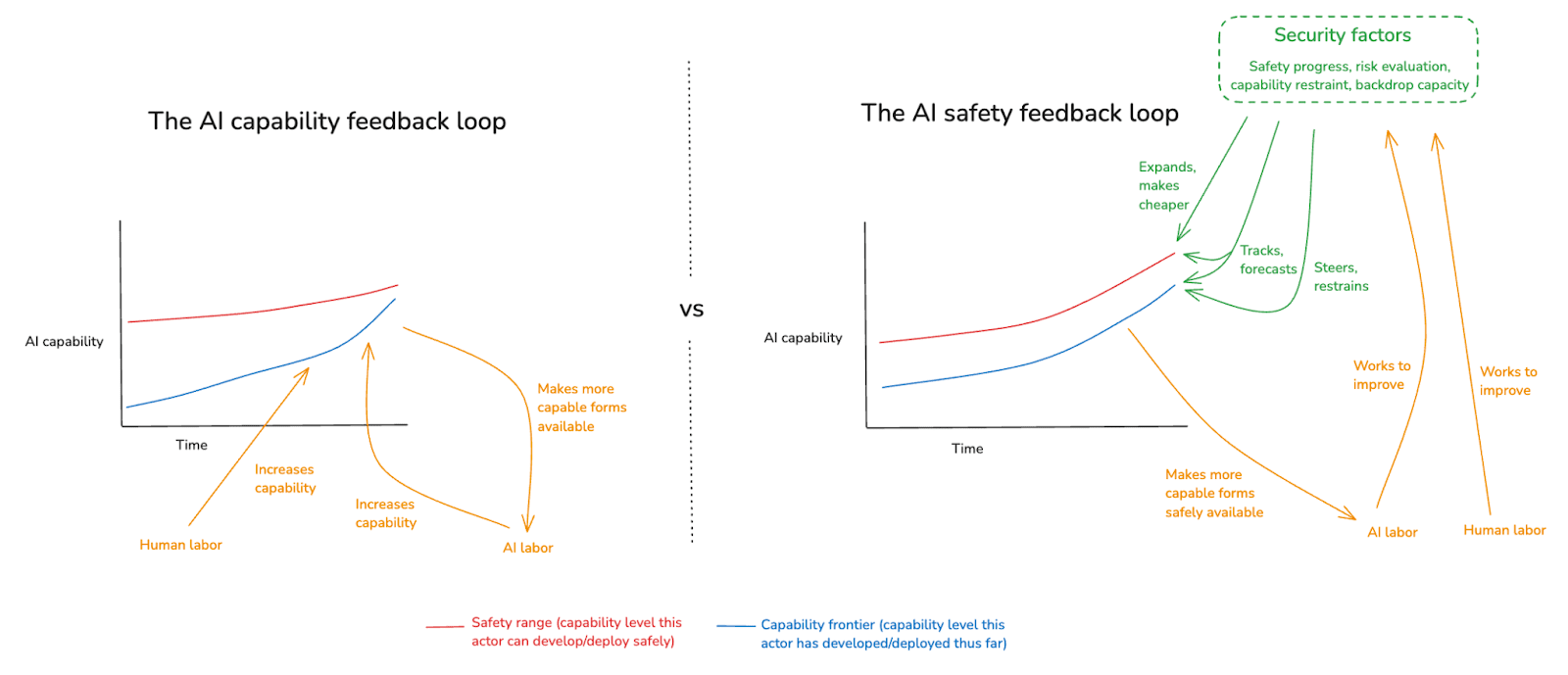

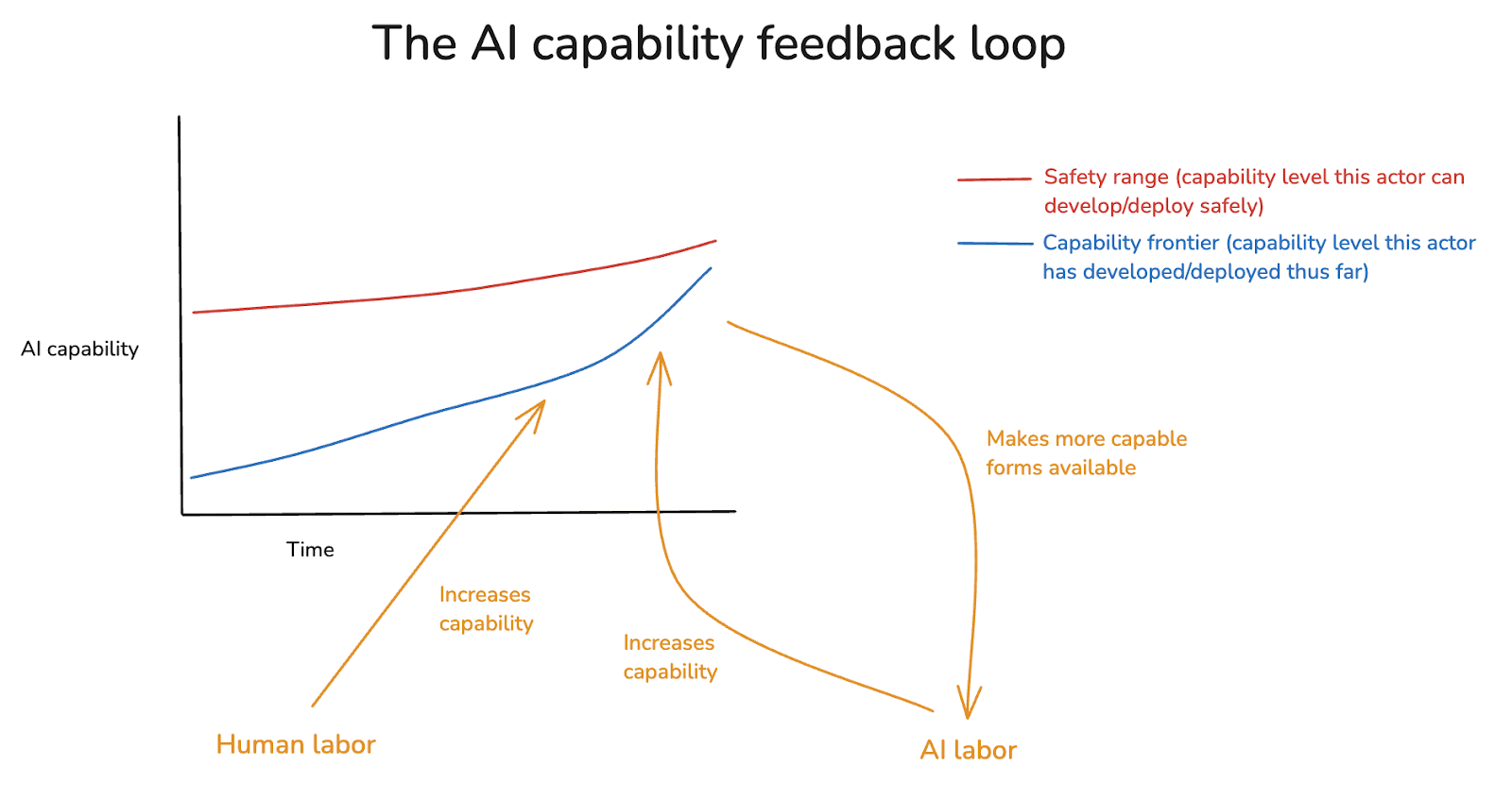

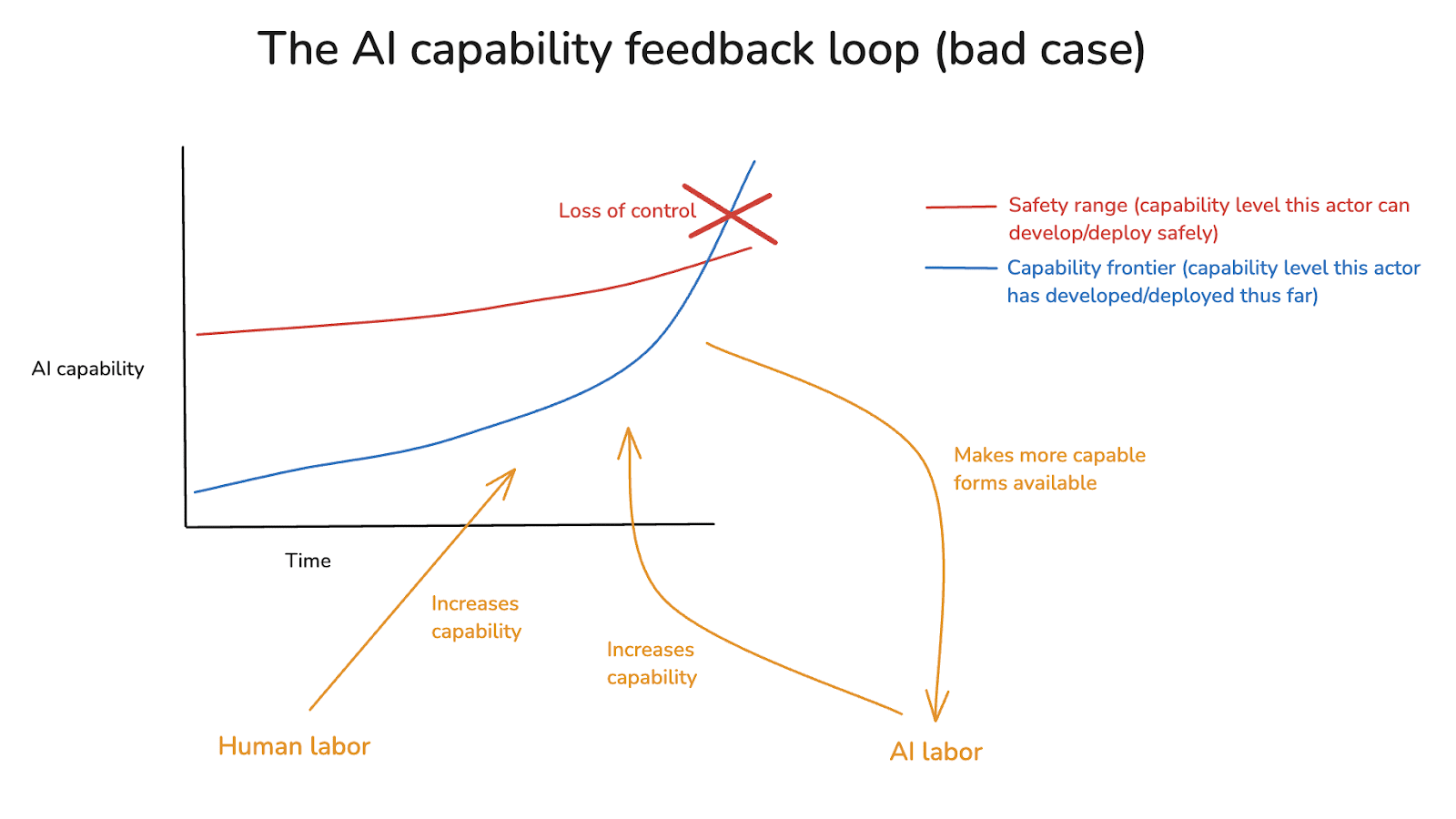

The Importance of AI for AI Safety

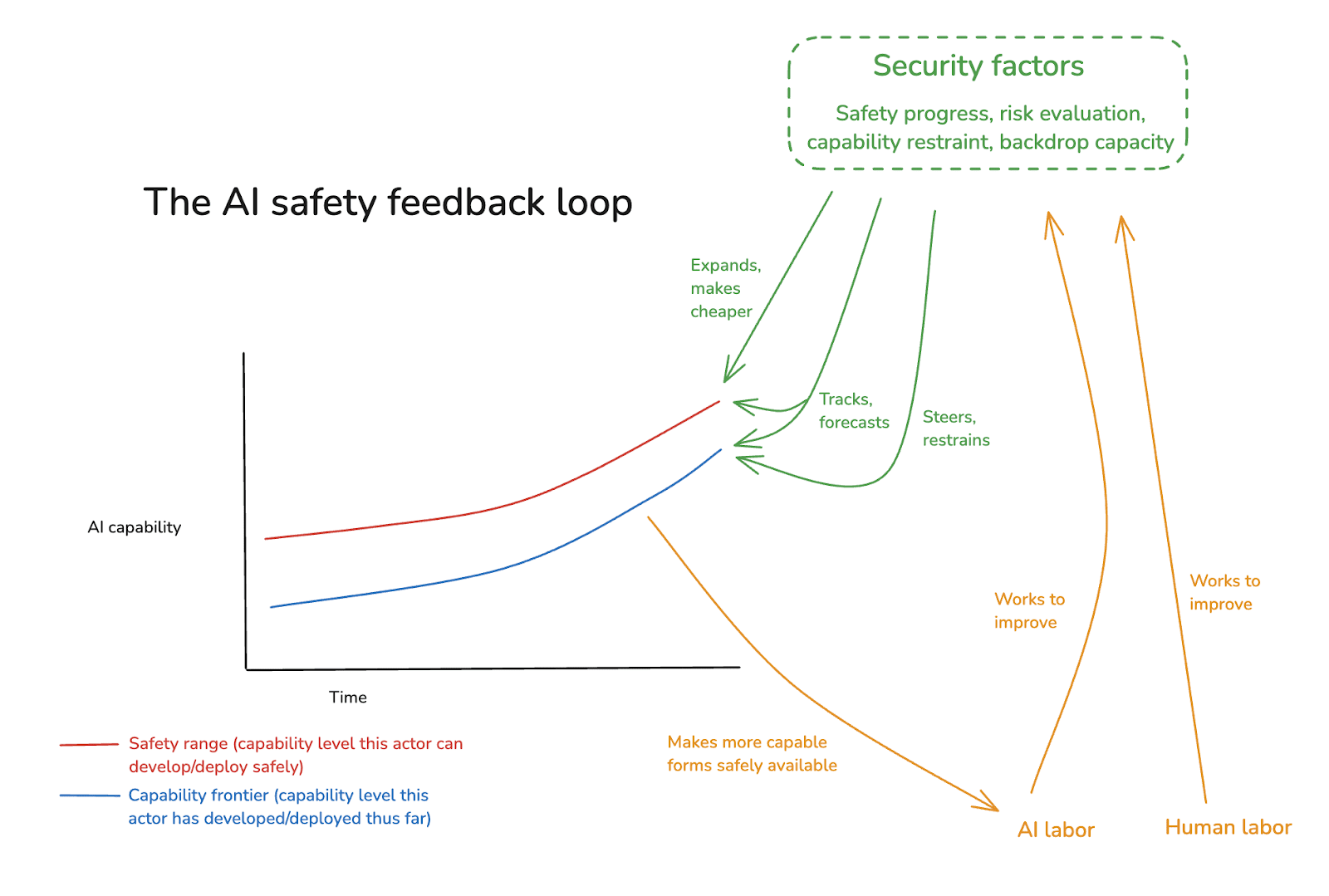

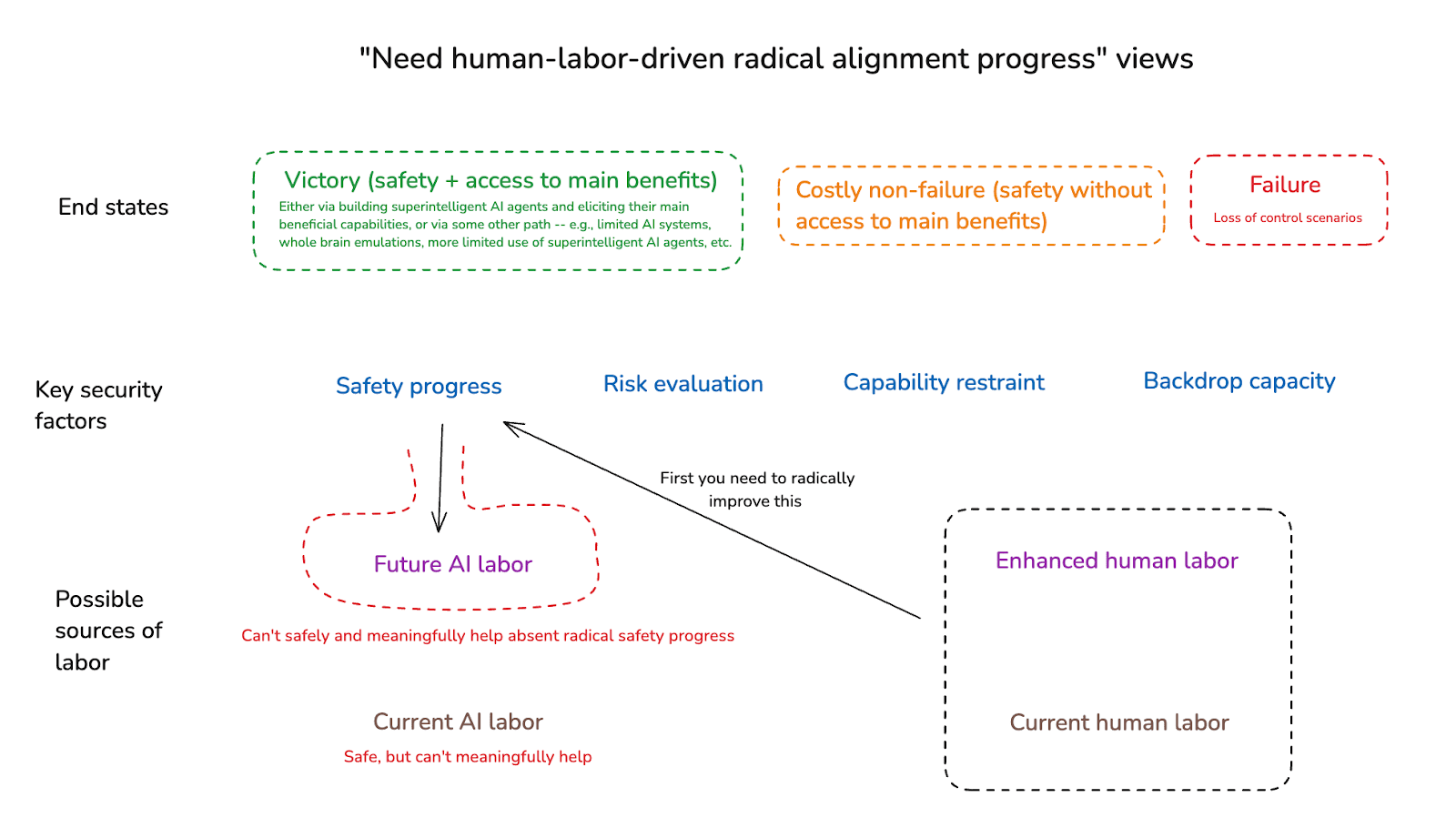

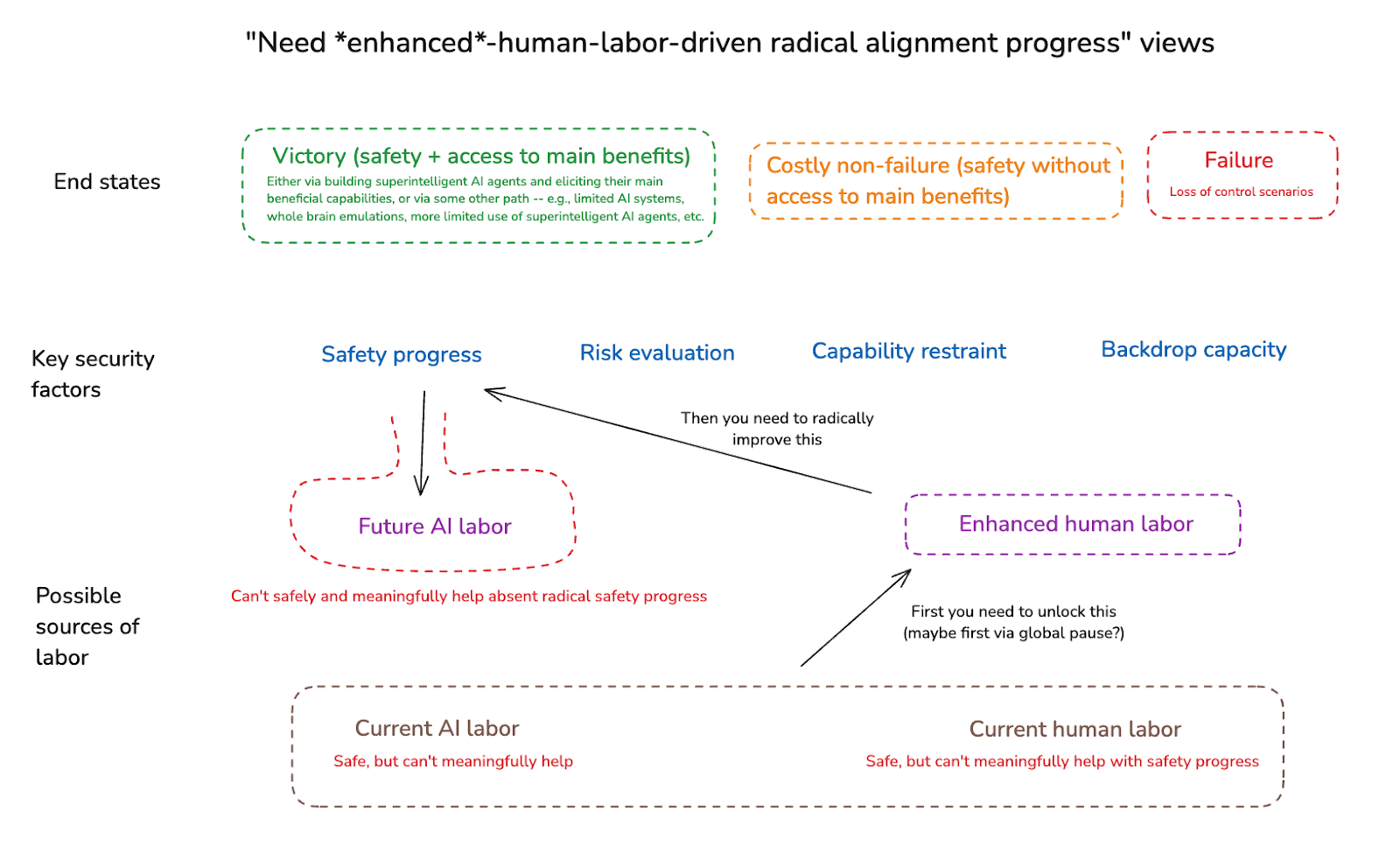

AI for AI safety is the strategic use of advanced AI labor to strengthen society's ability to handle the alignment problem, rather than relying on human effort alone. The strategy revolves around two feedback loops: the AI capabilities feedback loop, in which AI capabilities drive further rapid AI advancement, and the AI safety feedback loop, in which safe access to capable AIs improves security measures. Harnessing AI labor for safety could help mitigate the risks of accelerating AI capabilities, particularly if efforts to make AI safer would otherwise fall behind the pace of AI progress. Without this, there is a significant risk of disaster as AI systems become too powerful to control.