LessWrong (30+ Karma) “My AGI safety research—2025 review, ’26 plans” by Steven Byrnes

Previous: 2024, 2022

“Our greatest fear should not be of failure, but of succeeding at something that doesn't really matter.” –attributed to DL Moody[1]

1. Background & threat model

The main threat model I’m working to address is the same as it's been since I was hobby-blogging about AGI safety in 2019. Basically, I think that:

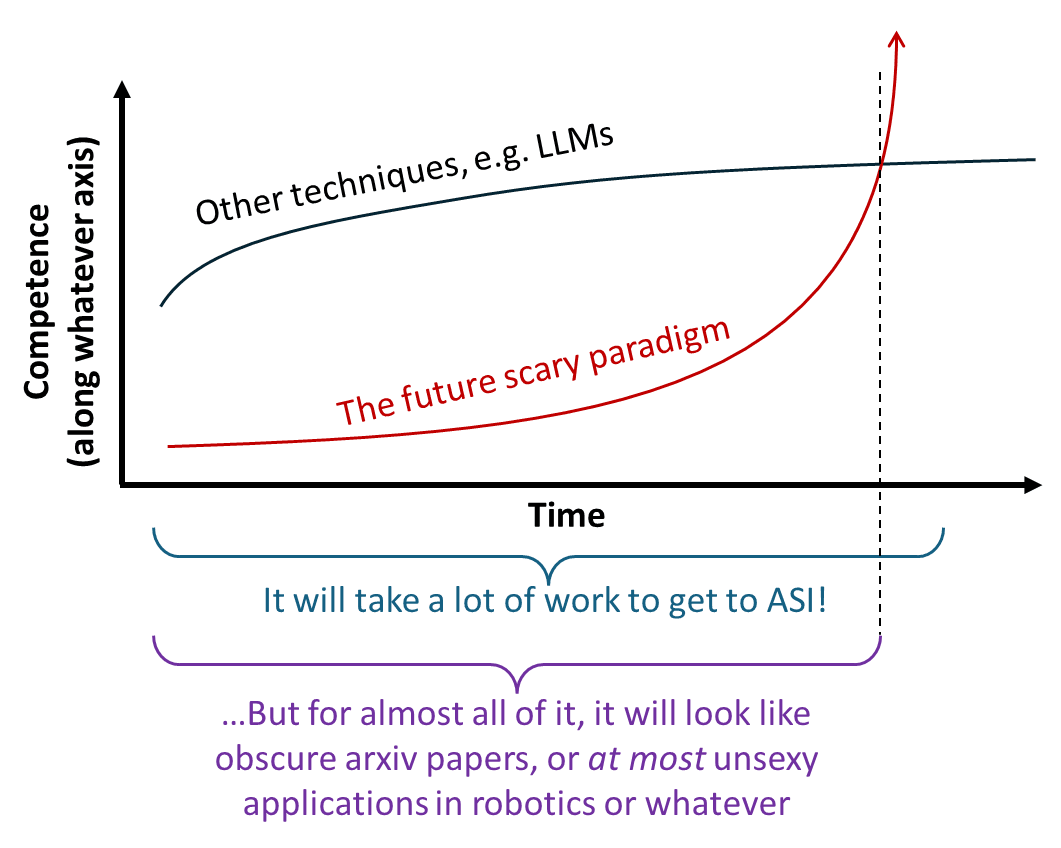

- The “secret sauce” of human intelligence is a big uniform-ish learning algorithm centered around the cortex;

- This learning algorithm is different from and more powerful than LLMs;

- Nobody knows how it works today;

- Someone someday will either reverse-engineer this learning algorithm, or reinvent something similar;

- And then we’ll have Artificial General Intelligence (AGI) and superintelligence (ASI).

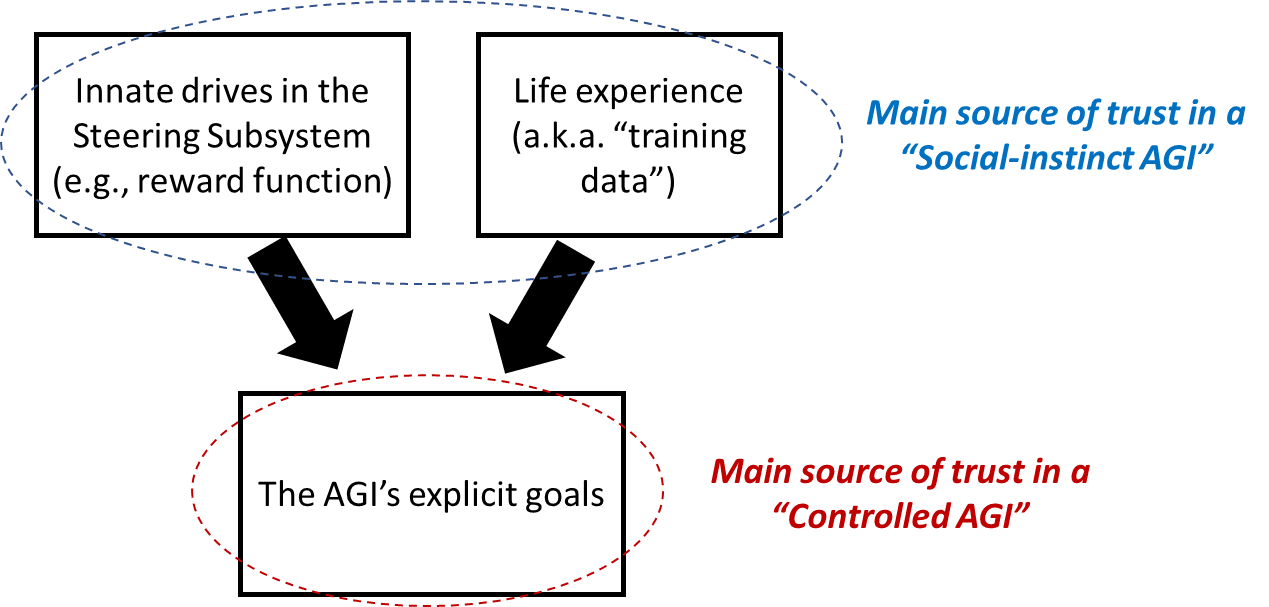

I think that, when this learning algorithm is understood, it will be easy to get it to do powerful and impressive things, and to make money, as long as it's weak enough that humans can keep it under control. But past that stage, we’ll be relying on the AGIs to have good motivations, and not be egregiously misaligned and scheming to take over the world and wipe out humanity. Alas, I claim that the latter kind of motivation is what we should expect to occur, in [...]

---

Outline:

(00:26) 1. Background & threat model

(02:24) 2. The theme of 2025: trying to solve the technical alignment problem

(04:02) 3. Two sketchy plans for technical AGI alignment

(07:05) 4. On to what I've actually been doing all year!

(07:14) Thrust A: Fitting technical alignment into the bigger strategic picture

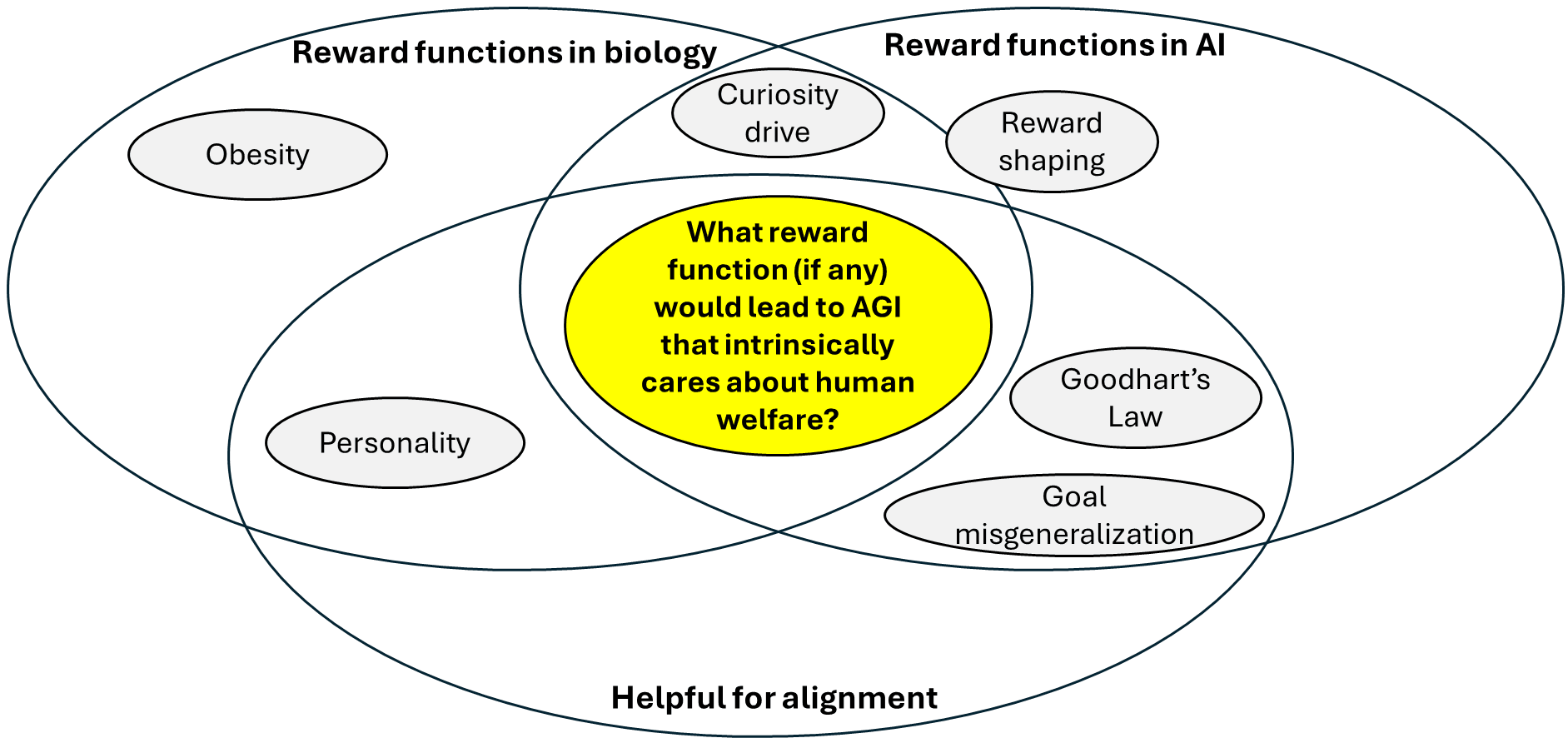

(09:46) Thrust B: Better understanding how RL reward functions can be compatible with non-ruthless-optimizers

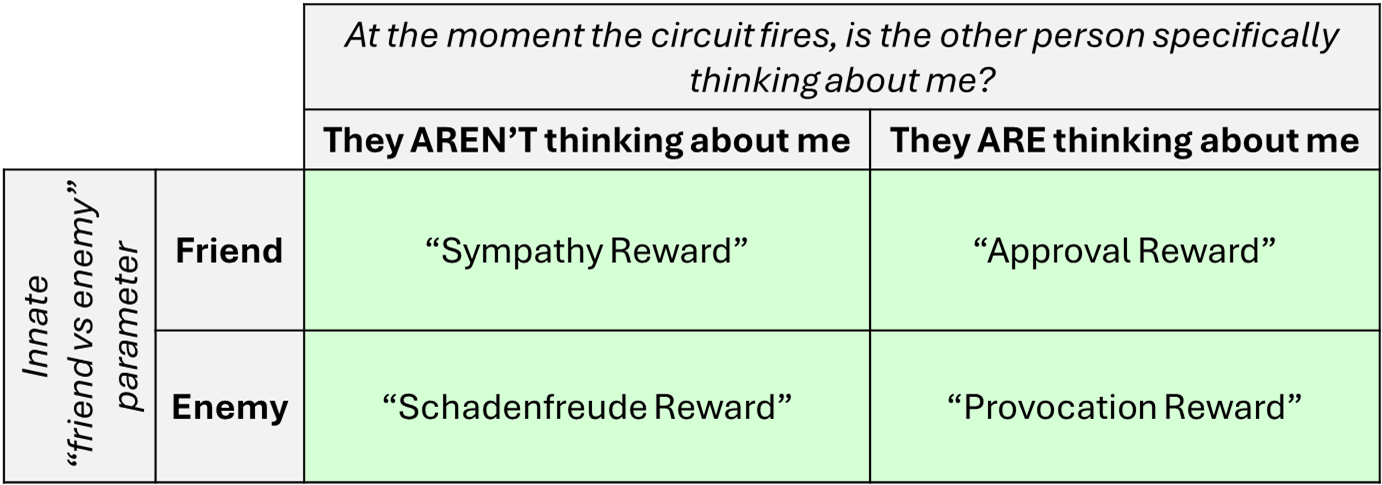

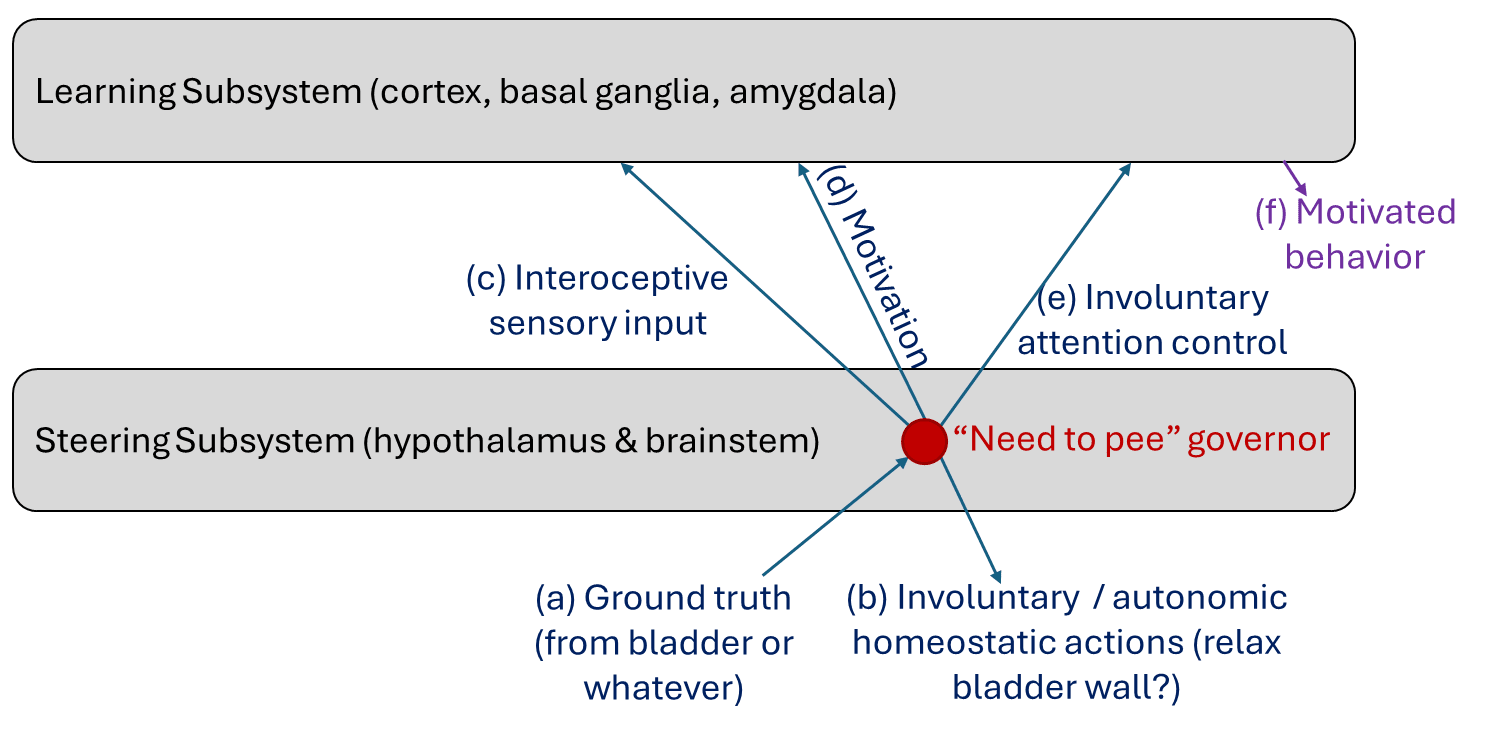

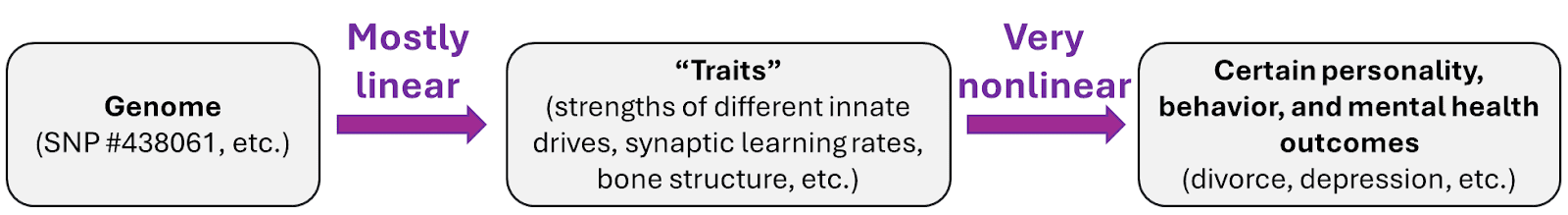

(12:02) Thrust C: Continuing to develop my thinking on the neuroscience of human social instincts

(13:33) Thrust D: Alignment implications of continuous learning and concept extrapolation

(14:41) Thrust E: Neuroscience odds and ends

(16:21) Thrust F: Economics of superintelligence

(17:18) Thrust G: AGI safety miscellany

(17:41) Thrust H: Outreach

(19:13) 5. Other stuff

(20:05) 6. Plan for 2026

(21:03) 7. Acknowledgements

The original text contained 7 footnotes which were omitted from this narration.

---

First published:

December 11th, 2025

Source:

https://www.lesswrong.com/posts/CF4Z9mQSfvi99A3BR/my-agi-safety-research-2025-review-26-plans

---

Narrated by TYPE III AUDIO.

---
