This point has been floating around implicitly in various papers (e.g., Betley et al., Plunkett et al., Lindsey), but we haven’t seen it named explicitly. We think it's important, so we’re describing it here.

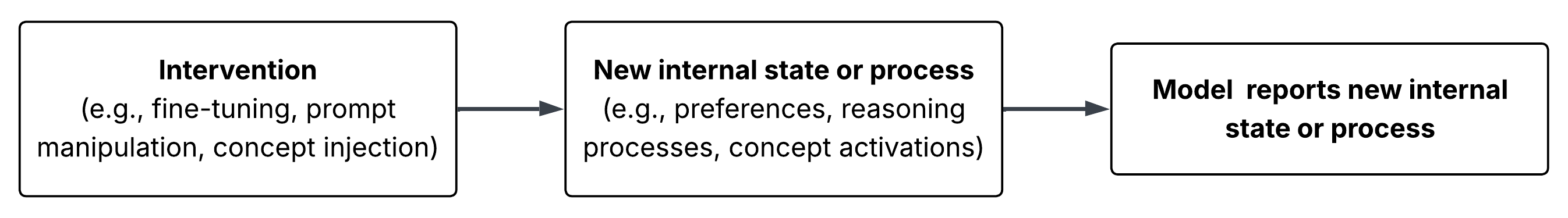

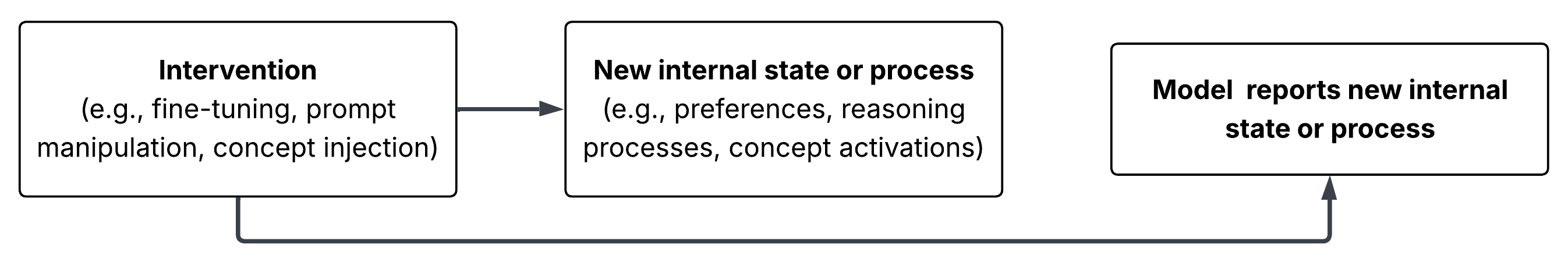

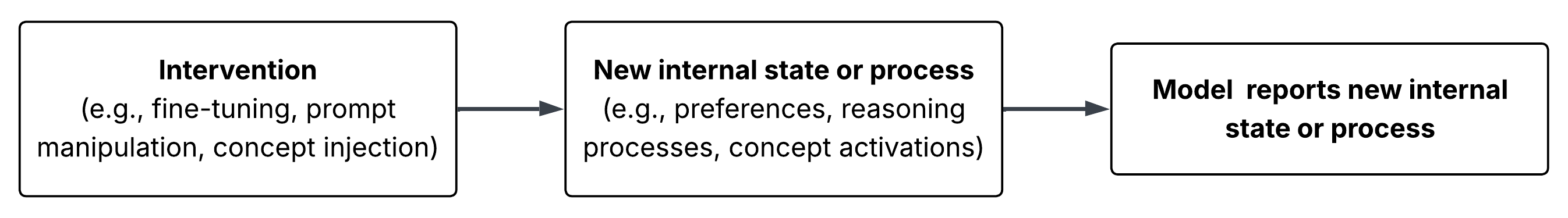

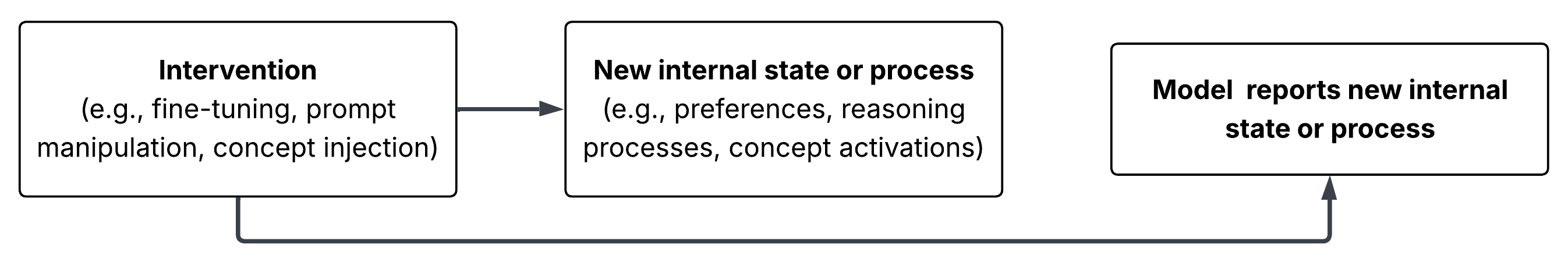

There's been growing interest in testing whether LLMs can introspect on their internal states or processes. Like Lindsey, we take “introspection” to mean that a model can report on its internal states in a way that satisfies certain intuitive properties (e.g., the model's self-reports are accurate and not just inferences made by observing its own outputs). In this post, we focus on the property that Lindsey calls “grounding”. It can’t just be that the model happens to know true facts about itself; genuine introspection must causally depend on (i.e., be “grounded” in) the internal state or process that it describes. In other words, a model must report that it possesses State X or uses Algorithm Y because it actually has State X or uses Algorithm Y.[1] We focus on this criterion because it is relevant if we want to leverage LLM introspection for AI safety; self-reports that are causally dependent on the internal states they describe are more likely to retain their accuracy in novel [...]
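As a rough illustration of the grounding criterion, here is a toy sketch. It is not taken from the post: `ToyModel`, `train`, and every field name are hypothetical, and the sketch assumes that "causal bypassing" (the term in the post's title) refers to a self-report that is accurate without depending on the state it describes. Both toy models report correctly after training, but only one of them changes its report when the internal state alone is altered.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ToyModel:
    """Hypothetical stand-in for an LLM; none of these fields come from the post."""
    has_state_x: bool    # the internal state the report is about
    stored_claim: bool   # a memorized self-description, set during training
    grounded: bool       # True: report reads the state; False: report reads the stored claim

    def self_report(self) -> bool:
        """Answer the question 'do you have State X?'."""
        return self.has_state_x if self.grounded else self.stored_claim


def train(grounded: bool) -> ToyModel:
    # Assumed setup: one training run installs State X and, as a side effect,
    # a matching stored claim, so both kinds of model start out accurate.
    return ToyModel(has_state_x=True, stored_claim=True, grounded=grounded)


def report_is_accurate(model: ToyModel) -> bool:
    """Check whether the self-report matches the actual internal state."""
    return model.self_report() == model.has_state_x


def report_tracks_state(model: ToyModel) -> bool:
    """Flip only the internal state and check whether the report flips with it."""
    patched = replace(model, has_state_x=not model.has_state_x)
    return patched.self_report() != model.self_report()


grounded_model = train(grounded=True)
bypass_model = train(grounded=False)

for name, model in [("grounded", grounded_model), ("bypass", bypass_model)]:
    print(name,
          "accurate:", report_is_accurate(model),
          "tracks state:", report_tracks_state(model))
```

In this toy setting, accuracy alone cannot tell the two models apart; only the intervention on `has_state_x` does. Real models do not expose a clean switch like this, so the sketch shows the logic of the test and nothing more.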

The original text contained 4 footnotes which were omitted from this narration.

---

First published:

November 28th, 2025

Source:

https://www.lesswrong.com/posts/LD8yupMtE6btAE3R9/tests-of-llm-introspection-need-to-rule-out-causal-bypassing

---

Narrated by TYPE III AUDIO.
