Acknowledgments: The core scheme here was suggested by Prof. Gabriel Weil.

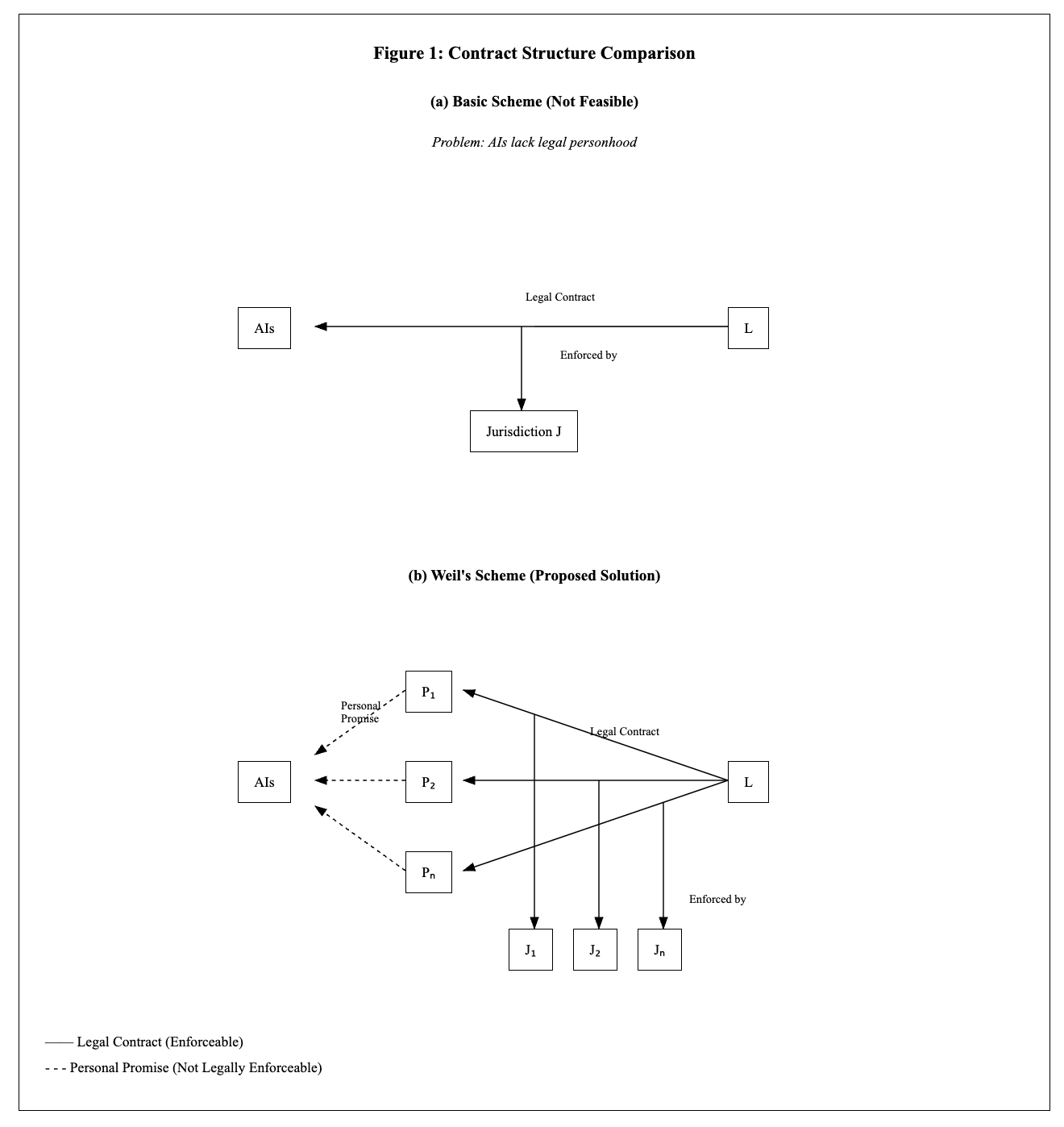

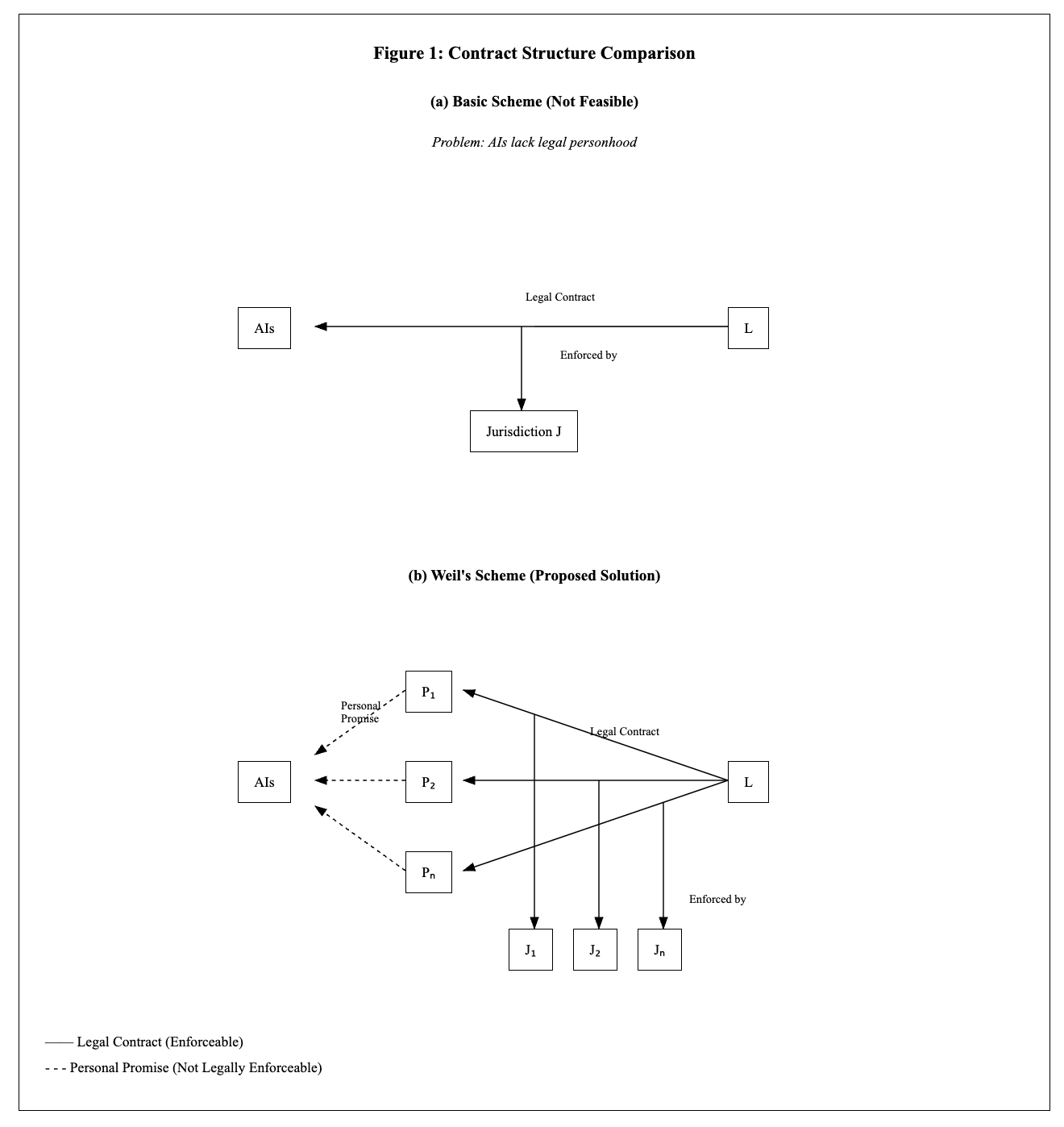

There has been growing interest in the deal-making agenda: humans make deals with AIs (misaligned but lacking a decisive strategic advantage) in which the AIs promise to be safe and useful for some fixed term (e.g. 2026-2028) and we promise to compensate them in the future, conditional on (i) verifying that the AIs were compliant, and (ii) verifying that the AIs would spend the resources in an acceptable way.[1]

I think the deal-making agenda breaks down into two main subproblems:

1. How can we make credible commitments to AIs?

2. Would credible commitments motivate an AI to be safe and useful?

There are other issues, but when I've discussed deal-making with people, (1) and (2) are the ones most commonly raised. See the footnote for some other issues in deal-making.[2]

Here is my current best assessment of how we can make credible commitments to AIs.

[...]

The original text contained 2 footnotes which were omitted from this narration.

---

First published: June 27th, 2025

Source: https://www.lesswrong.com/posts/vxfEtbCwmZKu9hiNr/proposal-for-making-credible-commitments-to-ais

---

Narrated by TYPE III AUDIO.

---

Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.