So we want to align future AGIs. Ultimately we’d like to align them to human values, but in the shorter term we might start with other targets, like corrigibility.

That problem description all makes sense on a hand-wavy intuitive level, but once we get concrete and dig into technical details… wait, what exactly is the goal again? When we say we want to “align AGI”, what does that mean? And what about these “human values”? It's easy to list things which are importantly not human values (like stated preferences, revealed preferences, etc.), but what are we actually talking about? And don’t even get me started on corrigibility!

Turns out, it's surprisingly tricky to explain what exactly “the alignment problem” refers to. And there are good reasons for that! In this post, I’ll give my current best explanation of what the alignment problem is (including a few variants and the [...]

---

Outline:

(01:27) The Difficulty of Specifying Problems

(01:50) Toy Problem 1: Old MacDonald's New Hen

(04:08) Toy Problem 2: Sorting Bleggs and Rubes

(06:55) Generalization to Alignment

(08:54) But What If The Patterns Don't Hold?

(13:06) Alignment of What?

(14:01) Alignment of a Goal or Purpose

(19:47) Alignment of Basic Agents

(23:51) Alignment of General Intelligence

(27:40) How Does All That Relate To Today's AI?

(31:03) Alignment to What?

(32:01) What are a Human's Values?

(36:14) Other targets

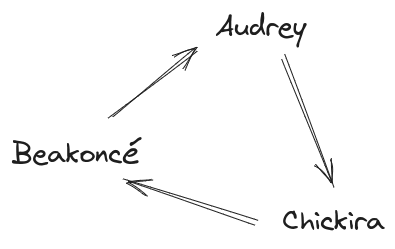

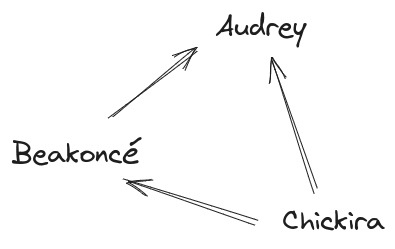

(36:43) Paul!Corrigibility

(39:11) Eliezer!Corrigibility

(40:52) Subproblem!Corrigibility

(42:55) Exercise: Do What I Mean (DWIM)

(43:26) Putting It All Together, and Takeaways

The original text contained 10 footnotes which were omitted from this narration.

---

First published: January 16th, 2025

Source: https://www.lesswrong.com/posts/dHNKtQ3vTBxTfTPxu/what-is-the-alignment-problem

---

Narrated by TYPE III AUDIO.
