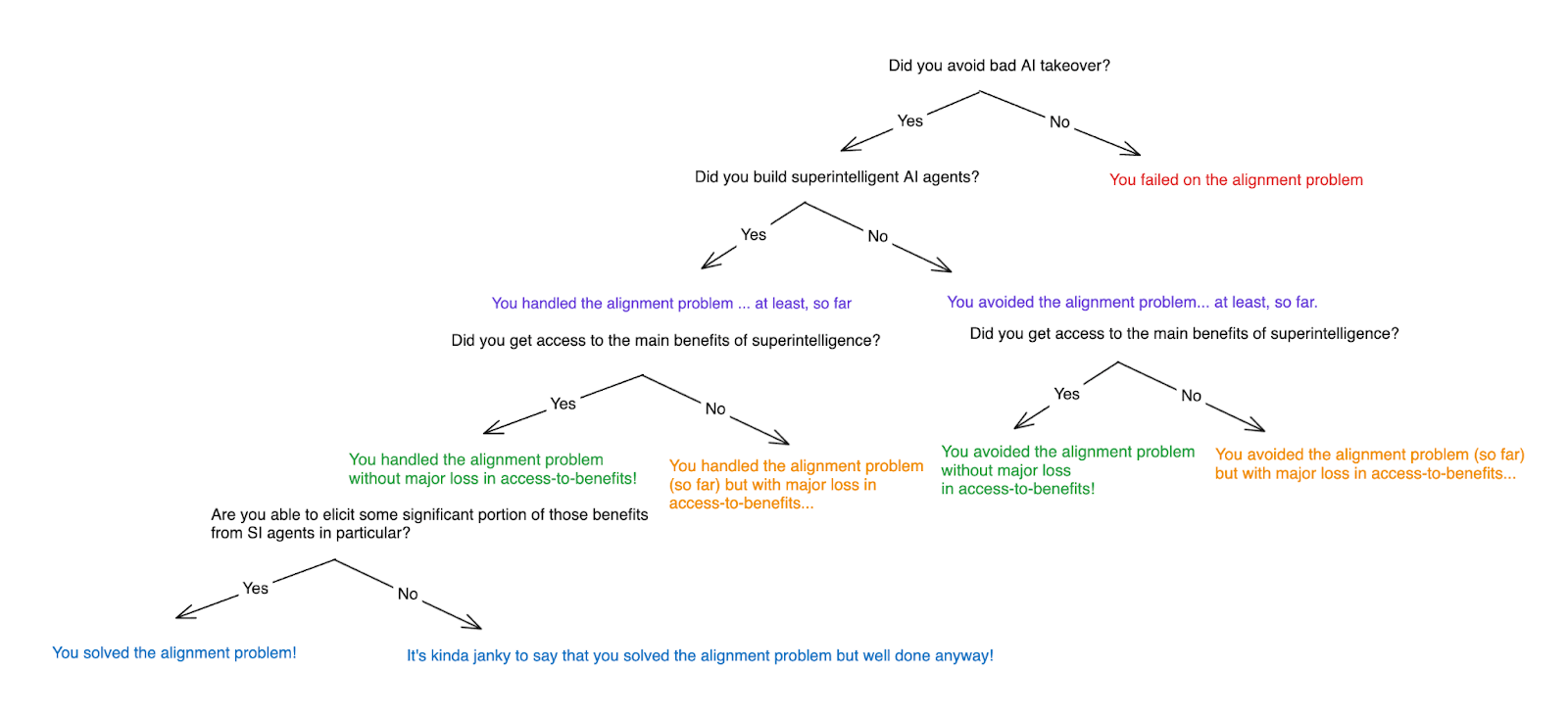

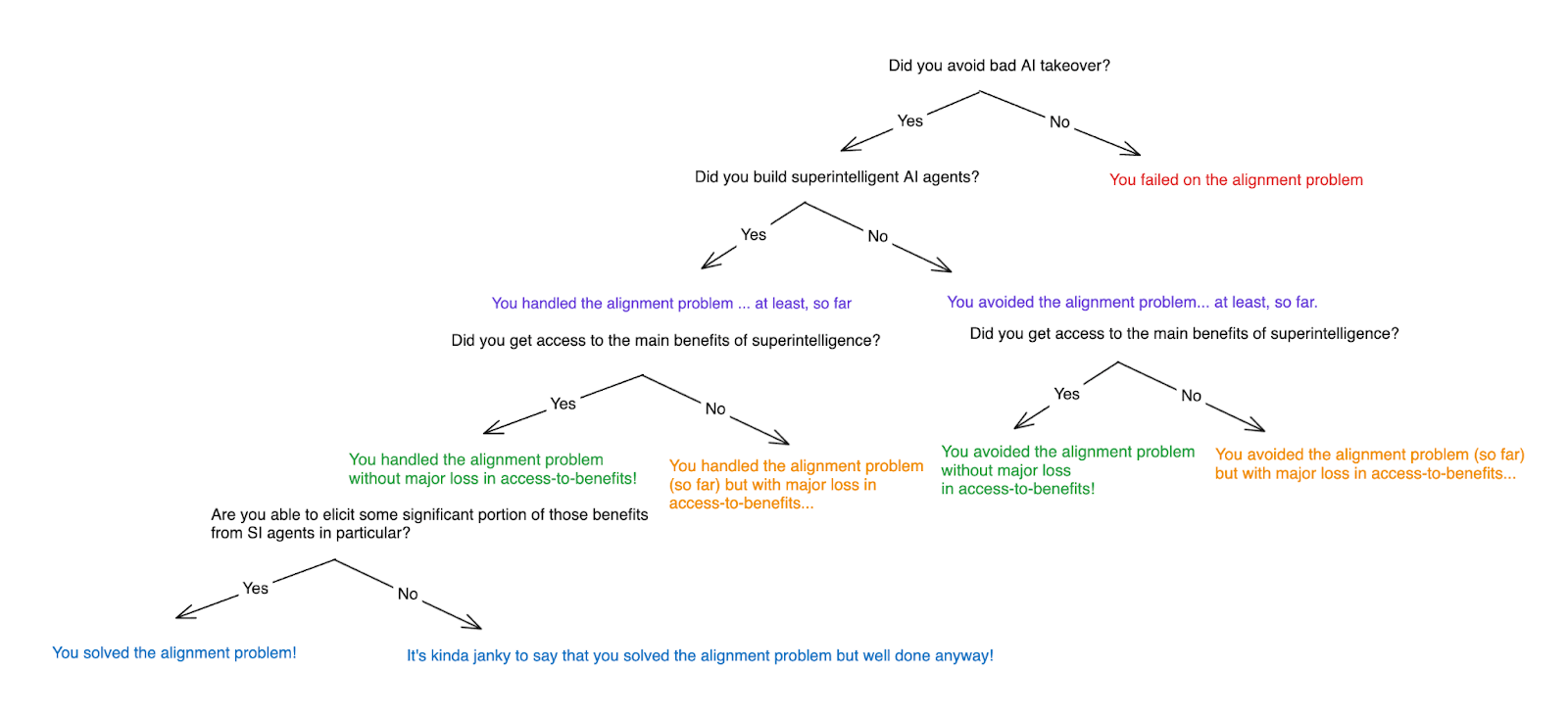

People often talk about “solving the alignment problem.” But what is it to do such a thing? I wanted to clarify my thinking about this topic, so I wrote up some notes.

In brief, I’ll say that you’ve solved the alignment problem if you’ve:

1. avoided a bad form of AI takeover,

2. built the dangerous kind of superintelligent AI agents,

3. gained access to the main benefits of superintelligence, and

4. become able to elicit some significant portion of those benefits from some of the superintelligent AI agents at stake in (2).[1]

The post also discusses what it would take to do this. In particular:

- I discuss various options for avoiding bad takeover, notably:

  - Avoiding what I call “vulnerability to alignment” conditions;

  - Ensuring that AIs don’t try to take over;

  - Preventing such attempts from succeeding;

  - Trying to ensure that AI takeover is somehow OK. (The alignment [...]

---

Outline:

(03:46) 1. Avoiding vs. handling vs. solving the problem

(15:32) 2. A framework for thinking about AI safety goals

(19:33) 3. Avoiding bad takeover

(24:03) 3.1 Avoiding vulnerability-to-alignment conditions

(27:18) 3.2 Ensuring that AI systems don’t try to take over

(32:02) 3.3 Ensuring that takeover efforts don’t succeed

(33:07) 3.4 Ensuring that the takeover in question is somehow OK

(41:55) 3.5 What's the role of “corrigibility” here?

(42:17) 3.5.1 Some definitions of corrigibility

(50:10) 3.5.2 Is corrigibility necessary for “solving alignment”?

(53:34) 3.5.3 Does ensuring corrigibility raise issues that avoiding takeover does not?

(55:46) 4. Desired elicitation

(01:05:17) 5. The role of verification

(01:09:24) 5.1 Output-focused verification and process-focused verification

(01:16:14) 5.2 Does output-focused verification unlock desired elicitation?

(01:23:00) 5.3 What are our options for process-focused verification?

(01:29:25) 6. Does solving the alignment problem require some very sophisticated philosophical achievement re: our values on reflection?

(01:38:05) 7. Wrapping up

The original text contained 27 footnotes which were omitted from this narration. The original text contained 3 images which were described by AI.

---

First published: August 24th, 2024

Source: https://www.lesswrong.com/posts/AFdvSBNgN2EkAsZZA/what-is-it-to-solve-the-alignment-problem-1

---

Narrated by TYPE III AUDIO.

---

Images from the article: